Compact Muon Solenoid

LHC, CERN

| CMS-PRF-21-001 ; CERN-EP-2023-136 | ||

| Development of the CMS detector for the CERN LHC Run 3 | ||

| CMS Collaboration | ||

| 8 September 2023 | ||

| JINST 19 (2024) P05064 | ||

| Abstract: Since the initial data taking of the CERN LHC, the CMS experiment has undergone substantial upgrades and improvements. This paper discusses the CMS detector as it is configured for the third data-taking period of the CERN LHC, Run 3, which started in 2022. The entire silicon pixel tracking detector was replaced. A new powering system for the superconducting solenoid was installed. The electronics of the hadron calorimeter was upgraded. All the muon electronic systems were upgraded, and new muon detector stations were added, including a gas electron multiplier detector. The precision proton spectrometer was upgraded. The dedicated luminosity detectors and the beam loss monitor were refurbished. Substantial improvements to the trigger, data acquisition, software, and computing systems were also implemented, including a new hybrid CPU/GPU farm for the high-level trigger. | ||

| Links: e-print arXiv:2309.05466 [hep-ex] (PDF) ; CDS record ; inSPIRE record ; CADI line (restricted) ; | ||

| Figures | |

png pdf |

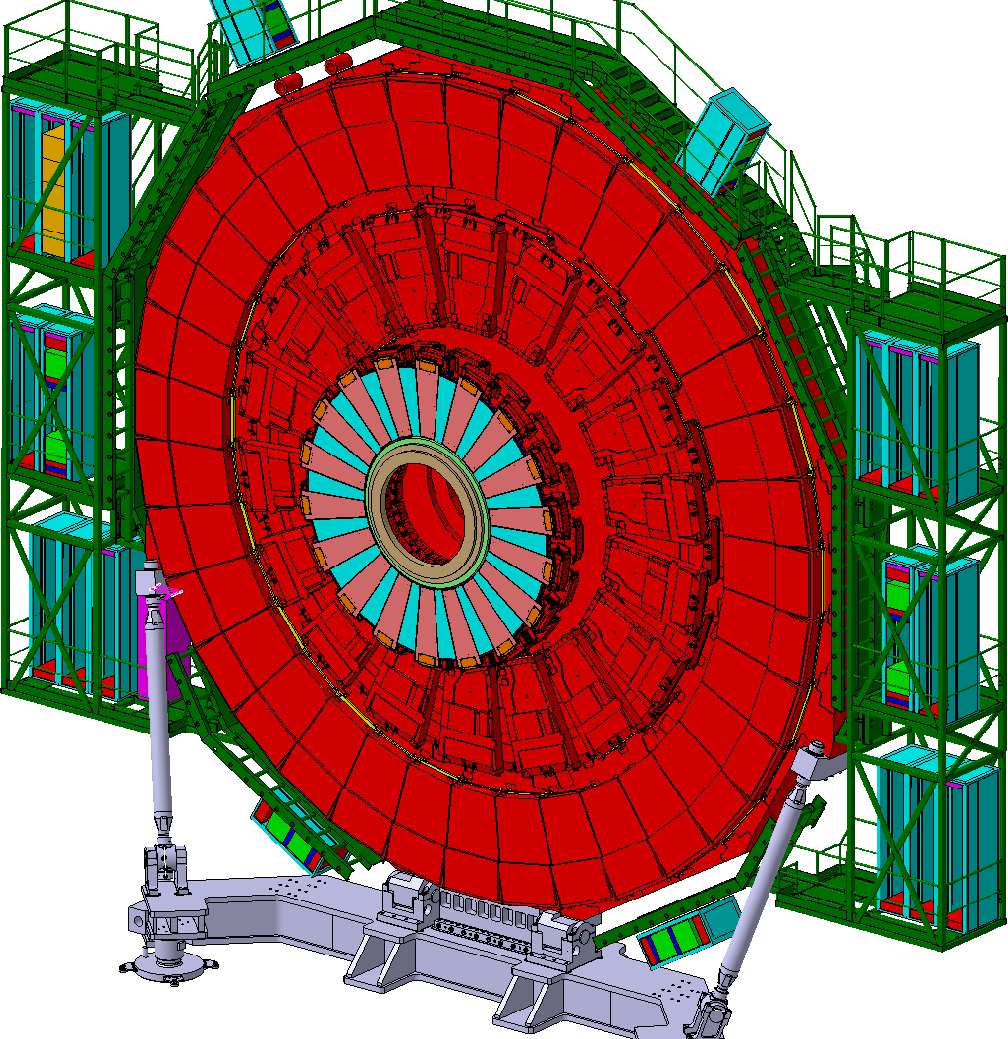

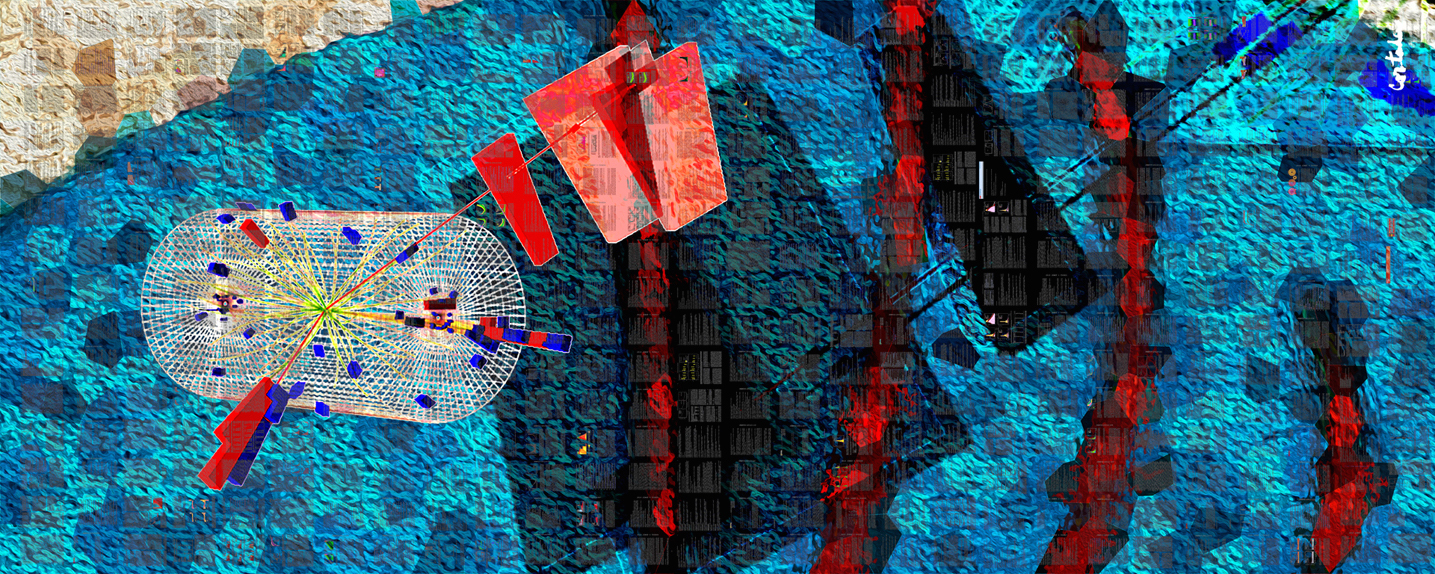

Figure 1:

Schematic drawing of the CMS detector. |

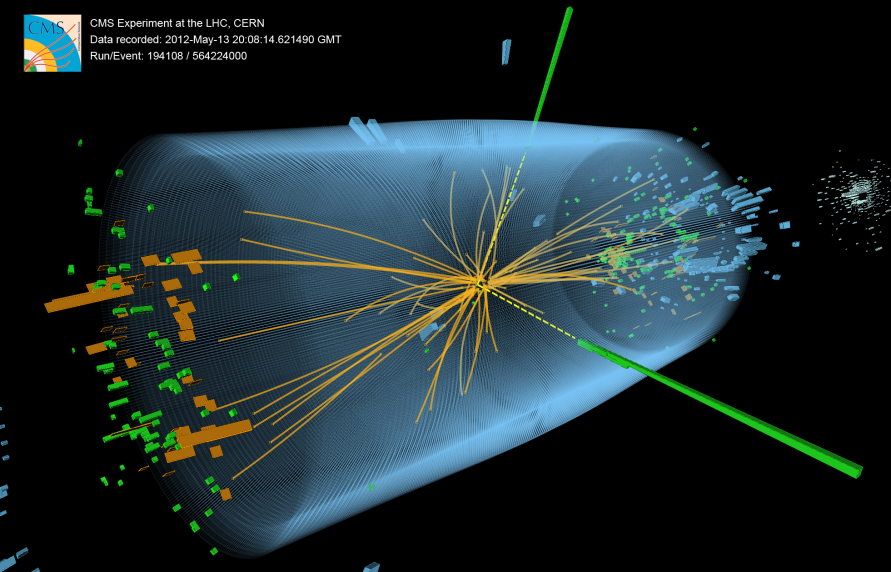

png pdf jpg |

Figure 2:

The solenoid magnet cryostat within the open CMS detector. |

png pdf |

Figure 3:

Photographs of a part of the new vacuum pumping circuit (left) and of the new CMS FWT system installed in the CMS service cavern (right). |

png |

Figure 3-a:

Photograph of a part of the new vacuum pumping circuit. |

png pdf |

Figure 4:

The CMS magnet current ramp and discharge modes representing the various magnet operation procedures and their duration. A current of 18164 A corresponds to a magnetic field of 3.8 T. |

png pdf |

Figure 5:

Longitudinal view of the Phase 1-upgraded pixel detector compared to the original detector layout. |

png pdf |

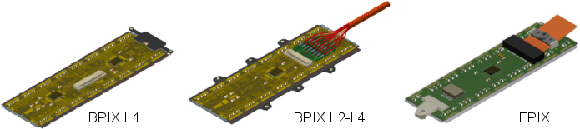

Figure 6:

Drawings of the pixel detector modules for BPIX L1, BPIX L2-4, and the FPIX detector. |

png pdf |

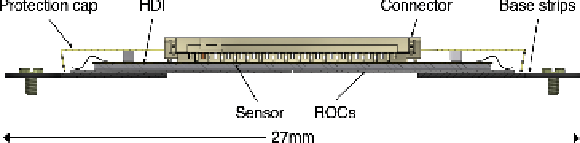

Figure 7:

Cross sectional view of a pixel detector module for BPIX L2-4, cut along the short side of the module. |

png pdf |

Figure 8:

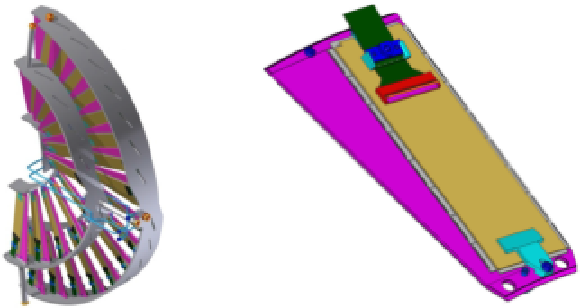

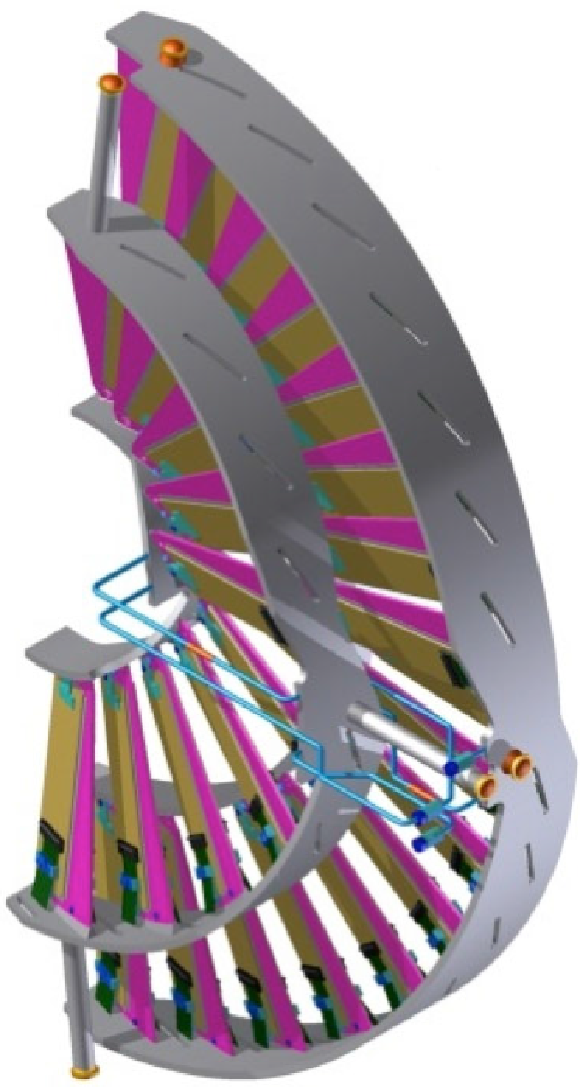

Drawing of an FPIX half-disk made from two half-rings of modules mounted on blades that are suspended between graphite rings (left), and close-up view of a module mounted on a blade (right). |

png pdf |

Figure 8-a:

Drawing of an FPIX half-disk made from two half-rings of modules mounted on blades that are suspended between graphite rings. |

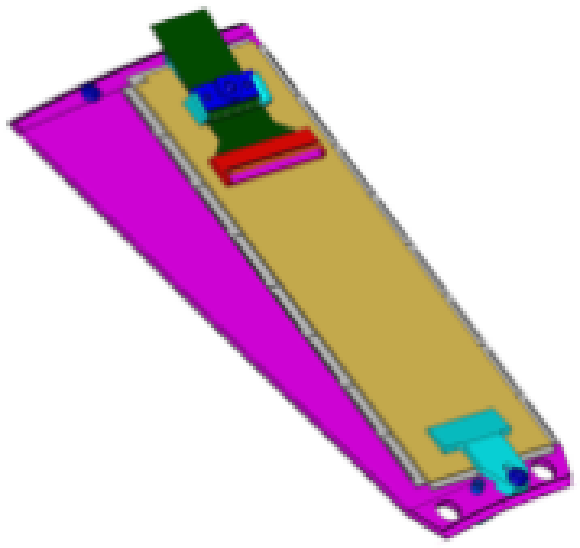

png pdf |

Figure 8-b:

Close-up view of a module mounted on a blade. |

png pdf |

Figure 9:

Schematic view of one quadrant in the $ r $-$ z $ view of the CMS tracker: single-sided and double-sided strip modules are depicted as red and blue segments, respectively. The pixel detector is shown in green. |

png pdf |

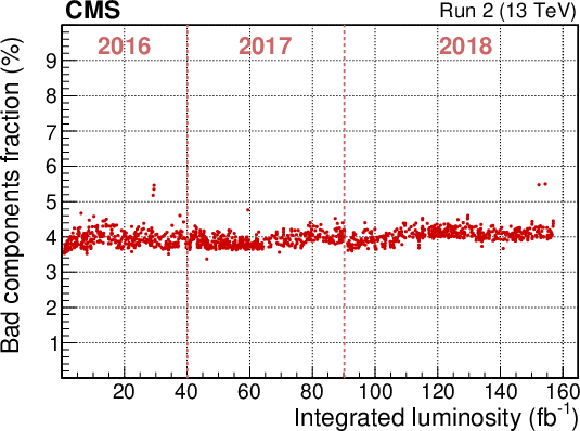

Figure 10:

Fraction of bad components for the CMS silicon strip detector as a function of the delivered integrated luminosity [32]. |

png pdf |

Figure 11:

Left: signal-to-noise ratio as a function of integrated luminosity as accumulated in pp collisions during Run 2, separately for the different detector partitions. Triangles and crosses indicate the results for sensors in the TEC of thickness 320 and 500 $\mu$m, respectively. Right: hit efficiency of the silicon strip detector taken from a representative run recorded in 2018 [32] with an average hit efficiency under typical conditions at an instantaneous luminosity of 1.11 $ \times$ 10$^{34}$ cm$^{-2}$s$^{-1}$. The two gray bands represent regions where the hit efficiency is not measured due to the selection criteria of the analysis [32]. |

png pdf |

Figure 11-a:

Signal-to-noise ratio as a function of integrated luminosity as accumulated in pp collisions during Run 2, separately for the different detector partitions. Triangles and crosses indicate the results for sensors in the TEC of thickness 320 and 500 $\mu$m, respectively. |

png pdf |

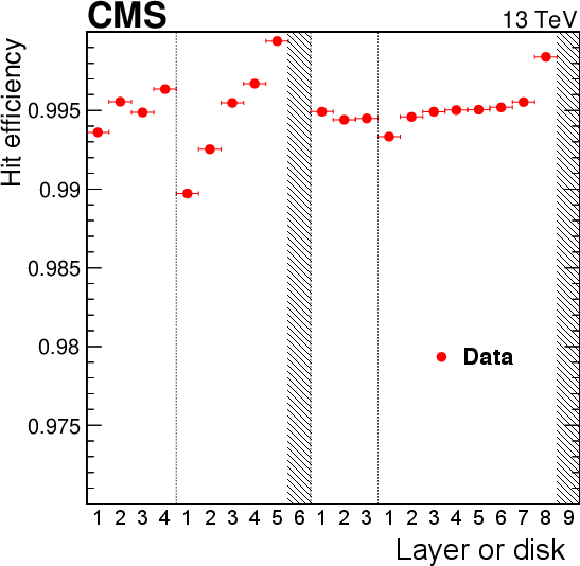

Figure 11-b:

Hit efficiency of the silicon strip detector taken from a representative run recorded in 2018 [32] with an average hit efficiency under typical conditions at an instantaneous luminosity of 1.11 $ \times$ 10$^{34}$ cm$^{-2}$s$^{-1}$. The two gray bands represent regions where the hit efficiency is not measured due to the selection criteria of the analysis [32]. |

png pdf |

Figure 12:

Evolution of the full depletion voltage for one TIB layer-1 sensor as a function of the integrated luminosity and fluence until the end of Run 2. The full depletion voltage is measured from the cluster-width variable, shown as black dots, and the predicted curve is based on a model that uses fluence and temperature history as inputs [34]. The hashed area highlights the region at low values of the full depletion voltage where the analysis loses sensitivity [32]. |

png pdf |

Figure 13:

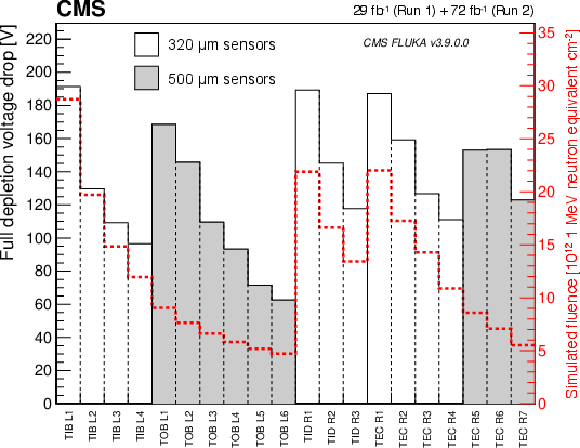

Decrease of the full depletion voltage for each layer, computed as the difference between the values measured at the time of the tracker construction and the values obtained by the analysis of a bias-voltage scan performed in September 2017 on all the tracker modules. The white (gray) histograms represent modules with 320 (500) $\mu$m thick sensors. The average fluence for each layer is shown by the red line. |

png pdf |

Figure 14:

Relative response to laser light injected into the ECAL crystals, measured by the laser monitoring system, averaged over all crystals in bins of $ |\eta| $. The response change observed in the ECAL channels is up to 13% in the barrel, $ |\eta| < $ 1.5, and reaches up to 62% at $ |\eta|\approx $ 2.5, the limit of the CMS inner tracker acceptance. The response change is up to 96% in the region closest to the beam pipe. The recovery of the crystal response during the periods without collisions is visible. These measurements, performed every 40 minutes, are used to correct the physics data. The lower panel shows the LHC instantaneous luminosity as a function of time. |

png pdf |

Figure 15:

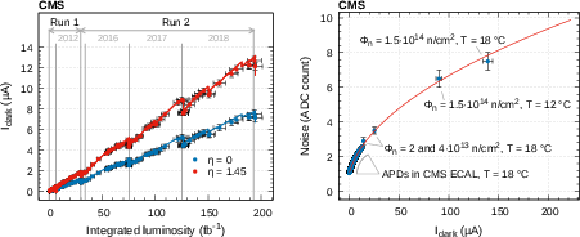

Left: evolution of the APD dark current as a function of the integrated luminosity since the beginning of Run 1. The gray vertical lines represent the ends of the Run 1 and Run 2 data-taking periods. Spontaneous annealing of the radiation-induced defects is visible as vertical steps in the measurements and corresponds to long stops in the data taking, e.g., year-end technical stops. Right: measurement of the electronic noise in the most sensitive amplification range as a function of the measured leakage current of an APD pair. The measurements are explained in the text. The pink line is a fit to the data with a square root function. The maximum expected fluence for Run 3 is 4 $ \times $ 10$ ^{13}$ $\mathrm{n}_{\mathrm{eq} }$/cm$^2 $ at $ |\eta|= $ 1.45. |

png pdf |

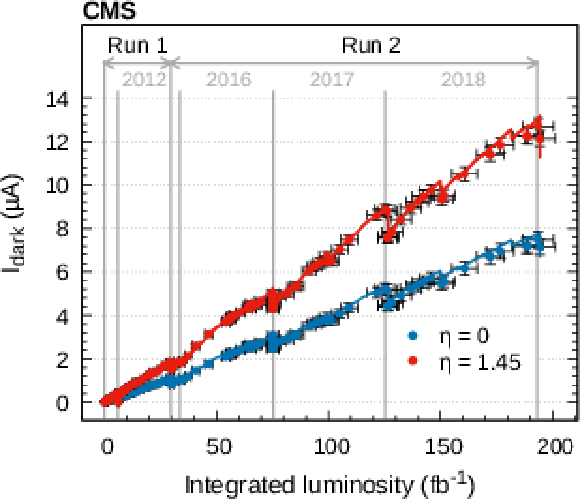

Figure 15-a:

Evolution of the APD dark current as a function of the integrated luminosity since the beginning of Run 1. The gray vertical lines represent the ends of the Run 1 and Run 2 data-taking periods. Spontaneous annealing of the radiation-induced defects is visible as vertical steps in the measurements and corresponds to long stops in the data taking, e.g., year-end technical stops. |

png pdf |

Figure 15-b:

Measurement of the electronic noise in the most sensitive amplification range as a function of the measured leakage current of an APD pair. The measurements are explained in the text. The pink line is a fit to the data with a square root function. The maximum expected fluence for Run 3 is 4 $ \times $ 10$ ^{13}$ $\mathrm{n}_{\mathrm{eq} }$/cm$^2 $ at $ |\eta|= $ 1.45. |
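A square-root dependence of this kind can be fitted in closed form. The sketch below assumes the simplest possible form, noise = a * sqrt(I), which may differ from the function actually used for the pink line; the function name and the data points are hypothetical and serve only as an illustration.

```python
import math

def fit_sqrt_scale(currents, noises):
    """Least-squares estimate of a in the assumed model noise = a * sqrt(current).

    Minimizing sum((y - a*sqrt(x))**2) over a gives
    a = sum(y*sqrt(x)) / sum(x).
    """
    num = sum(y * math.sqrt(x) for x, y in zip(currents, noises))
    den = sum(currents)
    if den <= 0:
        raise ValueError("need at least one positive current")
    return num / den

# Hypothetical (leakage current, noise) points in arbitrary units
currents = [1.0, 4.0, 9.0, 16.0]
noises = [2.0, 4.0, 6.0, 8.0]
a = fit_sqrt_scale(currents, noises)
print(f"fitted scale a = {a:.3f}")
```

A model with an additional constant noise term added in quadrature would need a two-parameter fit instead.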

png pdf |

Figure 16:

Average noise per channel as extracted from data using variations (RMS) of the signal baseline at the end of each year of Run 2 data taking. The amplitude RMS is converted into an energy equivalent (left) and a transverse-energy equivalent (right). The integrated luminosity accumulated since the start of Run 1 is indicated. The spread of the equivalent noise at a given pseudorapidity is indicated by the light-colored bands. |

png pdf |

Figure 16-a:

Average noise per channel as extracted from data using variations (RMS) of the signal baseline at the end of each year of Run 2 data taking. The amplitude RMS is converted into an energy equivalent. The integrated luminosity accumulated since the start of Run 1 is indicated. The spread of the equivalent noise at a given pseudorapidity is indicated by the light-colored bands. |

png pdf |

Figure 16-b:

Average noise per channel as extracted from data using variations (RMS) of the signal baseline at the end of each year of Run 2 data taking. The amplitude RMS is converted into a transverse-energy equivalent. The integrated luminosity accumulated since the start of Run 1 is indicated. The spread of the equivalent noise at a given pseudorapidity is indicated by the light-colored bands. |

png pdf |

Figure 17:

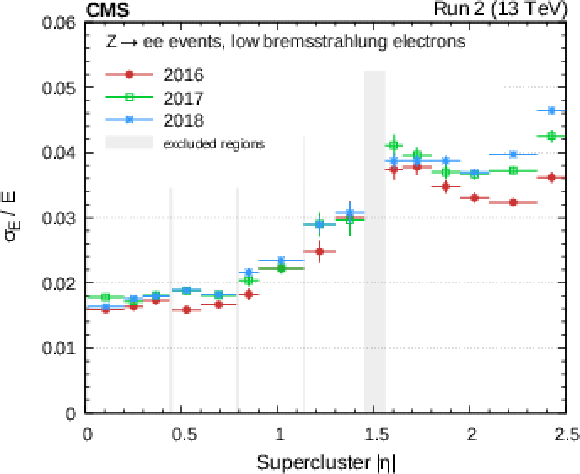

ECAL resolution for electrons having low bremsstrahlung emissions (left) and for an inclusive selection of electrons (right). The horizontal bars show the bin width. |

png pdf |

Figure 17-a:

ECAL resolution for electrons having low bremsstrahlung emissions. The horizontal bars show the bin width. |

png pdf |

Figure 17-b:

ECAL resolution for an inclusive selection of electrons. The horizontal bars show the bin width. |

png pdf |

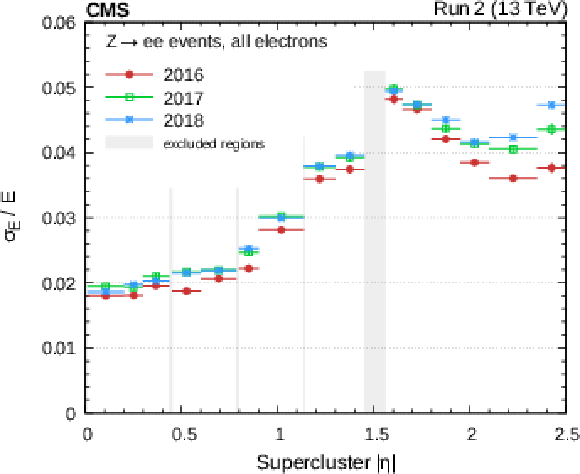

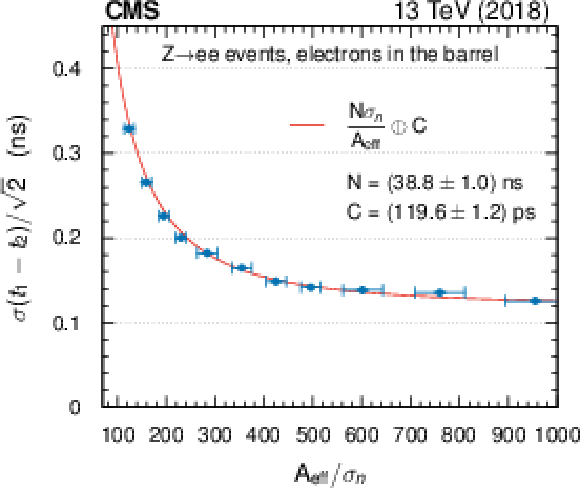

Figure 18:

ECAL timing resolution as measured from $ \mathrm{Z}\to\mathrm{e}\mathrm{e} $ events by comparing the arrival time of the two electrons. The performance has constantly improved over the years due to the frequency at which the synchronization constants have been updated, and is shown for 2018, the last year of Run 2. Updates in the constants are necessary to compensate for pulse shape changes induced by radiation. Vertical bars on the points showing the statistical uncertainties are too small to be seen in the plot. The red line corresponds to a fit to the points with the parametrization of the resolution shown in the legend. |

png pdf |

Figure 19:

Schematic view of the HCAL as of 2016, showing the positions of its four major components: HB, HE, HO, and HF. The layers marked in blue are grouped together as "depth 1," i.e., the signals from these layers of a given tower are optically summed and read out by a single photodetector. Similarly, the layers shown in yellow and green are combined as depths 2 and 3, respectively, and the layers shown in purple are combined for HO. The notation "FEE" denotes the locations of the HB and HE frontend electronics readout boxes. The solid black lines, roughly projective with the interaction point, denote the $ \eta $ divisions in the tower $ \eta $-$ \phi $ segmentation, and the numbers at the edge of the tower denote the ieta index. |

png pdf |

Figure 20:

Left: brass absorber for the hadron barrel calorimeter HB. Right: scintillating tiles with wavelength shifting fibers used as the active media in the barrel, endcap, and outer hadron calorimeters. |

png |

Figure 20-a:

Brass absorber for the hadron barrel calorimeter HB. |

png |

Figure 20-b:

Scintillating tiles with wavelength shifting fibers used as the active media in the barrel, endcap, and outer hadron calorimeters. |

png pdf |

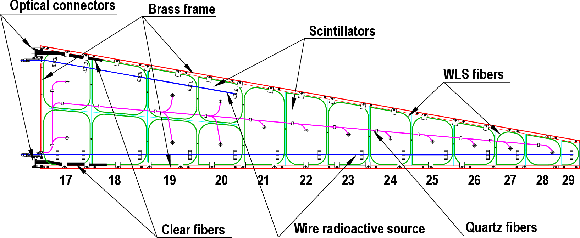

Figure 21:

Physical arrangement of the scintillator and wavelength-shifting fibers into tiles for an HE megatile. |

png pdf |

Figure 22:

Schematic view of the CMS hadron forward calorimeter, HF. The yellow region represents the steel absorber with embedded quartz fibers; the grey shaded area to the left represents fibers which deliver the signals to light guides that penetrate the steel plug shielding; the white rectangle to the left of the light guides represents the frontend readout boxes, which house the photomultiplier tubes. The dimensions shown in the diagram are in millimeters. |

png pdf |

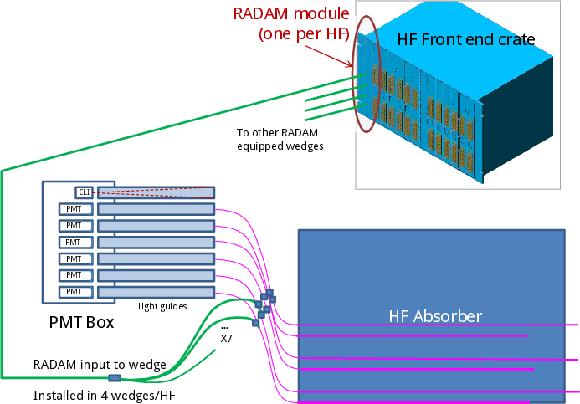

Figure 23:

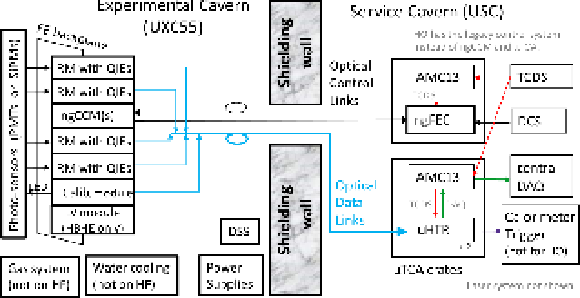

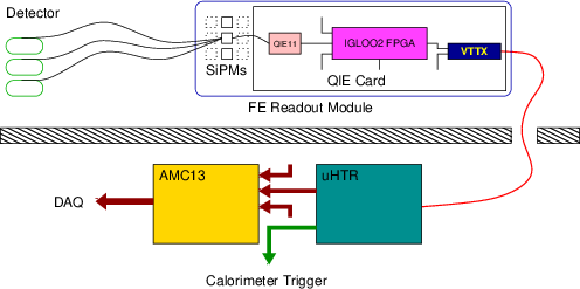

Schematic diagram of the HCAL readout. The frontend electronics chain begins with analog signals from the HPDs, SiPMs, or PMTs. The HB and HE used HPDs prior to the Phase 1 upgrade and SiPMs after, while the HF uses PMTs. These signals are digitized by the QIE chips in the readout modules (RMs). The "next-generation clock and control module" (ngCCM) is part of the frontend control system. The digitized data are sent to the backend $\mu$HTRs ($ \mu $TCA HCAL trigger and readout cards). Data from multiple $\mu$HTRs are concentrated into the AMC13 cards and forwarded to the CMS central data acquisition (DAQ) system. The $ \mu $TCA cards also send data to the trigger system. The AMC13 cards also distribute the fast commands arriving from the CMS timing and control distribution system (TCDS) within each $ \mu $TCA crate. The detector control system (DCS) software communicates with the "next-generation frontend controller" (ngFEC). |

png pdf |

Figure 24:

The longitudinal and transverse HCAL segmentation for Run 3. Within a tower, layers with the same color are routed to the same SiPM. The location of the frontend electronics is indicated by the letters FEE. |

png pdf |

Figure 25:

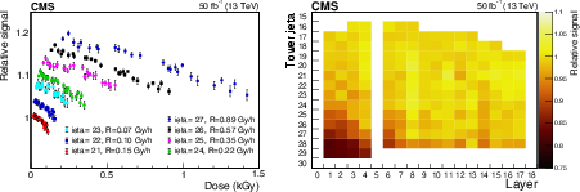

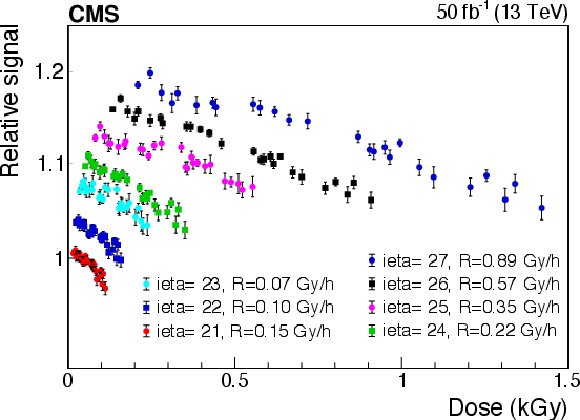

Left: relative laser light signal versus the accumulated dose for scintillator tiles in layer 1 and ieta range 21-27. The average dose rate $ R $ for each set of points is given in the legend. The vertical scale is logarithmic and subsequent sets are shifted up by a factor of 1.03 relative to the previous set for better visibility. Each set starts at a dose corresponding to an integrated luminosity of 7 fb$ ^{-1} $. The vertical bars give the scaled statistical uncertainties. Right: ratio of the signals from the $ ^{60}$Co source observed before and after the 2017 data-taking period for scintillator tiles in the HE as a function of ieta and layer number. Tubes in layers 0 and 5 have obstructions and cannot be accessed. |

png pdf |

Figure 25-a:

Relative laser light signal versus the accumulated dose for scintillator tiles in layer 1 and ieta range 21-27. The average dose rate $ R $ for each set of points is given in the legend. The vertical scale is logarithmic and subsequent sets are shifted up by a factor of 1.03 relative to the previous set for better visibility. Each set starts at a dose corresponding to an integrated luminosity of 7 fb$ ^{-1} $. The vertical bars give the scaled statistical uncertainties. |
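On a logarithmic axis, multiplying a data set by a constant factor moves it up by a constant distance, which is why a factor of 1.03 per set separates the curves without distorting their shapes. The sketch below illustrates the shift and its inverse; the function names and sample values are hypothetical.

```python
def shift_sets(sets, factor=1.03):
    """Scale the k-th set (k = 0, 1, ...) by factor**k so the curves do not overlap."""
    return [[y * factor**k for y in values] for k, values in enumerate(sets)]

def unshift_value(y_plotted, k, factor=1.03):
    """Recover the true relative signal from a value read off the k-th shifted set."""
    return y_plotted / factor**k

# Three hypothetical sets with identical relative signals
sets = [[1.0, 0.9], [1.0, 0.9], [1.0, 0.9]]
shifted = shift_sets(sets)
for k, values in enumerate(shifted):
    print(k, [round(v, 4) for v in values])
```

Reading a value from the plot therefore requires dividing by 1.03 raised to the index of the set, counting the first set as index 0.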

png pdf |

Figure 25-b:

Ratio of the signals from the $ ^{60}$Co source observed before and after the 2017 data-taking period for scintillator tiles in the HE as a function of ieta and layer number. Tubes in layers 0 and 5 have obstructions and cannot be accessed. |

png pdf |

Figure 26:

Left: top and side views of an eight-channel SiPM array in its ceramic package. Right: ceramic packages at the CERN Metrology Laboratory for characterization measurements. |

png |

Figure 26-a:

Top and side views of an eight-channel SiPM array in its ceramic package. |

png pdf |

Figure 27:

Distribution of the signal response (photon detection efficiency times gain) for 3600 HE SiPMs. |

png pdf |

Figure 28:

Upgraded HCAL endcap (HE) and barrel (HB) DAQ readout chain, including the SiPMs, frontend readout electronics, and backend system. |

png pdf |

Figure 29:

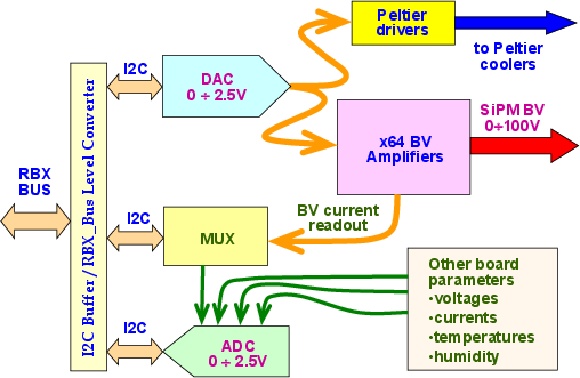

Left: control board block diagram. Right: HB card pack with one control and four frontend boards. The flex cables provide signal and bias voltage connections to 64 SiPMs. |

png pdf |

Figure 29-a:

Control board block diagram. |

png pdf |

Figure 30:

Left: view of a spare HE optical decoder unit (ODU), showing its light box and fiber mapping. The fibers route from the patch panel at the top to the "cookie" at the left. The side panels are clear rather than opaque for display purposes. Right: a production HE ODU. The clear fiber pigtail connectors attached to the patch panel are visible at the top. The plastic "cookie" is seen at the front of the ODU. |

png pdf |

Figure 31:

Block diagram of the HB controls. The ngFEC and ngCCM modules are needed to run and monitor the frontend electronics. All control links connecting the service rooms to the experimental cavern are optical. A secondary link connecting the ngFEC and the ngCCM is available in case of a primary link failure. |

png pdf |

Figure 32:

Cherenkov signals generated in the PMT windows from a muon test beam. Thin windows in the Phase 1 upgrade four-anode PMTs produce smaller signals (red) than those produced in the original PMTs with thick windows (black). |

png pdf |

Figure 33:

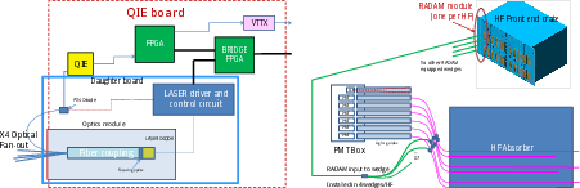

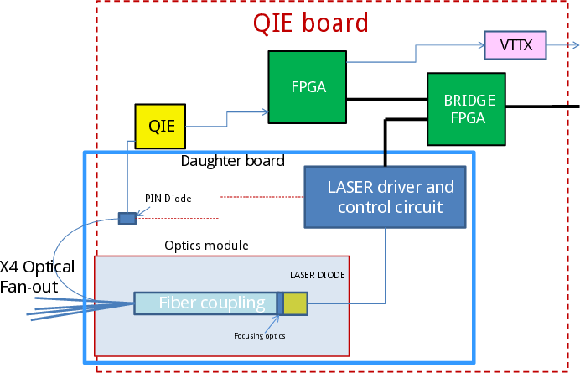

Left: schematic of the new HF QIE laser board, used in the HF radiation monitoring system. Right: sketch of the upgraded HF radiation damage monitoring system light distribution and electronics chain. |

png pdf |

Figure 33-a:

Schematic of the new HF QIE laser board, used in the HF radiation monitoring system. |

png pdf |

Figure 33-b:

Sketch of the upgraded HF radiation damage monitoring system light distribution and electronics chain. |

png pdf |

Figure 34:

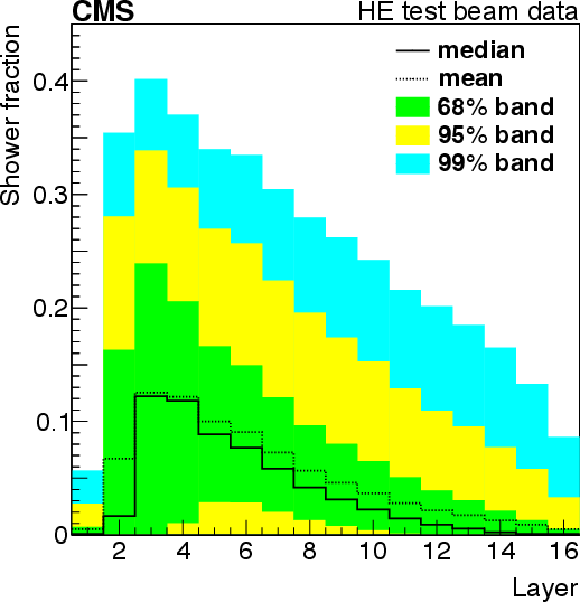

Left: energy resolution of the upgraded prototype detector as a function of the pion beam energy, shown with and without the channel calibrations derived from the response to muons. Right: longitudinal shower profile measured using the special ODU. Bands containing 68, 95, and 99% of all events for each layer are shown. |

png pdf |

Figure 34-a:

Energy resolution of the upgraded prototype detector as a function of the pion beam energy, shown with and without the channel calibrations derived from the response to muons. |

png pdf |

Figure 34-b:

Longitudinal shower profile measured using the special ODU. Bands containing 68, 95, and 99% of all events for each layer are shown. |

png pdf |

Figure 35:

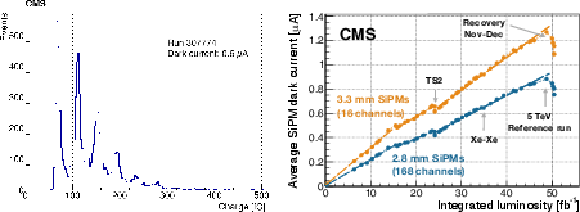

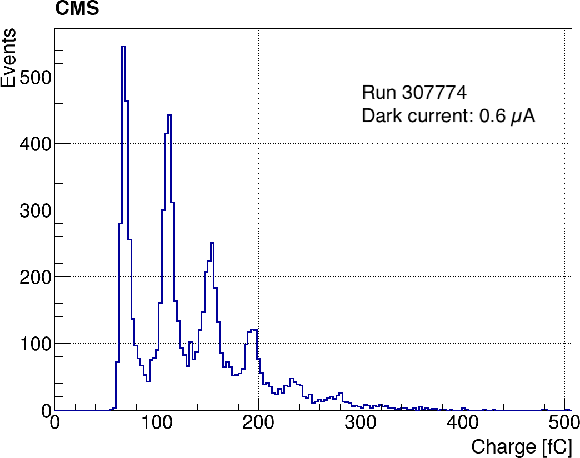

Left: pedestal distribution for a channel with QIE11 + SiPM readout. The charge is integrated in a time window of 100 ns. The QIE pedestal and photoelectron peaks are visible. Right: dark current increase with the integrated luminosity in 2017, where the slope of the fitted line is proportional to the SiPM area. The deviation from linear behavior is due to SiPM annealing in the absence of beam and variation in the instantaneous luminosity. |

png |

Figure 35-a:

Pedestal distribution for a channel with QIE11 + SiPM readout. The charge is integrated in a time window of 100 ns. The QIE pedestal and photoelectron peaks are visible. |

png pdf |

Figure 35-b:

Dark current increase with the integrated luminosity in 2017, where the slope of the fitted line is proportional to the SiPM area. The deviation from linear behavior is due to SiPM annealing in the absence of beam and variation in the instantaneous luminosity. |

png pdf |

Figure 36:

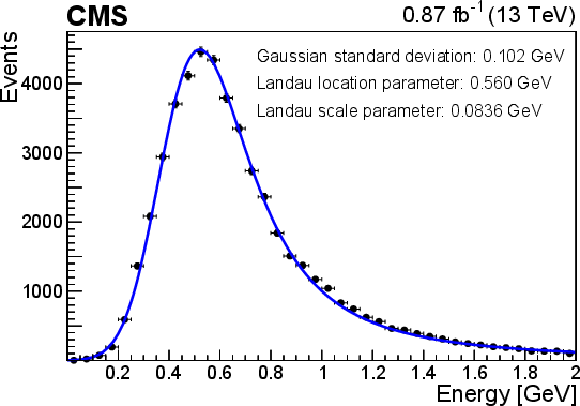

Left: energy deposit from muons in pp collision events in the HE tower corresponding to $ \text{ieta}= $ 20 and $ \text{depth}= $ 5. The energy spectrum is fitted using the convolution of a Gaussian function with a mean of zero and a Landau distribution. The fitting function has three free parameters: the Landau location parameter, the Landau scale parameter, and the width of the Gaussian. Right: the most probable value of the muon energy deposit per layer in HE towers as a function of depth for different $ \eta $ regions. The vertical bars represent the statistical uncertainty. Muons from collision events are considered when their trajectory is contained within a single HCAL tower. The muon signal peak is fitted with the convolution of a Gaussian and a Landau function. The Landau location parameter is divided by the number of scintillator layers in the considered depth. |

png pdf |

Figure 36-a:

Energy deposit from muons in pp collision events in the HE tower corresponding to $ \text{ieta}= $ 20 and $ \text{depth}= $ 5. The energy spectrum is fitted using the convolution of a Gaussian function with a mean of zero and a Landau distribution. The fitting function has three free parameters: the Landau location parameter, the Landau scale parameter, and the width of the Gaussian. |
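The three-parameter shape described in this caption can be sketched numerically. The example below is not the fit code used for the figure: it replaces the Landau distribution with the Moyal distribution, a common closed-form approximation, and evaluates the convolution with a zero-mean Gaussian by a simple midpoint rule. All function names and default settings are hypothetical.

```python
import math

SQRT_2PI = math.sqrt(2.0 * math.pi)

def moyal_pdf(x, loc, scale):
    """Moyal density, used here as a stand-in for the Landau distribution."""
    z = (x - loc) / scale
    if z < -30.0:  # density is numerically zero here; avoids overflow in exp(-z)
        return 0.0
    return math.exp(-0.5 * (z + math.exp(-z))) / (scale * SQRT_2PI)

def gauss_pdf(t, sigma):
    """Gaussian density with mean zero and width sigma."""
    return math.exp(-0.5 * (t / sigma) ** 2) / (sigma * SQRT_2PI)

def landau_gauss(x, loc, scale, sigma, n_steps=200, n_sigma=5.0):
    """Convolution of the Moyal shape with a zero-mean Gaussian (midpoint rule).

    Free parameters: location (loc), scale, and Gaussian width (sigma).
    The integral over the smearing variable t is truncated at +-n_sigma*sigma.
    """
    lo = -n_sigma * sigma
    dt = 2.0 * n_sigma * sigma / n_steps
    total = 0.0
    for i in range(n_steps):
        t = lo + (i + 0.5) * dt
        total += moyal_pdf(x - t, loc, scale) * gauss_pdf(t, sigma)
    return total * dt

# Evaluate the smeared and unsmeared shapes at the location parameter
print(landau_gauss(0.0, 0.0, 1.0, 1.0), moyal_pdf(0.0, 0.0, 1.0))
```

The Gaussian smearing broadens the peak and lowers its height while keeping the total area equal to one, so a fit with such a function can separate the intrinsic energy-loss shape from resolution effects.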

png pdf |

Figure 36-b:

The most probable value of the muon energy deposit per layer in HE towers as a function of depth for different $ \eta $ regions. The vertical bars represent the statistical uncertainty. Muons from collision events are considered when their trajectory is contained within a single HCAL tower. The muon signal peak is fitted with the convolution of a Gaussian and a Landau function. The Landau location parameter is divided by the number of scintillator layers in the considered depth. |

png pdf |

Figure 37:

Left: HF signal arrival time, as measured in the TDC, versus the collected signal charge. All signals arriving within less than 5 ns are "window events". The color indicates the number of events using the scale to the right of each plot. The data were taken in early 2017. Right: charge asymmetry between the two channels of a PMT versus the total charge in both channels. The light from genuine collision events is well mixed in the light guides before falling on the PMT, hence similar signals are expected in all four anodes, which are grouped into two channels. The so-called "window events" due to Cherenkov radiation in the PMT window most likely fall on one or two anodes, producing asymmetric signals. |

png pdf |

Figure 37-a:

HF signal arrival time, as measured in the TDC, versus the collected signal charge. All signals arriving within less than 5 ns are "window events". The color indicates the number of events using the scale to the right of each plot. The data were taken in early 2017. |

png pdf |

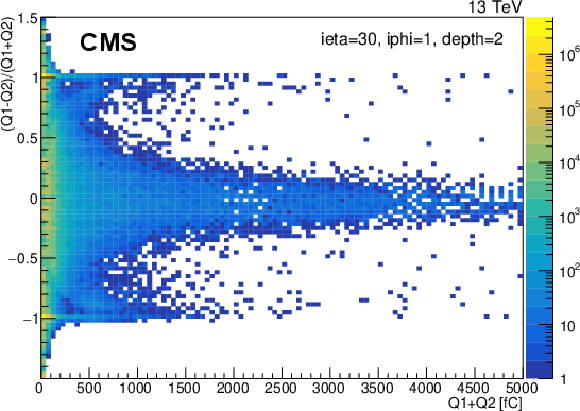

Figure 37-b:

Charge asymmetry between the two channels of a PMT versus the total charge in both channels. The light from genuine collision events is well mixed in the light guides before falling on the PMT, hence similar signals are expected in all four anodes, which are grouped into two channels. The so-called "window events" due to Cherenkov radiation in the PMT window most likely fall on one or two anodes, producing asymmetric signals. |
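A charge asymmetry of this kind is commonly defined as the difference of the two channel charges divided by their sum. The sketch below assumes that definition, which the caption does not spell out, and uses a purely hypothetical threshold to flag asymmetric signals; it is not the selection applied in the analysis.

```python
def charge_asymmetry(q1, q2):
    """Assumed definition: (q1 - q2) / (q1 + q2) for the two PMT channels."""
    total = q1 + q2
    if total <= 0:
        raise ValueError("total charge must be positive")
    return (q1 - q2) / total

def is_window_like(q1, q2, max_abs_asymmetry=0.5):
    """Flag a signal as asymmetric; the 0.5 threshold is illustrative only."""
    return abs(charge_asymmetry(q1, q2)) > max_abs_asymmetry

# Well-mixed light gives similar charges; a hit in the PMT window does not
print(charge_asymmetry(52.0, 48.0), is_window_like(52.0, 48.0))
print(charge_asymmetry(90.0, 10.0), is_window_like(90.0, 10.0))
```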

png pdf |

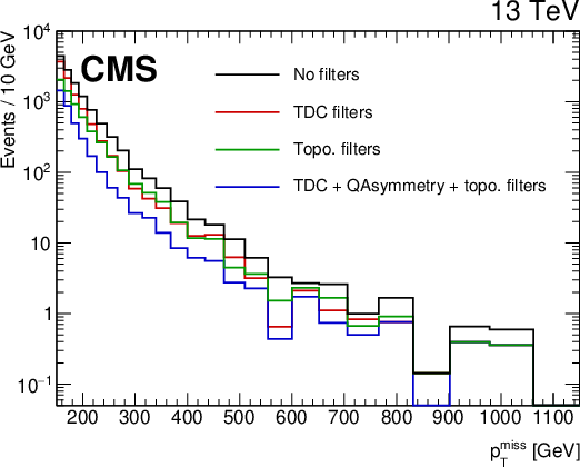

Figure 38:

Effect of filters on the HF anomalous energy contributions to the missing transverse momentum measurement. The methods developed based on hardware improvements installed as part of the Phase 1 upgrade are as effective as the topological selections used previously. Including both the new and old filters further reduces the anomalous missing transverse momentum. |

png pdf |

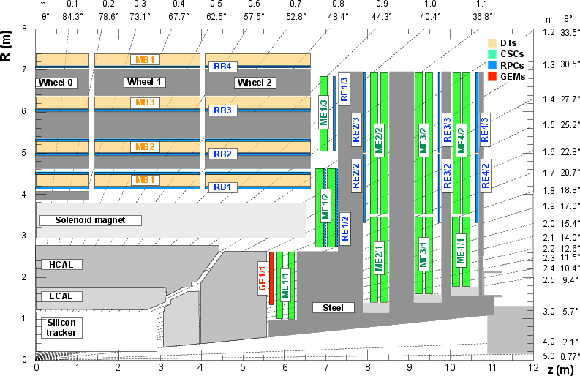

Figure 39:

Schematic view in the $ r $-$ z $ plane of a CMS detector quadrant at the start of Run 3. The interaction point is in the lower left corner. The locations of the various muon stations are shown in color: drift tubes (DTs) with labels MB, cathode strip chambers (CSCs) with labels ME, resistive plate chambers (RPCs) with labels RB and RE, and gas electron multipliers (GEMs) with labels GE. The M denotes muon, B stands for barrel, and E for endcap. The magnet yoke is represented by the dark gray areas. |

png pdf |

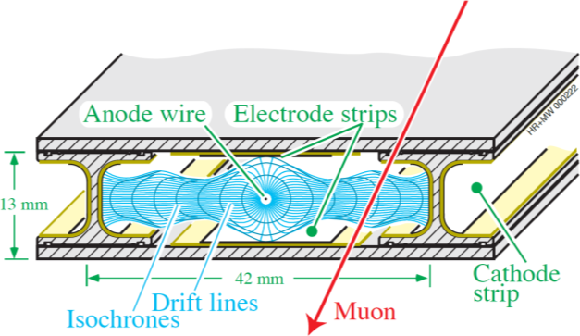

Figure 40:

Left: layout of a CMS DT cell showing the drift lines and isochrones. Right: schematic view of a DT chamber. |

png |

Figure 40-a:

Layout of a CMS DT cell showing the drift lines and isochrones. |

png |

Figure 40-b:

Schematic view of a DT chamber. |

png pdf |

Figure 41:

Schematic views of the Run 1 and Run 3 DT off-detector electronics architectures. |

png pdf |

Figure 42:

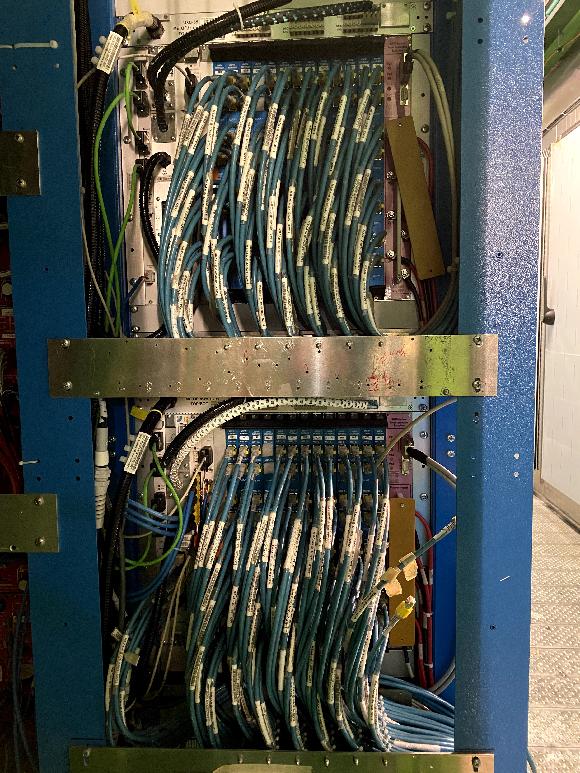

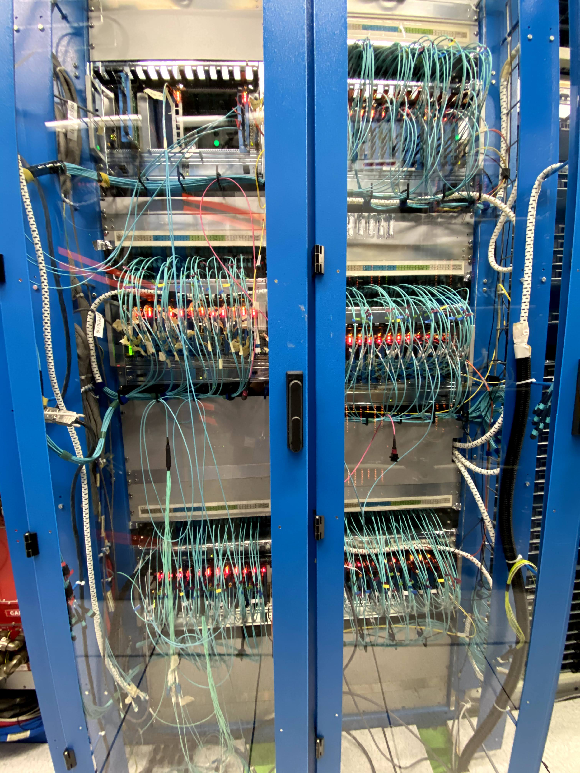

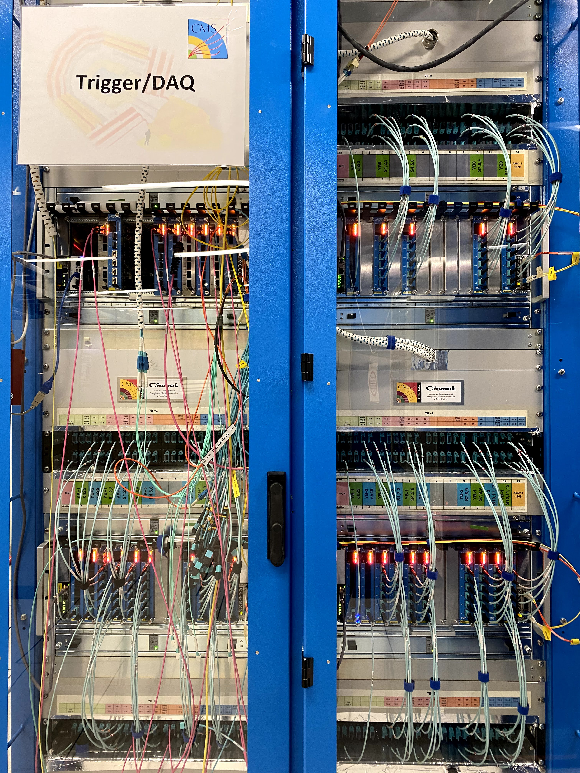

Left: front view of two out of the ten DT CUOF crates located in the UXC balconies surrounding the muon barrel (W-1). Center: front view of the five $ \mu $TCA crates of the TwinMux in the USC. Right: front view of the three $ \mu $TCA crates of the $ \mu $ROS in the USC. |

png |

Figure 42-a:

Front view of two out of the ten DT CUOF crates located in the UXC balconies surrounding the muon barrel (W-1). |

png |

Figure 42-b:

Front view of the five $ \mu $TCA crates of the TwinMux in the USC. |

png |

Figure 42-c:

Front view of the three $ \mu $TCA crates of the $ \mu $ROS in the USC. |

png pdf |

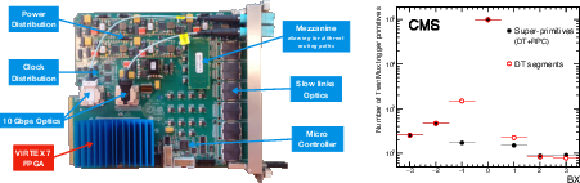

Figure 43:

Left: picture of a TM7 board with the main modules highlighted. Right: BX distribution of L1 trigger primitives reconstructed in the muon barrel [91]. Open (red) circles show the performance of trigger primitives reconstructed using information from the DT detector only. Filled (black) circles show the same performance figure for super-primitives built combining information from the DT and RPC detectors. |

png pdf |

Figure 43-a:

Picture of a TM7 board with the main modules highlighted. |

png pdf |

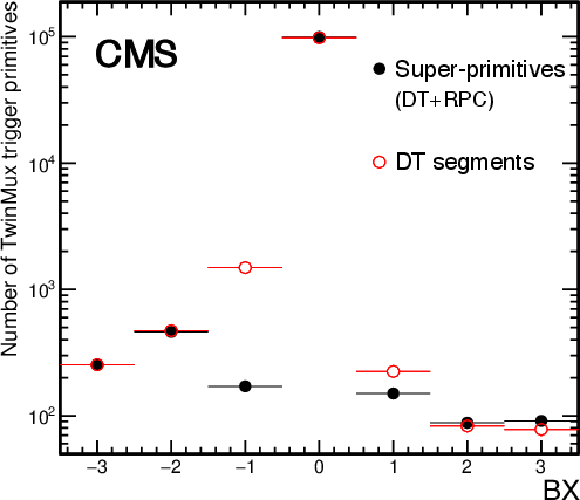

Figure 43-b:

BX distribution of L1 trigger primitives reconstructed in the muon barrel [91]. Open (red) circles show the performance of trigger primitives reconstructed using information from the DT detector only. Filled (black) circles show the same performance figure for super-primitives built combining information from the DT and RPC detectors. |

png pdf |

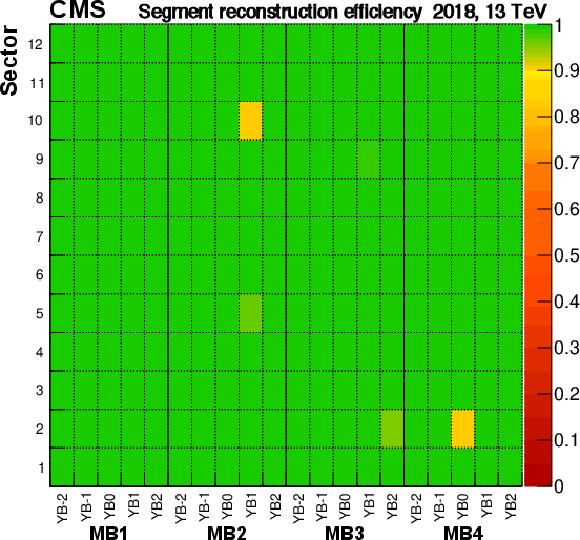

Figure 44:

DT segment reconstruction efficiency measured with the tag-and-probe method using data collected by CMS in 2017 (left) [93] and 2018 (right) [94], before and after the transition to the $ \mu $ROS. The efficiency is usually above 99% except for chambers affected by hardware problems, mostly coming from the DT readout. After the deployment of the $ \mu $ROS, the overall efficiency improves. |
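In a tag-and-probe measurement, the efficiency is the fraction of probe muons that pass the criterion under study, here the presence of a reconstructed DT segment. The sketch below is a plain counting estimate with a binomial uncertainty; it ignores background subtraction and any other corrections a full analysis may apply, and the function name and counts are hypothetical.

```python
import math

def tag_and_probe_efficiency(n_pass, n_total):
    """Return (efficiency, binomial uncertainty) for n_pass passing probes out of n_total."""
    if n_total <= 0 or not 0 <= n_pass <= n_total:
        raise ValueError("require 0 <= n_pass <= n_total and n_total > 0")
    eff = n_pass / n_total
    err = math.sqrt(eff * (1.0 - eff) / n_total)
    return eff, err

# Hypothetical counts: 9900 of 10000 probes have a matched segment
eff, err = tag_and_probe_efficiency(9900, 10000)
print(f"efficiency = {eff:.4f} +- {err:.4f}")
```

The simple binomial formula gives a zero uncertainty when the efficiency is exactly 0 or 1, so an interval estimate is a better choice for bins close to full efficiency.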

png pdf |

Figure 44-a:

DT segment reconstruction efficiency measured with the tag-and-probe method using data collected by CMS in 2017 [93], before and after the transition to the $ \mu $ROS. The efficiency is usually above 99% except for chambers affected by hardware problems, mostly coming from the DT readout. After the deployment of the $ \mu $ROS, the overall efficiency improves. |

png pdf |

Figure 44-b:

DT segment reconstruction efficiency measured with the tag-and-probe method using data collected by CMS in 2018 [94], before and after the transition to the $ \mu $ROS. The efficiency is usually above 99% except for chambers affected by hardware problems, mostly coming from the DT readout. After the deployment of the $ \mu $ROS, the overall efficiency improves. |

png pdf |

Figure 45:

DT hit detection efficiency, computed as a function of the total CMS integrated luminosity, for the $ \phi $-SLs of MB1 chambers [94]. Different colors refer to different DT wheels. The plot summarizes how the efficiency evolved during Run 2, mostly as a consequence of the different updates of the detector HV and FE threshold settings. |

png pdf |

Figure 46:

Drift velocity measurement using the fresh gas analyzed by the VDC system. The variation on 8th March 2018 corresponds to the transition between closed-loop and open-loop operation of the DT gas system. |

png pdf |

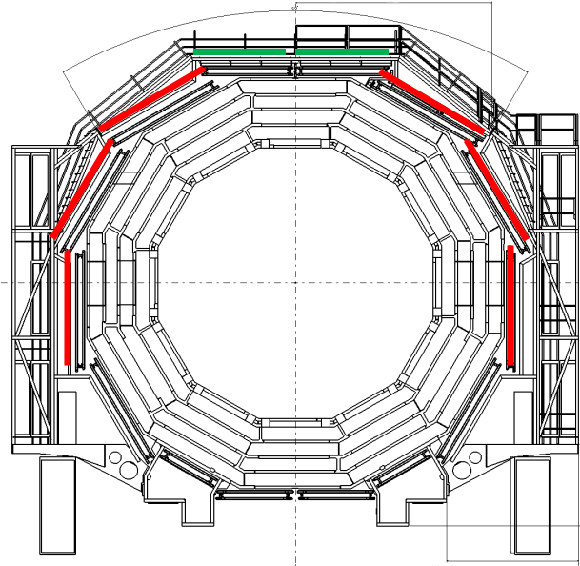

Figure 47:

Left: magnitudes of the linear dependence between the currents from each DT chamber of the MB and the LHC instantaneous luminosity [98]. Results are computed after the optimization of the operational working points, described in Section 6.1.3.1. Right: transverse view of an MB wheel highlighting the layout of the MB4 shield, as installed in W$-$2, $-$1, $+$1, and $+$2. Red (green) lines represent shield layers consisting of thin (thick) cassettes. |

png |

Figure 47-a:

Magnitudes of the linear dependence between the currents from each DT chamber of the MB and the LHC instantaneous luminosity [98]. Results are computed after the optimization of the operational working points, described in Section 6.1.3.1. |

png |

Figure 47-b:

Transverse view of an MB wheel highlighting the layout of the MB4 shield, as installed in W$-$2, $-$1, $+$1, and $+$2. Red (green) lines represent shield layers consisting of thin (thick) cassettes. |

png pdf |

Figure 48:

Left: layout of a CSC chamber, with seven trapezoidal panels forming six gas gaps. Only a few wires (lines running from left to right) and strips (gray band running from top to bottom) on the upper right corner are shown for illustration. Right: installation of the outer CSC chambers (ME4/2) during LS1. |

png |

Figure 48-a:

Layout of a CSC chamber, with seven trapezoidal panels forming six gas gaps. Only a few wires (lines running from left to right) and strips (gray band running from top to bottom) on the upper right corner are shown for illustration. |

png pdf |

Figure 49:

Emulation of the Run 1 algorithms compared to the upgraded Run 2 algorithms, as a function of the L1 muon $ \eta $. The most common L1 single-muon trigger threshold used in 2017 was $ p_{\mathrm{T}}^{\mu\text{,L1}}\geq $ 25 GeV. |

png pdf |

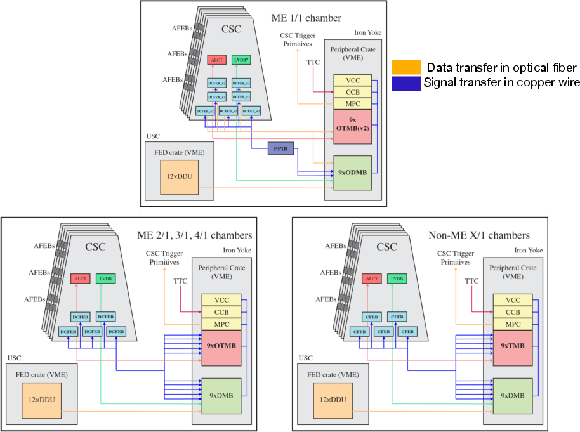

Figure 50:

Schematic of the CSC electronics readout systems for Run 3: ME1/1 (upper), ME234/1 (lower left), all other chambers, ME1234/2 and ME1/3 (lower right). |

png pdf |

Figure 51:

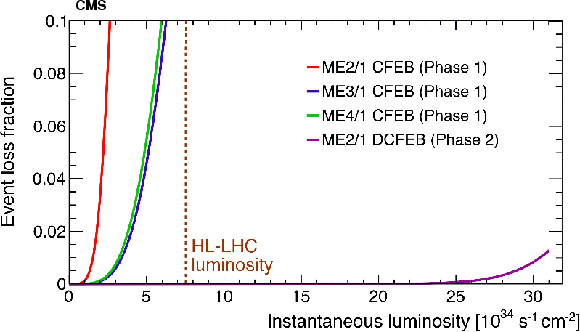

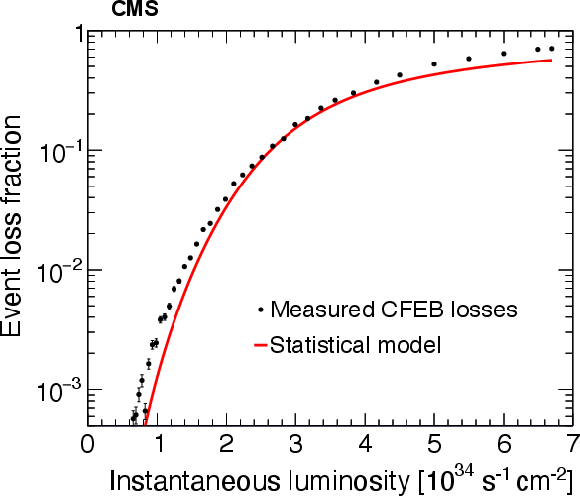

Left: event loss fraction as a function of instantaneous luminosity for different chamber types after different upgrades. The vertical dashed brown line indicates the design HL-LHC luminosity. Right: event loss rate measured in a CFEB under HL-LHC conditions for an ME2/1 chamber, compared to the statistical model [96]. |

png pdf |

Figure 51-a:

Event loss fraction as a function of instantaneous luminosity for different chamber types after different upgrades. The vertical dashed brown line indicates the design HL-LHC luminosity. |

png pdf |

Figure 51-b:

Event loss rate measured in a CFEB under HL-LHC conditions for an ME2/1 chamber, compared to the statistical model [96]. |

png pdf |

Figure 52:

Difference between the position of a reconstructed hit in layer 3 of an ME1/1a chamber and the position obtained by fitting a segment with hits from the other five layers for Run 1 (red) and Run 2 (blue). The spatial resolution is improved by 27% from $ \sigma= $ 64 $\mu$m in Run 1 to 46 $\mu$m in Run 2. This is due to the removal of the triple-grouping of strips in ME1/1a, which reduces the capacitance and hence the frontend noise. |

png pdf |

Figure 53:

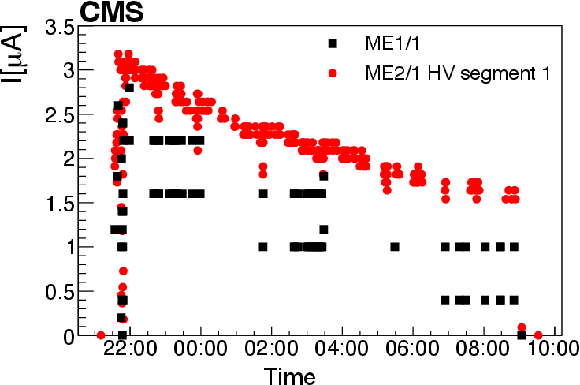

Left: instantaneous luminosity versus time for one of the LHC fills in 2016. Right: current (in nA) in an ME2/1 chamber (the HV segment closest to the beam) for the same fill, as measured with the custom-made HV subsystem used for non-ME1/1 chambers; current (in $ \mu $A) in an ME1/1 chamber (one plane) for the same fill, as measured with the commercial HV subsystem. |

png pdf |

Figure 53-a:

Instantaneous luminosity versus time for one of the LHC fills in 2016. |

png pdf |

Figure 53-b:

Current (in nA) in an ME2/1 chamber (the HV segment closest to the beam) for the same fill, as measured with the custom-made HV subsystem used for non-ME1/1 chambers; current (in $ \mu $A) in an ME1/1 chamber (one plane) for the same fill, as measured with the commercial HV subsystem. |

png pdf |

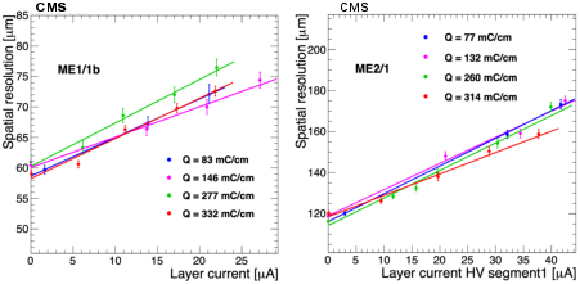

Figure 54:

Spatial resolutions for an ME1/1b (left) and ME2/1 (right) chamber using a muon beam while being uniformly illuminated by a $ ^{137}$Cs photon source to simulate the background from high luminosity pp collisions, as a function of the average current per layer in one HV segment. The results for four different accumulated charges per unit wire length are shown, along with linear fits to each set. |

png pdf |

Figure 54-a:

Spatial resolution for an ME1/1b chamber using a muon beam while being uniformly illuminated by a $ ^{137}$Cs photon source to simulate the background from high luminosity pp collisions, as a function of the average current per layer in one HV segment. The results for four different accumulated charges per unit wire length are shown, along with linear fits to each set. |

png pdf |

Figure 54-b:

Spatial resolution for an ME2/1 chamber using a muon beam while being uniformly illuminated by a $ ^{137}$Cs photon source to simulate the background from high luminosity pp collisions, as a function of the average current per layer in one HV segment. The results for four different accumulated charges per unit wire length are shown, along with linear fits to each set. |

png pdf |

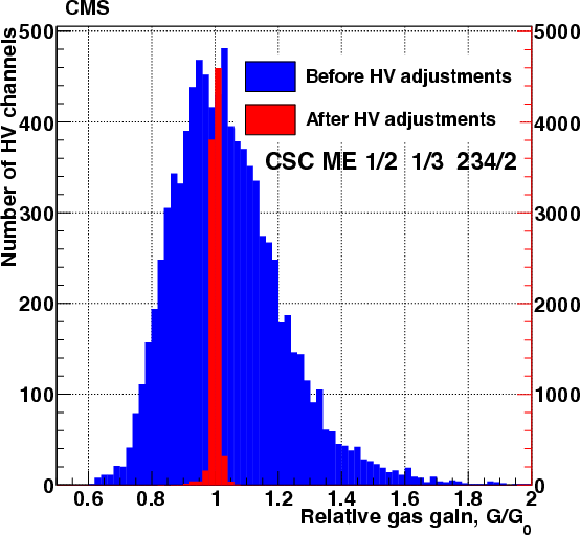

Figure 55:

Relative gas gain distribution in CSCs before and after the gas gain equalization campaign in 2016 [96]. Each entry in the histogram represents the mean value of the gas gain in one HV channel. The scale of the blue histogram is on the left while the scale of the red histogram is on the right. |

png pdf |

Figure 56:

Left: schematic of the double-layer layout of the RPC chambers. Right: illustration of the RPC technology. |

png pdf |

Figure 56-a:

Schematic of the double-layer layout of the RPC chambers. |

png pdf |

Figure 56-b:

Illustration of the RPC technology. |

png pdf |

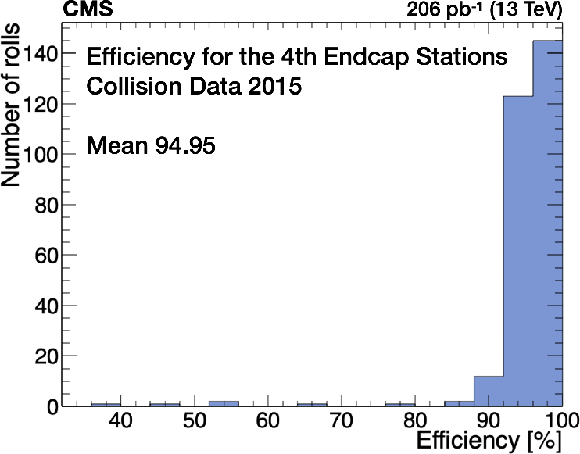

Figure 57:

Efficiency distribution of the RE$ \pm $4 stations in their first year of operation in 2015. |

png pdf |

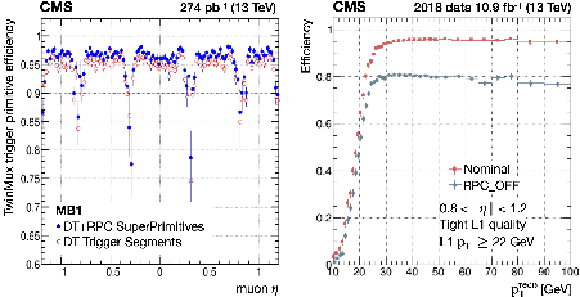

Figure 58:

Left: station-1 barrel trigger-primitive efficiency as a function of muon pseudorapidity. Right: trigger efficiency as a function of muon $ p_{\mathrm{T}} $ for the OMTF, derived from a trigger emulation applied to real data, using (red) and not using (blue) RPC information. |

png pdf |

Figure 58-a:

Station-1 barrel trigger-primitive efficiency as a function of muon pseudorapidity. |

png pdf |

Figure 58-b:

Trigger efficiency as a function of muon $ p_{\mathrm{T}} $ for the OMTF, derived from a trigger emulation applied to real data, using (red) and not using (blue) RPC information. |

png pdf |

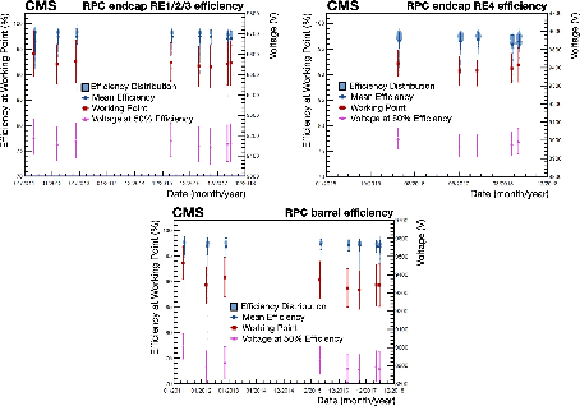

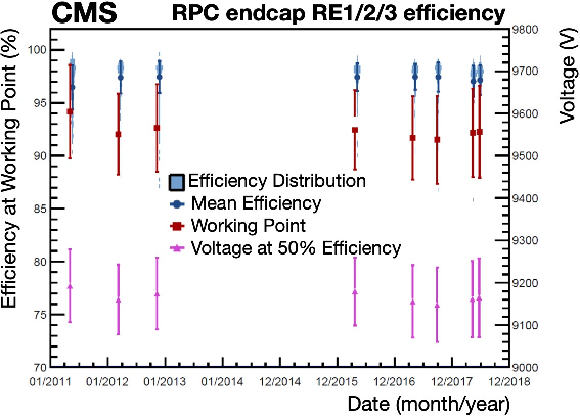

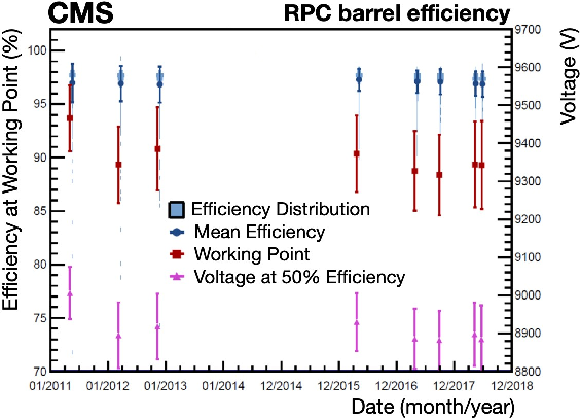

Figure 59:

Temporal evolution of efficiencies determined from HV scan data at the WP and at $ V_{50\%} $, for RE1/2/3 (upper left), RE4 (upper right), and the barrel (lower). The light blue bands show the histograms of the distributions, where the width of the band represents the population of channels having the corresponding efficiency value. |

png pdf |

Figure 59-a:

Temporal evolution of efficiencies determined from HV scan data at the WP and at $ V_{50\%} $, for RE1/2/3. The light blue bands show the histograms of the distributions, where the width of the band represents the population of channels having the corresponding efficiency value. |

png pdf |

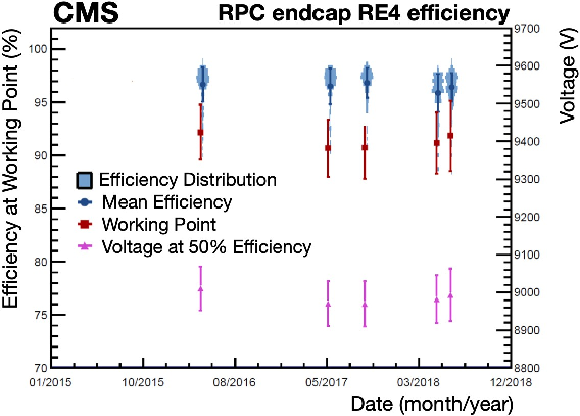

Figure 59-b:

Temporal evolution of efficiencies determined from HV scan data at the WP and at $ V_{50\%} $, for RE4. The light blue bands show the histograms of the distributions, where the width of the band represents the population of channels having the corresponding efficiency value. |

png pdf |

Figure 59-c:

Temporal evolution of efficiencies determined from HV scan data at the WP and at $ V_{50\%} $, for the barrel. The light blue bands show the histograms of the distributions, where the width of the band represents the population of channels having the corresponding efficiency value. |

png pdf |

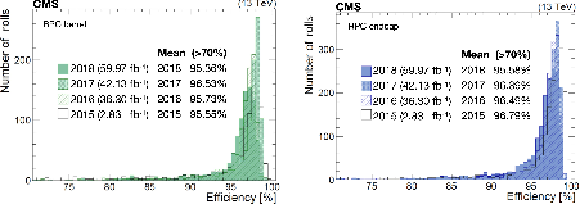

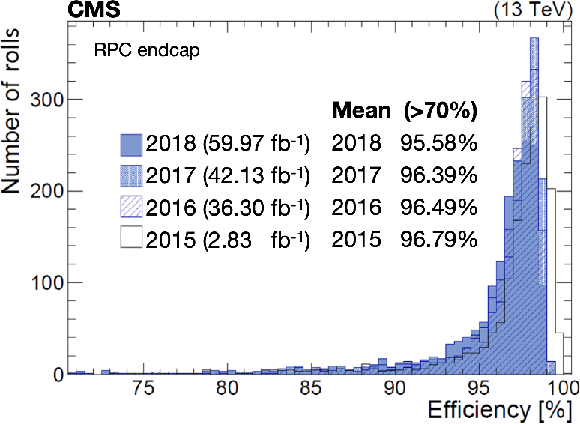

Figure 60:

Distributions of the overall RPC efficiencies in the barrel (left) and endcaps (right) during pp data taking in Run 2 [109]. |

png pdf |

Figure 60-a:

Distributions of the overall RPC efficiencies in the barrel during pp data taking in Run 2 [109]. |

png pdf |

Figure 60-b:

Distributions of the overall RPC efficiencies in the endcaps during pp data taking in Run 2 [109]. |

png pdf |

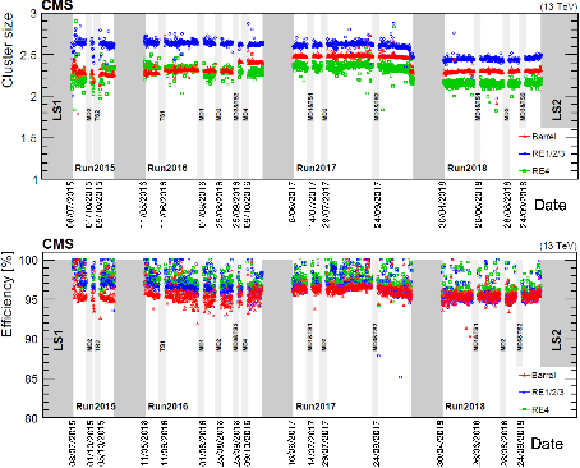

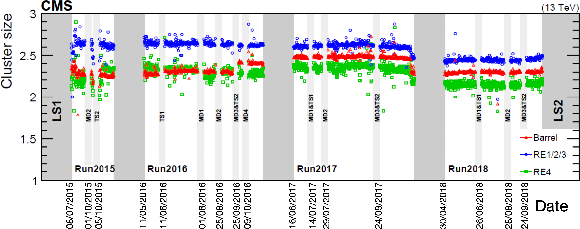

Figure 61:

History of the RPC efficiency (upper) and cluster size (lower) during Run 2. Gray areas correspond to the scheduled technical stops. |

png pdf |

Figure 61-a:

History of the RPC efficiency during Run 2. Gray areas correspond to the scheduled technical stops. |

png pdf |

Figure 61-b:

History of the RPC cluster size during Run 2. Gray areas correspond to the scheduled technical stops. |

png pdf |

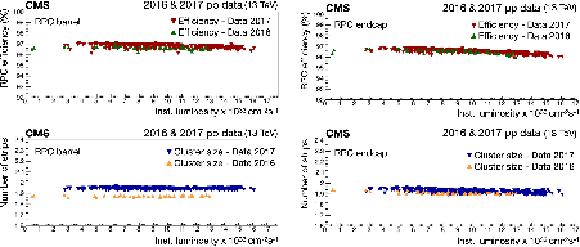

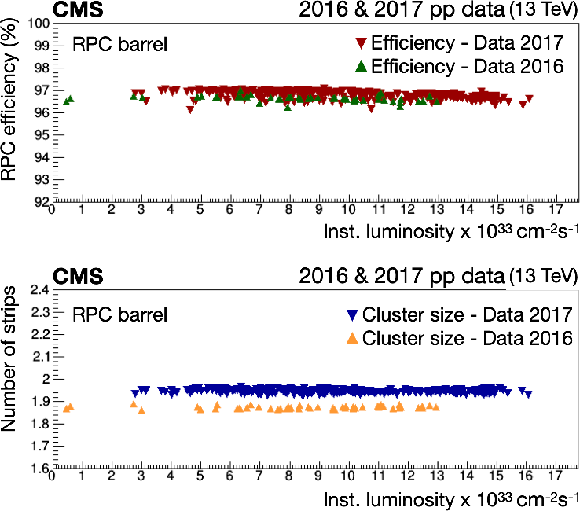

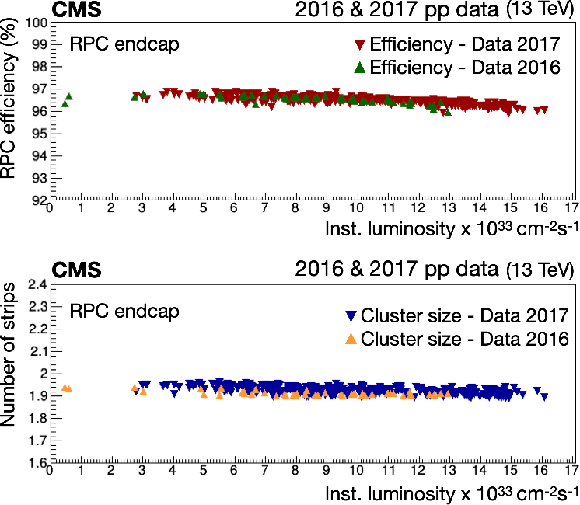

Figure 62:

RPC barrel and endcap efficiency (upper) and cluster size (lower) as a function of the LHC instantaneous luminosity for pp collisions in 2016 and 2017. The linear extrapolation to the instantaneous luminosity expected at the HL-LHC of 7.5 $ \times$ 10$^{34}$ cm$^{-2}$s$^{-1}$ shows a 1.35% reduction in efficiency for the barrel and 3.5% for the endcap. |

png pdf |

Figure 62-a:

RPC barrel and endcap efficiency as a function of the LHC instantaneous luminosity for pp collisions in 2016 and 2017. The linear extrapolation to the instantaneous luminosity expected at the HL-LHC of 7.5 $ \times$ 10$^{34}$ cm$^{-2}$s$^{-1}$ shows a 1.35% reduction in efficiency for the barrel and 3.5% for the endcap. |

png pdf |

Figure 62-b:

RPC barrel and endcap cluster size as a function of the LHC instantaneous luminosity for pp collisions in 2016 and 2017. The linear extrapolation to the instantaneous luminosity expected at the HL-LHC of 7.5 $ \times$ 10$^{34}$ cm$^{-2}$s$^{-1}$ shows a 1.35% reduction in efficiency for the barrel and 3.5% for the endcap. |

png pdf |

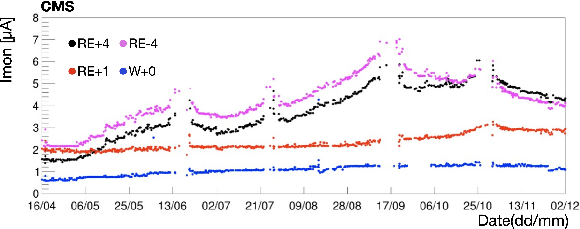

Figure 63:

Ohmic current history in W$ + $0, RE$ + $1, RE$ + $4, and RE$-$4. |

png pdf |

Figure 64:

RPC physics current history in RE$ \pm $1, RE$ \pm $2, RE$ \pm $3 and RE$ \pm $4. |

png pdf |

Figure 65:

Ohmic current as a function of HF concentration. |

png pdf |

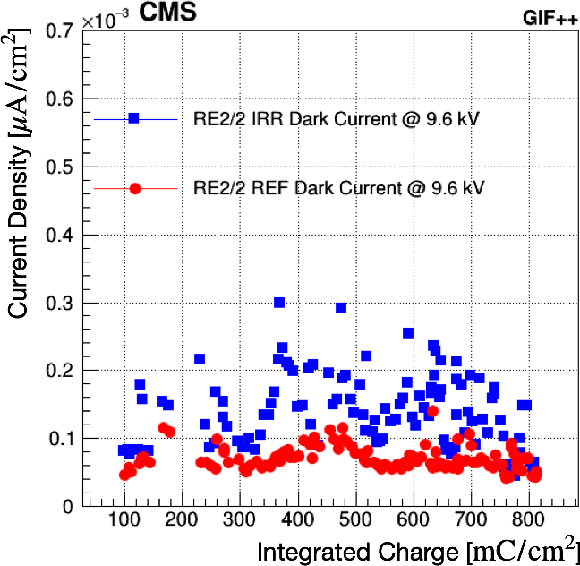

Figure 66:

Dark-current density for the irradiated (blue squares) and reference (red circles) RE2 chambers as a function of the collected integrated charge at 6.5 kV (left) and 9.6 kV (right). |

png pdf |

Figure 66-a:

Dark-current density for the irradiated (blue squares) and reference (red circles) RE2 chambers as a function of the collected integrated charge at 6.5 kV. |

png pdf |

Figure 66-b:

Dark-current density for the irradiated (blue squares) and reference (red circles) RE2 chambers as a function of the collected integrated charge at 9.6 kV. |

png pdf |

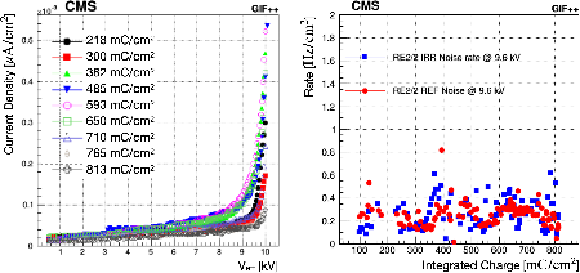

Figure 67:

Left: dark-current density monitored as a function of the effective high voltage at different values of the collected integrated charge for the irradiated RE2 chamber. Right: average noise rate as a function of the collected integrated charge for the irradiated (blue squares) and reference (red circles) RE2 chambers. |

png pdf |

Figure 67-a:

Dark-current density monitored as a function of the effective high voltage at different values of the collected integrated charge for the irradiated RE2 chamber. |

png pdf |

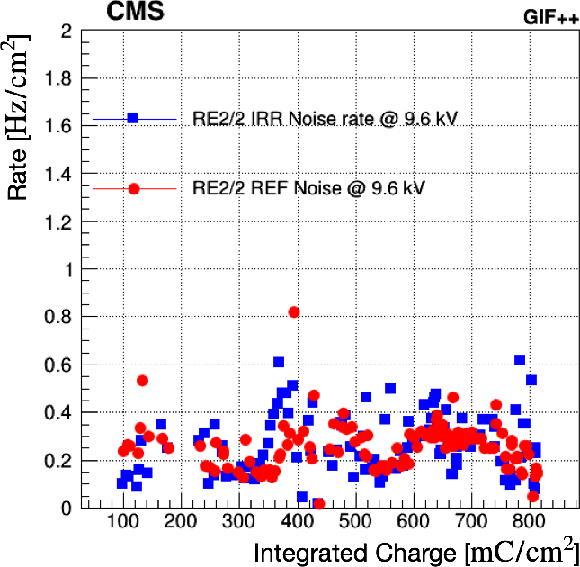

Figure 67-b:

Average noise rate as a function of the collected integrated charge for the irradiated (blue squares) and reference (red circles) RE2 chambers. |

png pdf |

Figure 68:

Resistivity ratio (blue squares) and current ratio (red circles) between the irradiated and reference RE2 chambers as a function of the collected integrated charge. |

png pdf |

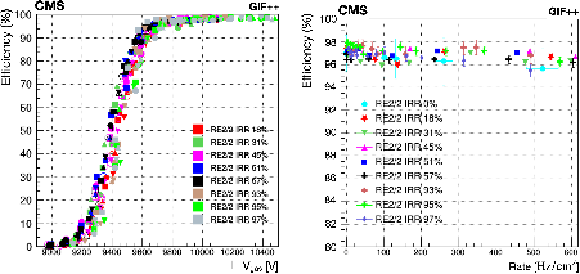

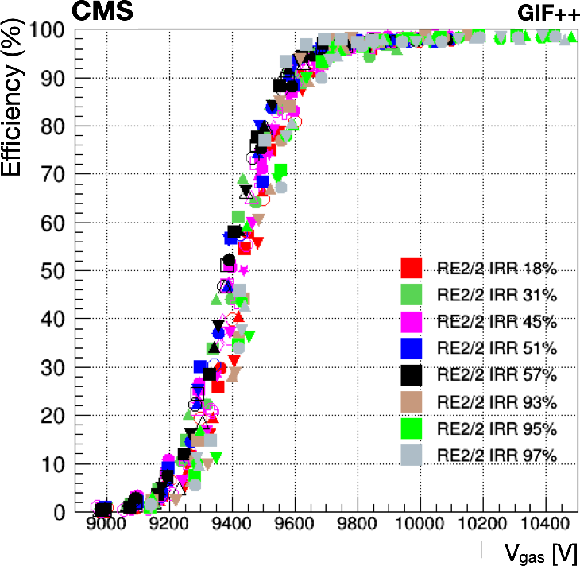

Figure 69:

Left: irradiated RE2/2 chamber efficiency as a function of $ V_{\text{gas}} $ for different background irradiation rates, up to 600 Hz/cm$^2$, and different integrated charge values. Different marker shapes of the same color represent different background rates at the same integrated charge values. Right: irradiated RE2 chamber efficiency at the WP as a function of the background rate at different values of the collected integrated charge. |

png pdf |

Figure 69-a:

Irradiated RE2/2 chamber efficiency as a function of $ V_{\text{gas}} $ for different background irradiation rates, up to 600 Hz/cm$^2$, and different integrated charge values. Different marker shapes of the same color represent different background rates at the same integrated charge values. |

png pdf |

Figure 69-b:

Irradiated RE2 chamber efficiency at the WP as a function of the background rate at different values of the collected integrated charge. |

png pdf |

Figure 70:

Photographs illustrating the gas-leak repair procedures. Left: scientists working on the repair procedure. Middle left: access to the broken component. Middle right: repair of components. Right: closing and validation. |

png pdf |

Figure 71:

Efficiency comparison between 2018 (red) and 2021 (blue) cosmic ray data for chambers with repaired gas leaks. |

png pdf |

Figure 72:

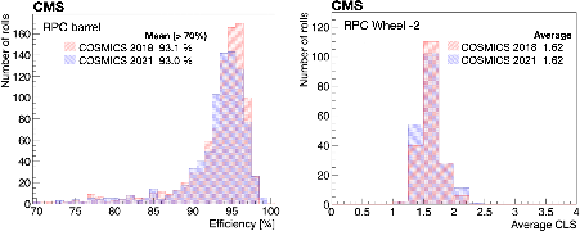

Left: distribution of the efficiency per roll in the RPC barrel chambers. Only chambers with rolls of efficiency greater than 70% are considered. Right: average cluster size for the RPC barrel chambers in wheel W-2. In both figures, the cosmic ray muon data from 2018 (2021) are indicated in red (blue). |

png pdf |

Figure 72-a:

Distribution of the efficiency per roll in the RPC barrel chambers. Only chambers with rolls of efficiency greater than 70% are considered. |

png pdf |

Figure 72-b:

Average cluster size for the RPC barrel chambers in wheel W-2. In both figures, the cosmic ray muon data from 2018 (2021) are indicated in red (blue). |

png pdf |

Figure 73:

Sketch of GE1/1 system of one endcap indicating its location relative to the full endcap muon system, the endcap calorimeter, and the shielding elements. |

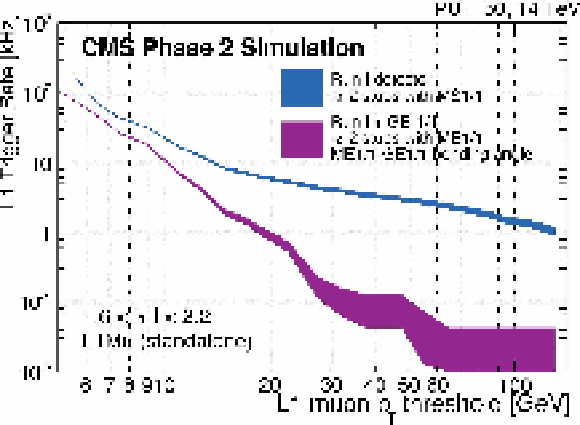

png pdf jpg |

Figure 74:

L1 muon trigger rate with and without the GE1/1 upgrade, assuming an instantaneous luminosity of 2 $ \times$ 10$^{34}$ cm$^{-2}$s$^{-1}$, where MS1/1 indicates the first muon station [96]. |

png pdf |

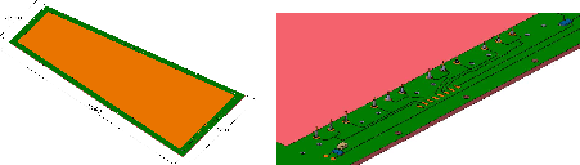

Figure 75:

Left: layout of the GE1/1 chambers along the endcap ring, indicating how the short and long chambers fit in the existing volume. Right: blowup of the trapezoidal detector, GEM foils, and readout planes, indicating the geometry and main elements of the GEM detectors [124]. |

png pdf |

Figure 75-b:

Blowup of the trapezoidal detector, GEM foils, and readout planes, indicating the geometry and main elements of the GEM detectors [124]. |

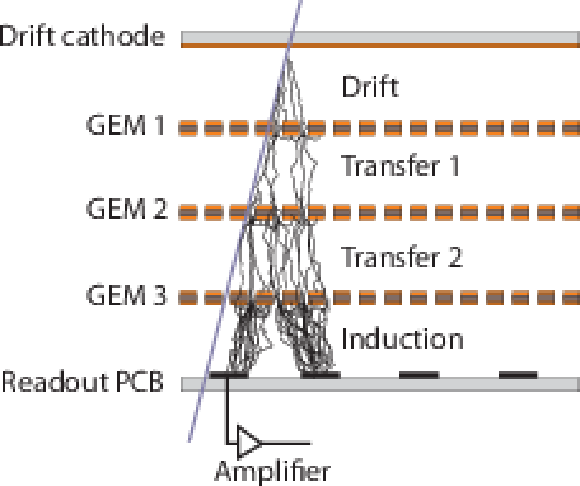

png pdf |

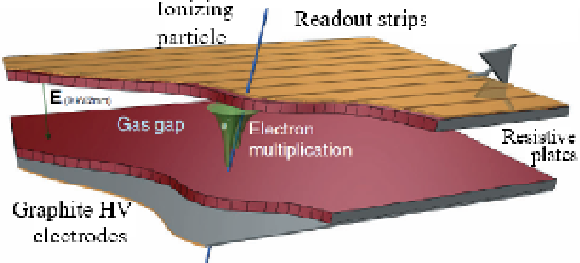

Figure 76:

Sketch of a triple GEM detector showing the three foils, cathode, readout PCB and amplification [96]. |

png pdf |

Figure 77:

GE1/1 drift board (left) and a magnified view of the drift board (right) showing the HV pins and the resistor and capacitor network connecting to the chamber HV supply. |

png pdf |

Figure 78:

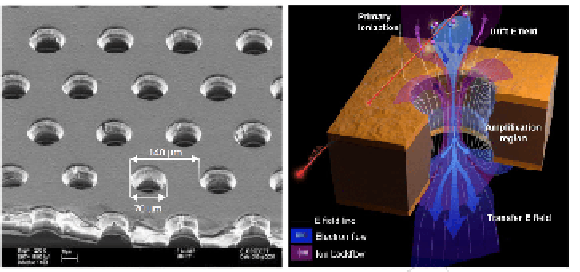

Scanning electron microscope image of a GE1/1 foil (left) and a diagram of the multiplication principle (right) [96]. |

png pdf |

Figure 79:

Left: periphery GE1/1 gas rack. Center: location of the gas lines feeding the GE1/1 chambers. Right: exploded view of a GE1/1 chamber showing the gas plug attached to the readout board. |

png |

Figure 79-a:

Periphery GE1/1 gas rack. Center: location of the gas lines feeding the GE1/1 chambers. |

png pdf |

Figure 80:

GE1/1 electronics overview showing the frontend electronics, VFAT, GEB, and opto-hybrid boards, as well as the optical links and backend readout with connections to the L1 trigger and DAQ. |

png pdf |

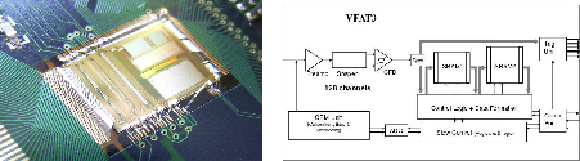

Figure 81:

Picture of the VFAT3 ASIC (left) and a high-level schematic (right). |

png pdf jpg |

Figure 82:

Photo of the GE1/1 opto-hybrid board with the Xilinx Virtex-6 FPGA at the center. |

png pdf |

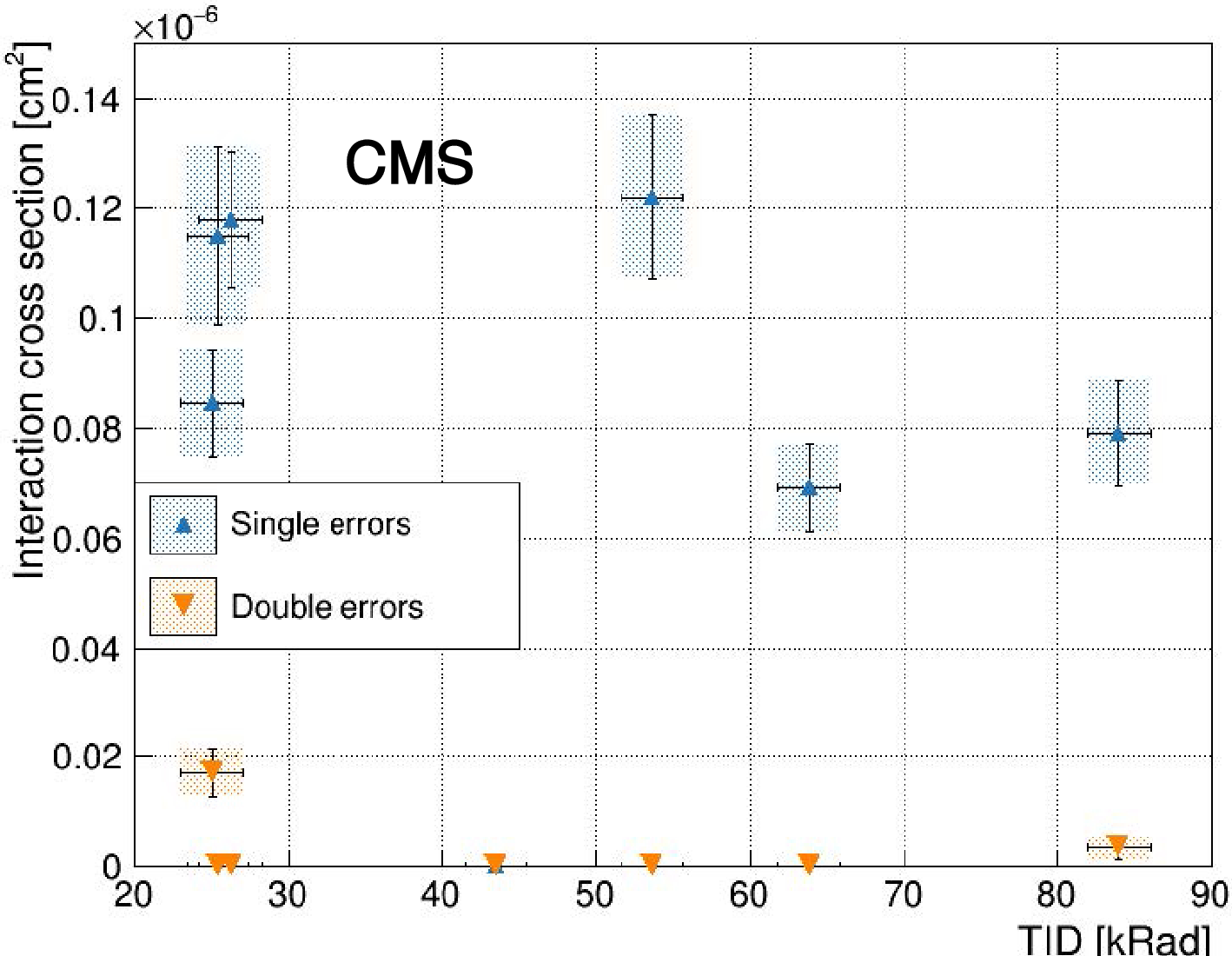

Figure 83:

Single- and double-error cross sections measured in irradiation tests as a function of the TID. |

png pdf |

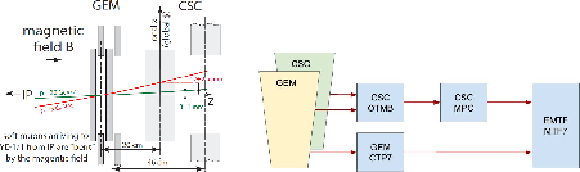

Figure 84:

Side view of the GEM-CSC trigger coincidence (left) and schematic overview of the data flow in the GEM and CSC trigger processor (right). The addition of the GE1/1 station significantly increases (by a factor of 3 to 5) the lever arm of the distance traveled in $ z $ by a muon originating from the interaction point. The bending angle between the entrance of the muon to the GE1/1 station and the exit from the ME1/1 CSC station can be used to estimate the momentum of the muon. |

png |

Figure 84-a:

Side view of the GEM-CSC trigger coincidence. |

png pdf |

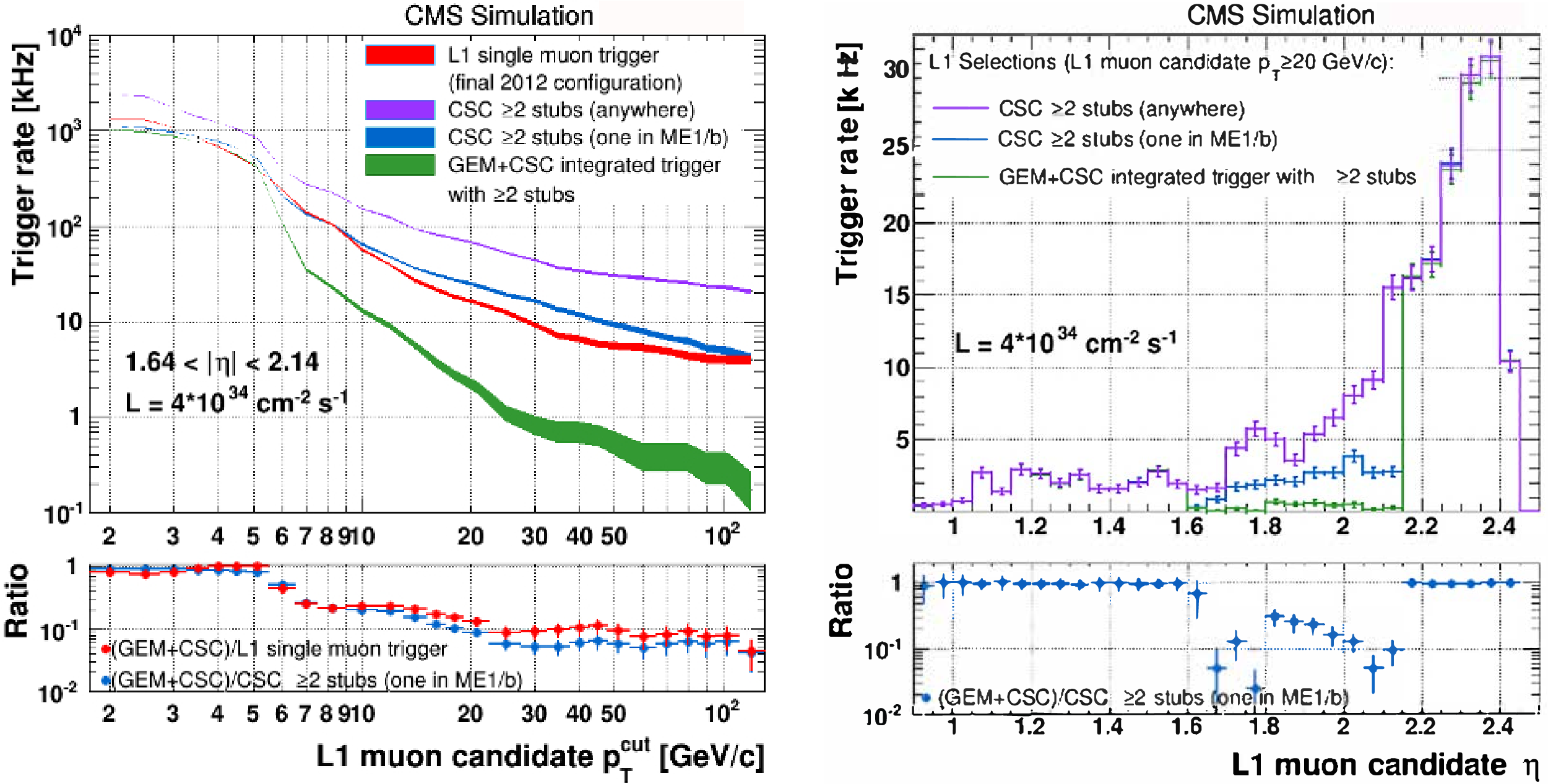

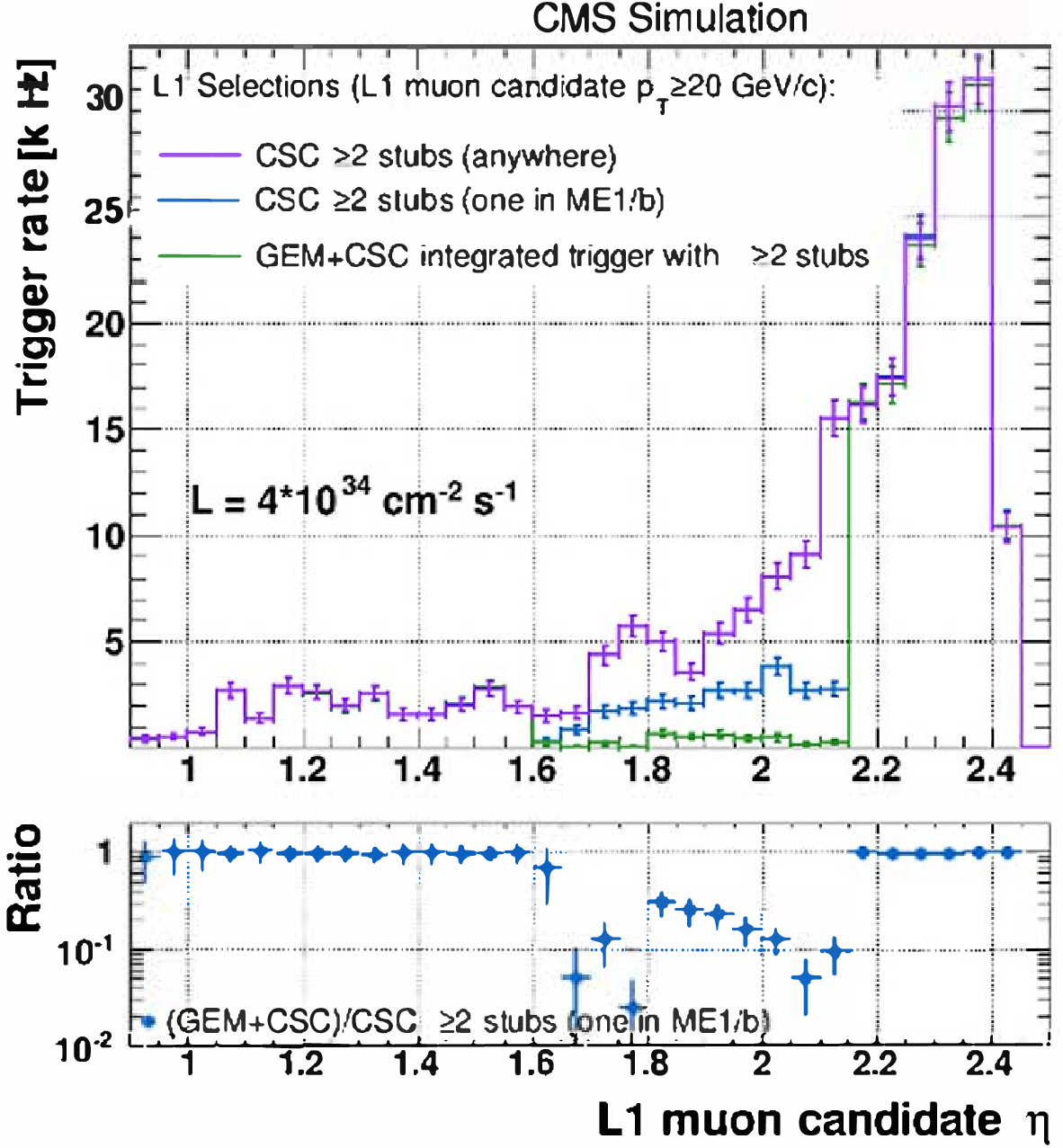

Figure 85:

Muon rate from simulation as a function of $ p_{\mathrm{T}} $ (left) and $ \eta $ (right) with and without the integration of the GEM chambers under various assumptions [96]. |

png pdf |

Figure 85-a:

Muon rate from simulation as a function of $ p_{\mathrm{T}} $ with and without the integration of the GEM chambers under various assumptions [96]. |

png pdf |

Figure 85-b:

Muon rate from simulation as a function of $ \eta $ with and without the integration of the GEM chambers under various assumptions [96]. |

png pdf |

Figure 86:

GEM test beam results showing the efficiency (left) and time resolution (right) as a function of the drift voltage placed on the GEM foils. The chosen gas mixture Ar/CO$_2$ (70/30) has very similar properties to Ar/CO$_2$/CF$_4$ (45/15/40) and was selected because CF$_4$, a greenhouse gas, is being phased out of industry. |

png |

Figure 86-a:

GEM test beam results showing the efficiency as a function of the drift voltage placed on the GEM foils. The chosen gas mixture Ar/CO$_2$ (70/30) has very similar properties to Ar/CO$_2$/CF$_4$ (45/15/40) and was selected because CF$_4$, a greenhouse gas, is being phased out of industry. |

png |

Figure 86-b:

GEM test beam results showing the time resolution as a function of the drift voltage placed on the GEM foils. The chosen gas mixture Ar/CO$_2$ (70/30) has very similar properties to Ar/CO$_2$/CF$_4$ (45/15/40) and was selected because CF$_4$, a greenhouse gas, is being phased out of industry. |

png pdf |

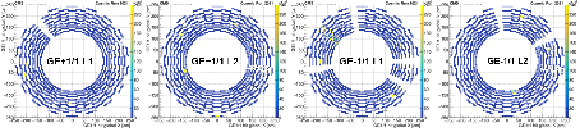

Figure 87:

GE1/1 occupancy in cosmic ray muon data recorded in 2021. |

png pdf |

Figure 88:

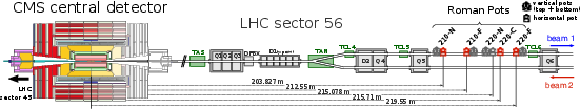

Schematic layout of the beam line between the interaction point and the RP locations in LHC sector 56, corresponding to the negative $ z $ direction in the CMS coordinate system. The accelerator magnets are indicated in gray; the collimator system elements in green. The RP units marked in red are those used by PPS during Run 2; the dark gray ones are part of the TOTEM experiment. In Run 3 the "220 near" horizontal unit is used in place of the "210 near". |

png pdf |

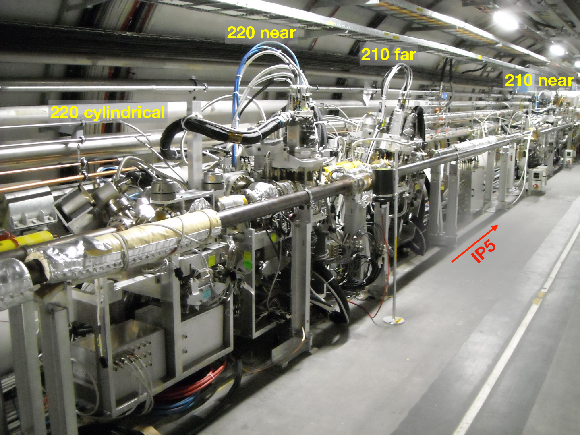

Figure 89:

View of a section of the LHC tunnel in sector 45, with part of the PPS and TOTEM RP stations along the beam line. |

png pdf |

Figure 90:

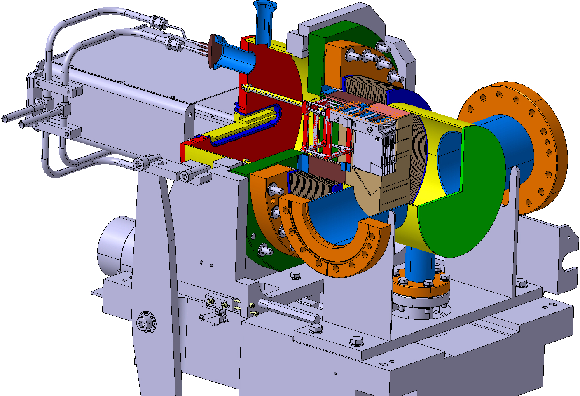

Sketches of the horizontal roman pots. Left: box-shaped pot, with the ferrite RF-shield in place. Center: cylindrical pot where the ferrite RF-shield is integrated as a ring in the flange. The thin window shapes, different for the two cases, are visible in the rightmost, vertically centered part of the pots. Right: overview of the insertion system of a box-shaped pot in a section of the LHC beam pipe. |

png |

Figure 90-a:

Box-shaped pot, with the ferrite RF-shield in place. |

png |

Figure 90-b:

Cylindrical pot where the ferrite RF-shield is integrated as a ring in the flange. The thin window shapes, different for the two cases, are visible in the rightmost, vertically centered part of the pots. |

png pdf |

Figure 90-c:

Overview of the insertion system of a box-shaped pot in a section of the LHC beam pipe. |

png pdf |

Figure 91:

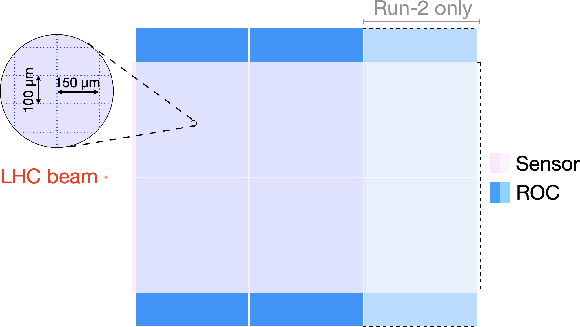

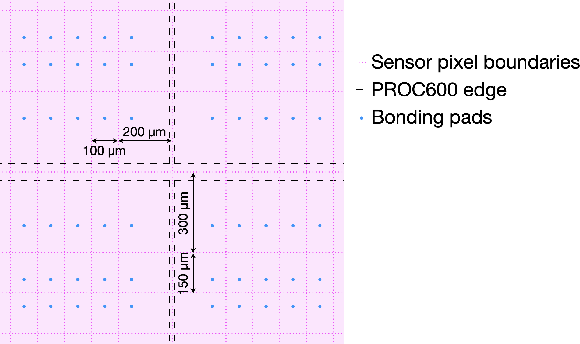

Geometry and arrangement of the pixel sensor modules. Upper left: arrangement of the sensor modules in a tracking station, relative to the LHC beam. Upper right: sensor, ROCs, and pixel geometry. The shaded areas with dashed contours refer to the larger, 3$ \times $2 modules used in Run 2. Lower: detail of pixels at internal ROC edges. |

png |

Figure 91-a:

Arrangement of the sensor modules in a tracking station, relative to the LHC beam. |

png |

Figure 91-b:

Sensor, ROCs, and pixel geometry. The shaded areas with dashed contours refer to the larger, 3$ \times $2 modules used in Run 2. |

png |

Figure 91-c:

Detail of pixels at internal ROC edges. |

png pdf |

Figure 92:

Schematic layout of the columns of 3D pixels. Left: double-sided technology, used in Run 2. Right: single-sided technology, used in Run 3. Metal bumps on top are used for interconnection with the readout chips; p-type surface implants, such as p-stop or p-spray, provide isolation between contiguous pixels. Reproduced from Ref. [149]. |

png pdf |

Figure 93:

Schematic diagram of the PPS pixel tracker readout chain. The various components shown are described in Ref. [14]. |

png pdf |

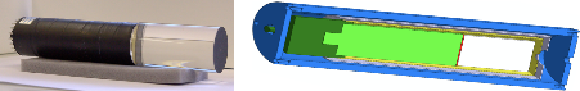

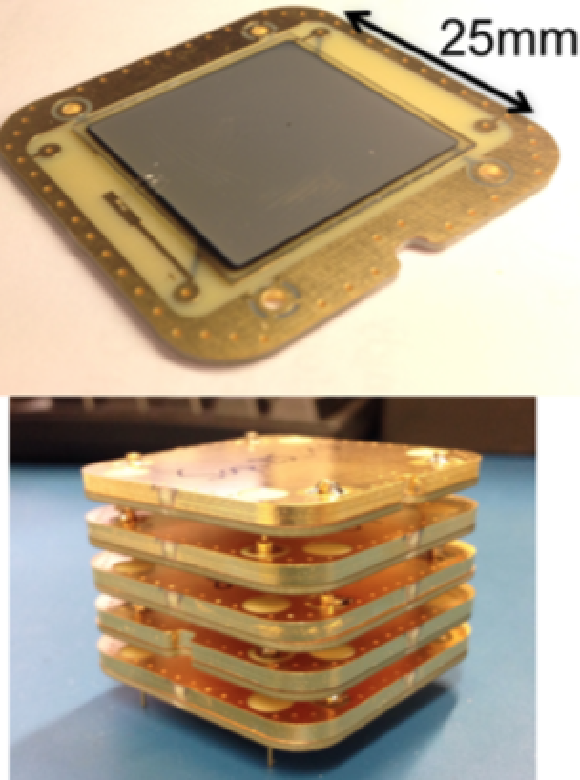

Figure 94:

The detector module of the silicon pixel tracker, in its Run 2 (upper) and Run 3 (lower) versions. |

png pdf jpg |

Figure 95:

The pixel PortCard for Run 3; the small flange near the bottom acts as a feed-through for the board. The section below is housed inside the secondary vacuum volume of the pot. |

png pdf |

Figure 96:

Details of the pixel detector package. Left: Run 2 version. Center: Run 3 version. Right: mechanical, cooling, and electrical connections to the upper part of the RP in Run 3: the vacuum section of the PortCard is visible, as well as the cooling circuit and the connections to the environmental sensors. |

png pdf |

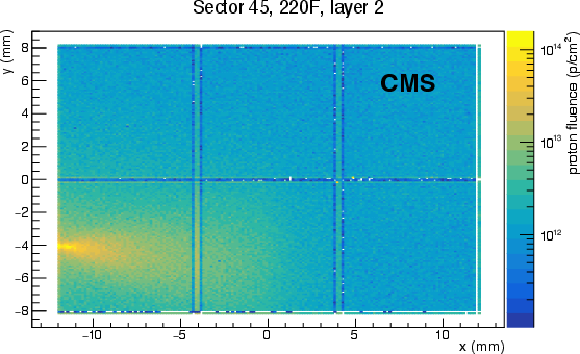

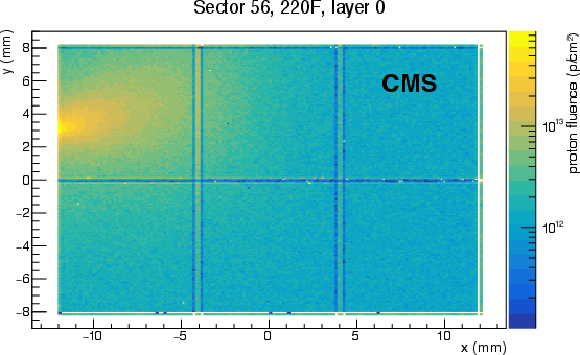

Figure 97:

Proton fluence in two planes (one per arm) of the PPS pixel tracker in a sample run in 2017, normalized to an integrated luminosity of 1 fb$ ^{-1} $. The horizontal and vertical lines in the distributions are an artifact due to the different pixel size of the sensors in those regions. |

png pdf |

Figure 97-a:

Proton fluence in one plane of the PPS pixel tracker in a sample run in 2017, normalized to an integrated luminosity of 1 fb$ ^{-1} $. The horizontal and vertical lines in the distributions are an artifact due to the different pixel size of the sensors in those regions. |

png pdf |

Figure 97-b:

Proton fluence in one plane of the PPS pixel tracker in a sample run in 2017, normalized to an integrated luminosity of 1 fb$ ^{-1} $. The horizontal and vertical lines in the distributions are an artifact due to the different pixel size of the sensors in those regions. |

png pdf |

Figure 98:

The system for internal motion of the pixel detector package. Left: detail of a partially assembled detector package: the stepper motor is the black object on top; the blue object below is the potentiometer used to monitor the position; both have their bodies attached to the sliding slit on top, while their mobile tips are screwed to the support structure for the modules. Right: results of a motion test inside an RP at standard working conditions ($-$20$^{\circ}$C and about 5 mbar), with measured versus nominal position. Two sets of points can be identified for forward and backward movements, revealing a hysteresis effect. |

png pdf |

Figure 98-b:

Results of a motion test inside an RP at standard working conditions ($-$20$^{\circ}$C and about 5 mbar), with measured versus nominal position. Two sets of points can be identified for forward and backward movements, revealing a hysteresis effect. |

png pdf |

Figure 99:

Left: details of the four-pad and two-pad segmentation of the diamond sensors used in the Run 3 modules. Right: arrangement of the four crystals in a Run 3 module (adapted from Ref. [155]), where the position of the beam is indicated by the spot on the left. |

png pdf |

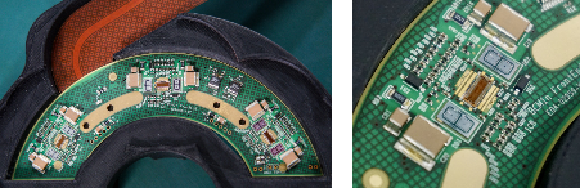

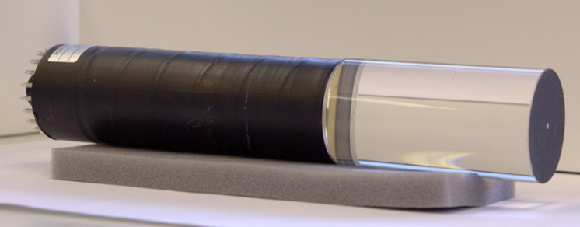

Figure 100:

Left: the hybrid board for the Run 3 timing detectors' readout; the lower, wider section hosts the sensors and is housed inside the secondary vacuum volume of the pot. Right: detail of the diamond sensors on one side, connected via wire bonds to the frontend electronics. |

png pdf |

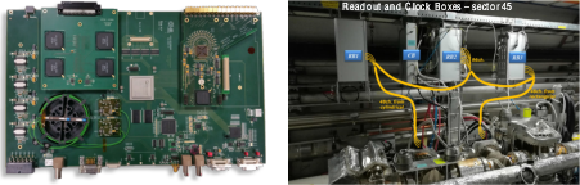

Figure 101:

Left: the digital readout unit. In this example both an HPTDC mezzanine (upper left) and a SAMPIC mezzanine (upper right) are mounted on the motherboard. Right: location of PPS mini-crates installed above the LHC beam pipe, for both the cylindrical timing RP already used in Run 2, and the rectangular 220-N RP newly equipped for timing in Run 3. The readout boxes (labeled "RB") contain the NINO boards and DRUs as described in the text. The box labeled "CB" holds components for the precise reference clock. |

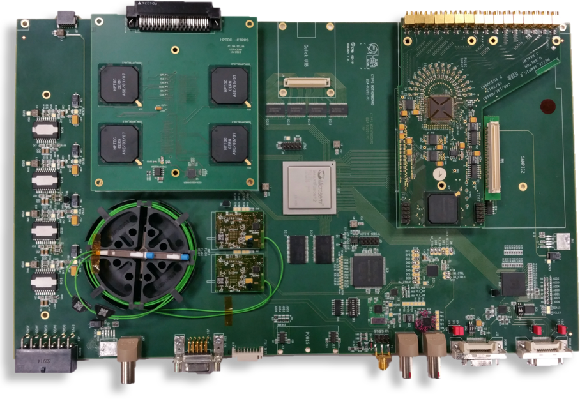

png pdf |

Figure 101-a:

The digital readout unit. In this example both an HPTDC mezzanine (upper left) and a SAMPIC mezzanine (upper right) are mounted on the motherboard. |

png pdf |

Figure 101-b:

Location of PPS mini-crates installed above the LHC beam pipe, for both the cylindrical timing RP already used in Run 2, and the rectangular 220-N RP newly equipped for timing in Run 3. The readout boxes (labeled "RB") contain the NINO boards and DRUs as described in the text. The box labeled "CB" holds components for the precise reference clock. |

png pdf |

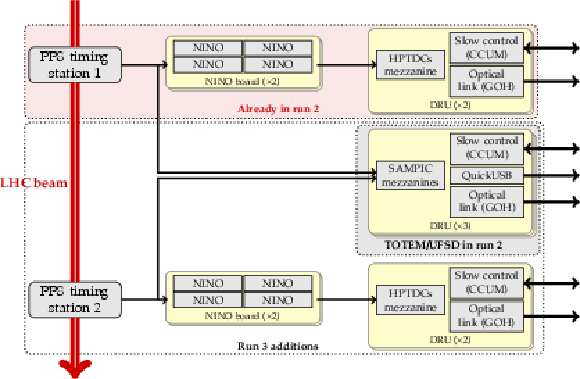

Figure 102:

Schematic diagram of the full readout chain for the PPS timing detectors in Run 3, also showing the configuration used in Run 2. The arrows on the right represent electrical connections to the slow-control ring and optical connections to components in the underground service cavern of CMS, described in Section 7.4. The central set of DRUs (3 units) was already present in Run 2, but was employed for the diamond detectors equipping the TOTEM experiment at that time, and for one layer of UFSD detectors used in 2017 for the PPS timing. |

png pdf |

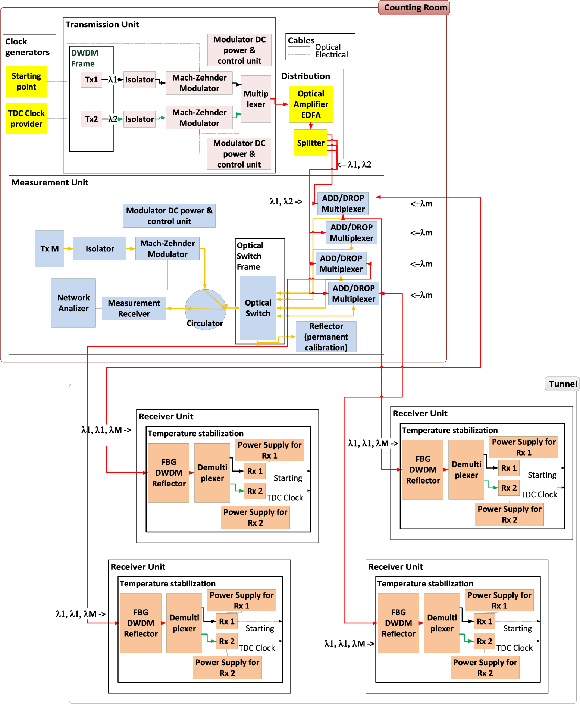

Figure 103:

A block scheme of the clock distribution system for the PPS timing detectors. The four receiver units correspond each to a timing station in the tunnel; the remaining elements of the system are all located in the counting room. Different colors of the fiber lines represent the different wavelength carriers used by the system, $ \lambda_1 $, $ \lambda_2 $, $ \lambda_\text{M} $ (black, green, and yellow, respectively), and the multiplexed signals (red). |

png pdf |

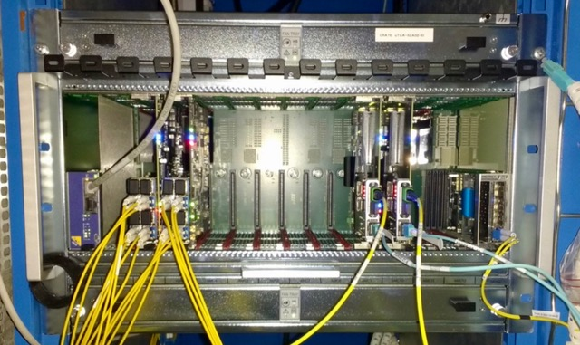

Figure 104:

Left: the PPS pixel DAQ crate. On the left are two FC7 FECs, sending clock, trigger and slow-control commands; in the center-right are two FC7 FEDs, receiving data from the two arms of the PPS spectrometer; on the far left is the MCH crate controller, on the far right is the AMC13. Right: the timing and strips DAQ crate. The SLinks are placed in the backplane of the OptoRx boards delivering data to upstream CMS DAQ. |

png pdf |

Figure 104-a:

The PPS pixel DAQ crate. On the left are two FC7 FECs, sending clock, trigger and slow-control commands; in the center-right are two FC7 FEDs, receiving data from the two arms of the PPS spectrometer; on the far left is the MCH crate controller, on the far right is the AMC13. |

png pdf |

Figure 105:

Luminosity-leveling trajectories for typical LHC fills in the $ (\alpha/2, \beta^\ast) $ plane for 2022 (dashed line) and 2023 (continuous line). |

png pdf |

Figure 106:

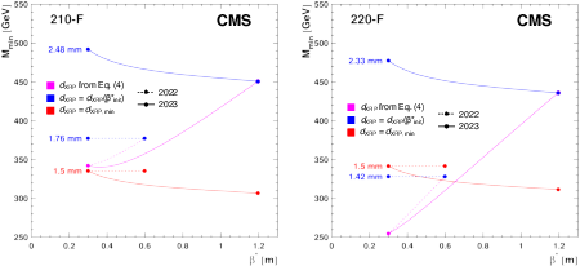

RP insertion distance $ d_{\text{XRP}} $ for the leveling trajectories of 2022 (dashed lines) and 2023 (continuous lines) from Fig. 105, evaluated for the pots 210-F (left) and 220-F (right) in the case of the TCT fixed to $ d_{\text{TCT}} = $ 8.5$\sigma_{x,\text{TCT}}$ ($\beta^\ast=$ 30 cm). The magenta line shows the nominal distance according to Eq. (7). The blue lines show the most conservative constant RP distance, i.e., never closer to the beam than the nominal distance. If this blue distance is smaller than the limit of 1.5 mm (red line), which is the case for 220-F, the pot has to stay at that limit. The fill evolves from the right to the left. |

png pdf |

Figure 106-a:

RP insertion distance $ d_{\text{XRP}} $ for the leveling trajectories of 2022 (dashed lines) and 2023 (continuous lines) from Fig. 105, evaluated for the pot 210-F in the case of the TCT fixed to $ d_{\text{TCT}} = $ 8.5$\sigma_{x,\text{TCT}}$ ($\beta^\ast=$ 30 cm). The magenta line shows the nominal distance according to Eq. (7). The blue lines show the most conservative constant RP distance, i.e., never closer to the beam than the nominal distance. |

png pdf |

Figure 106-b:

RP insertion distance $ d_{\text{XRP}} $ for the leveling trajectories of 2022 (dashed lines) and 2023 (continuous lines) from Fig. 105, evaluated for the pot 220-F in the case of the TCT fixed to $ d_{\text{TCT}} = $ 8.5$\sigma_{x,\text{TCT}}$ ($\beta^\ast=$ 30 cm). The magenta line shows the nominal distance according to Eq. (7). The blue lines show the most conservative constant RP distance, i.e., never closer to the beam than the nominal distance. If this blue distance is smaller than the limit of 1.5 mm (red line), the pot has to stay at that limit. The fill evolves from the right to the left. |

png pdf |

Figure 107:

Minimum accepted central mass $ M_{\text{min}} $ in the RPs 210-F (left) and 220-F (right) for the old collimation scheme with the TCTs fixed at $ d_{\text{TCT}}=$ 8.5$\sigma_{x,\text{TCT}}$ ($\beta^\ast=$ 30 cm) and two cases for the RP positions. Magenta lines: RPs moving according to Eq. (7) and Fig. 106. Blue lines: RP positions fixed on the most distant point of the nominal trajectory. The red lines correspond to the 1.5 mm distance limit. The fill evolves from the right to the left. |

png pdf |

Figure 107-a:

Minimum accepted central mass $ M_{\text{min}} $ in the RPs 210-F for the old collimation scheme with the TCTs fixed at $ d_{\text{TCT}}=$ 8.5$\sigma_{x,\text{TCT}}$ ($\beta^\ast=$ 30 cm) and two cases for the RP positions. Magenta lines: RPs moving according to Eq. (7) and Fig. 106. Blue lines: RP positions fixed on the most distant point of the nominal trajectory. The red lines correspond to the 1.5 mm distance limit. The fill evolves from the right to the left. |

png pdf |

Figure 107-b:

Minimum accepted central mass $ M_{\text{min}} $ in the RPs 220-F for the old collimation scheme with the TCTs fixed at $ d_{\text{TCT}}=$ 8.5$\sigma_{x,\text{TCT}}$ ($\beta^\ast=$ 30 cm) and two cases for the RP positions. Magenta lines: RPs moving according to Eq. (7) and Fig. 106. Blue lines: RP positions fixed on the most distant point of the nominal trajectory. The red lines correspond to the 1.5 mm distance limit. The fill evolves from the right to the left. |

png pdf |

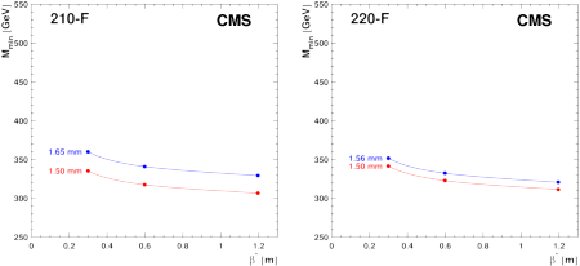

Figure 108:

Minimum accepted central mass $ M_{\text{min}} $ in the RPs 210-F (left) and 220-F (right) for the new collimation scheme with moving TCTs and fixed RP positions. The red lines correspond to the 1.5 mm distance limit. The fill evolves from the right to the left. |

png pdf |

Figure 108-a:

Minimum accepted central mass $ M_{\text{min}} $ in the RPs 210-F for the new collimation scheme with moving TCTs and fixed RP positions. The red lines correspond to the 1.5 mm distance limit. The fill evolves from the right to the left. |

png pdf |

Figure 108-b:

Minimum accepted central mass $ M_{\text{min}} $ in the RPs 220-F for the new collimation scheme with moving TCTs and fixed RP positions. The red lines correspond to the 1.5 mm distance limit. The fill evolves from the right to the left. |

png pdf |

Figure 109:

Upper mass cut-off in Run 3 caused by the debris collimators TCL4 and TCL5 for the settings explained in the text as a function of the crossing angle. The fill evolves from the left to the right between the magenta lines. |

png pdf |

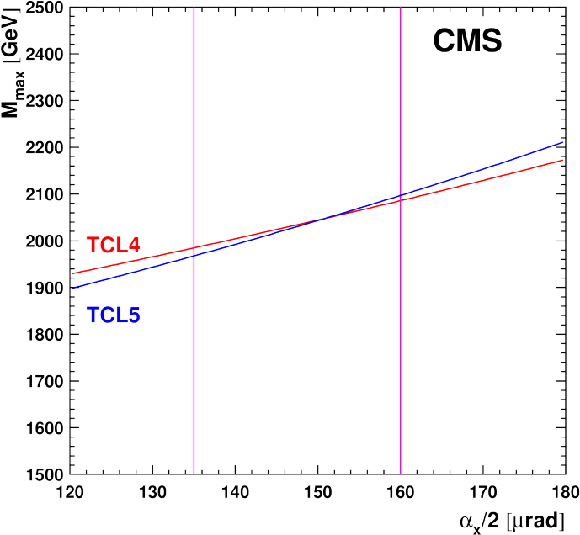

Figure 110:

Left: sketch of the general PLT geometry. The sensors are indicated by the purple squares. Right: the actual PLT detector at one end of CMS, showing the arrangement of the eight telescopes around the beam pipe. |

png pdf |

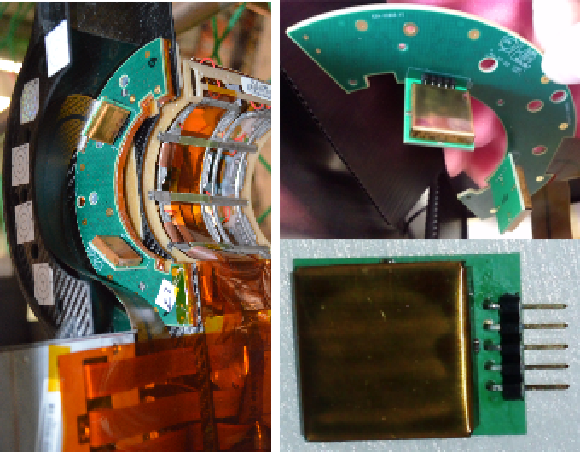

Figure 111:

Photographs of the BCM1F detector used in Run 3. Left: one full BCM1F C-shape printed circuit board. Right: a closeup of the frontend module, which includes the processing chip with two silicon double-diode sensors on each side. |

png pdf |

Figure 112:

Diagram comparing the DT readout (RO) and trigger chains in the Phase 1 system used for data taking in Run 3 and in the DT slice test that acts as a demonstrator of the Phase 2 system in Run 3 [84]. The central part of the figure indicates the number of Phase 2 on-board DT (OBDT) boards installed in each DT chamber. The left part of the figure shows how the Phase 1 readout and trigger-primitive generation are performed by the legacy on-board DT electronics (minicrates). Information, transmitted by optical fibers from the detector balconies to the counting room, is further processed independently for the readout ($ \mu $ROS) and trigger (TwinMux). The right part of the figure illustrates how the slice test TDC data are streamed by each OBDT to AB7 boards hosted in the counting room, which are used for event building and trigger-primitive generation. |

png pdf |

Figure 113:

Left: photograph of the active element of one BHM detector unit, which is a 100 mm-long by 52 mm-diameter cylindrical quartz radiator, connected to a Hamamatsu R2059 photomultiplier. Right: shielding of a BHM detector unit, consisting of a low-carbon steel tube shown in blue, a mu-metal tube in gray, and permalloy in yellow. The quartz radiator, photomultiplier tube, and socket are shown in white, light green, and dark green, respectively [202]. |

png |

Figure 113-a:

Photograph of the active element of one BHM detector unit, which is a 100 mm-long by 52 mm-diameter cylindrical quartz radiator, connected to a Hamamatsu R2059 photomultiplier. |

png |

Figure 113-b:

Shielding of a BHM detector unit, consisting of a low-carbon steel tube shown in blue, a mu-metal tube in gray, and permalloy in yellow. The quartz radiator, photomultiplier tube, and socket are shown in white, light green, and dark green, respectively [202]. |

png pdf |

Figure 114:

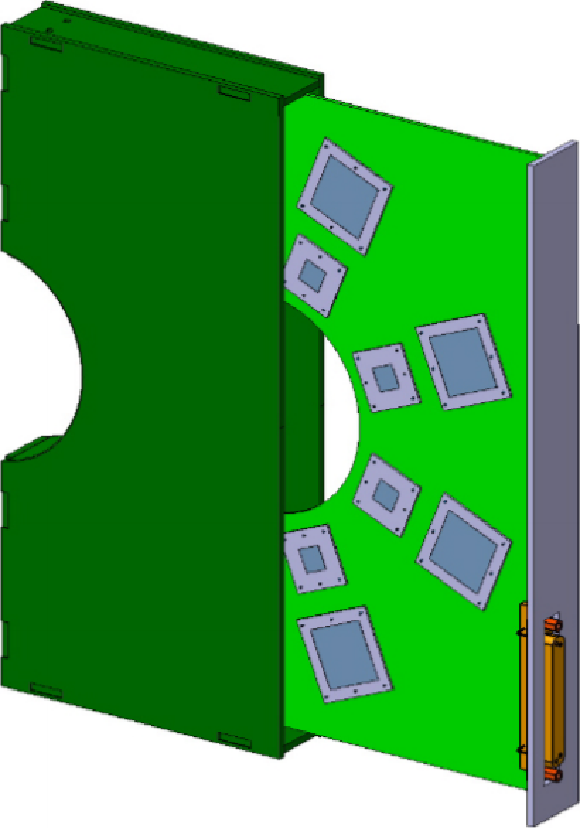

Mechanics for the BCML1 detector system. Left: a BCML1 mounted on the BCM1F C-shape PCB, attached to the PLT support structure. Right: a BCML1 mounted on a C-shape PCB (upper right), and a single sensor in a Faraday cage (lower right). |

png pdf |

Figure 115:

Mechanics for the BCML2 detector system. Left: the support structure of the BCML2 detectors, which also serves as mechanical protection. This structure is compatible with the Phase 2 beam pipe. Right: single-sensor base plate PCB for the large sensors used in BCML2 (upper right), and a complete sensor box assembly, with a stack of five sensors (lower right). |

png |

Figure 115-a:

The support structure of the BCML2 detectors, which also serves as mechanical protection. This structure is compatible with the Phase 2 beam pipe. |

png |

Figure 115-b:

Single-sensor base plate PCB for the large sensors used in BCML2 (upper right), and a complete sensor box assembly, with a stack of five sensors (lower right). |

png pdf |

Figure 116:

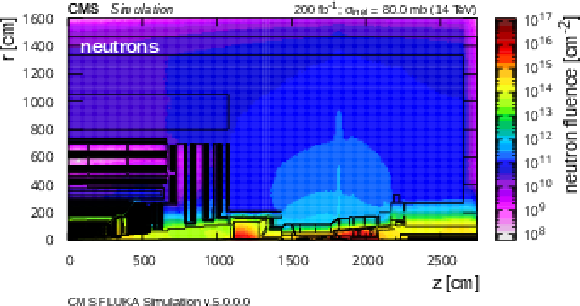

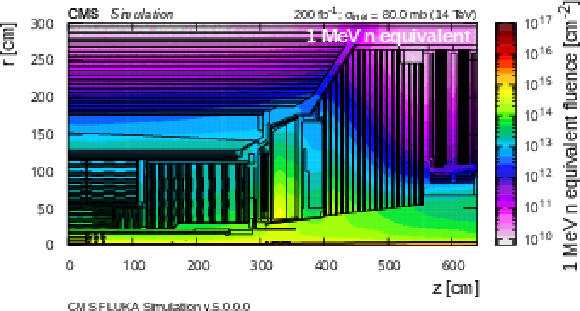

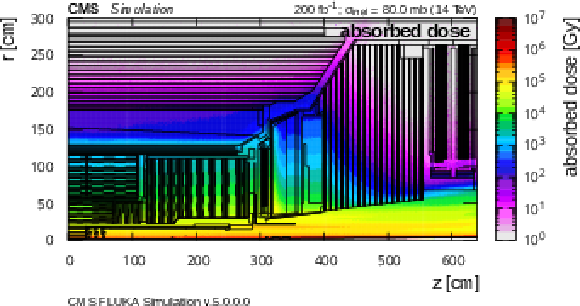

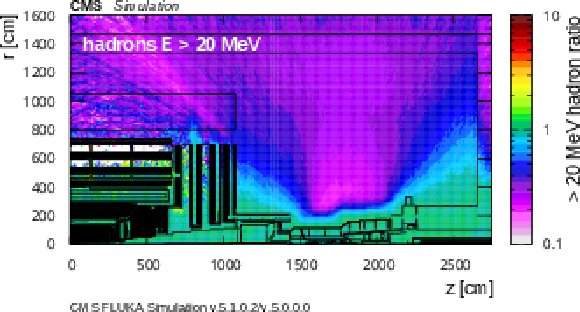

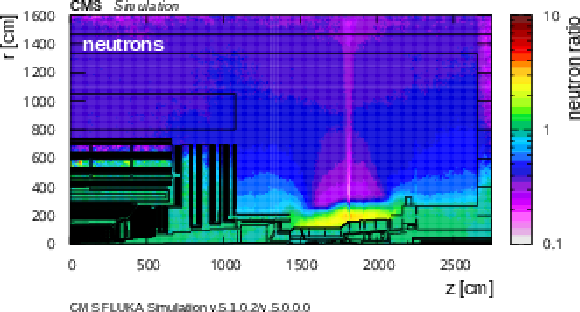

The FLUKA predictions for the expected Run 3 fluence of hadrons with energies greater than 20 MeV (upper left) and neutron fluence (upper right) normalized to an integrated luminosity of 200 fb$ ^{-1} $ at 7 TeV per beam are shown for the CMS cavern and detector. For the central part of CMS, the 1 MeV-neutron-equivalent fluence (middle left) and absorbed dose (middle right) are also presented. The lower two plots show the expected effect of the new forward shield as the ratio of hadron (left) and neutron fluences (right) in the CMS cavern comparing the Run 3 FLUKA simulation results of v5.1.0.2 with the Run 3 baseline of v5.0.0.0. |

png pdf |

Figure 116-a:

The FLUKA predictions for the expected Run 3 fluence of hadrons with energies greater than 20 MeV normalized to an integrated luminosity of 200 fb$ ^{-1} $ at 7 TeV per beam are shown for the CMS cavern and detector. |

png pdf |

Figure 116-b:

The FLUKA predictions for the expected Run 3 neutron fluence normalized to an integrated luminosity of 200 fb$ ^{-1} $ at 7 TeV per beam are shown for the CMS cavern and detector. |

png pdf |

Figure 116-c:

The FLUKA prediction for the expected Run 3 1 MeV-neutron-equivalent fluence in the central part of CMS. |

png pdf |

Figure 116-d:

The FLUKA prediction for the expected Run 3 absorbed dose in the central part of CMS. |

png pdf |

Figure 116-e:

Expected effect of the new forward shield as the ratio of hadron fluence in the CMS cavern comparing the Run 3 FLUKA simulation results of v5.1.0.2 with the Run 3 baseline of v5.0.0.0. |

png pdf |

Figure 116-f:

Expected effect of the new forward shield as the ratio of neutron fluence in the CMS cavern comparing the Run 3 FLUKA simulation results of v5.1.0.2 with the Run 3 baseline of v5.0.0.0. |

png pdf |

Figure 117:

Diagram of the Run 3 DAQ system. The total numbers of cabled elements are given, including elements used in the MiniDAQ systems described in Section 9.11 and elements installed as hot spares or for contingency. In typical global data-taking configurations, a subset is used, as described in the text. |

png pdf |

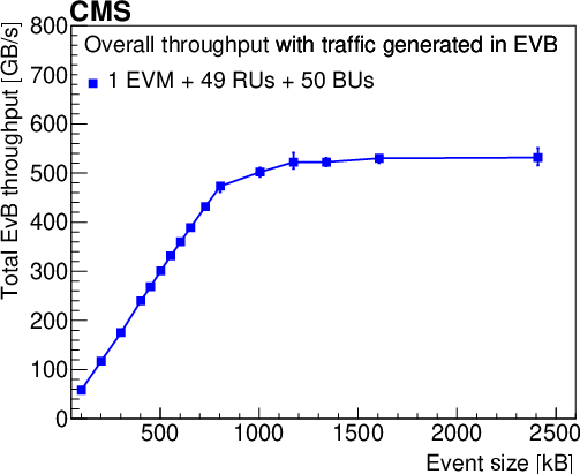

Figure 118:

Core event building throughput (left) and event rate (right) of the full-scale RU/BU EVB setup shown for a range of built event sizes. Emulated input data are generated in the RU/BUs and discarded after the event building stage. |

png pdf |

Figure 118-a:

Core event building throughput of the full-scale RU/BU EVB setup shown for a range of built event sizes. Emulated input data are generated in the RU/BUs and discarded after the event building stage. |

png pdf |

Figure 118-b:

Event rate of the full-scale RU/BU EVB setup shown for a range of built event sizes. Emulated input data are generated in the RU/BUs and discarded after the event building stage. |

png pdf |

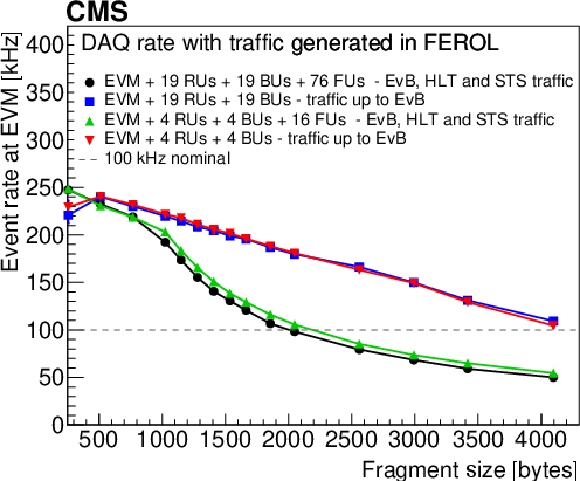

Figure 119:

The DAQ throughput (left) and event rate (right) per RU/BU node for a range of uniform fragment sizes using a mode with the data discarded after the EVB and with the traffic flow through the HLT and STS. Emulated input data are generated at the FEROLs with 20 fragments concentrated per RU/BU. |

png pdf |

Figure 119-a:

The DAQ throughput per RU/BU node for a range of uniform fragment sizes using a mode with the data discarded after the EVB and with the traffic flow through the HLT and STS. Emulated input data are generated at the FEROLs with 20 fragments concentrated per RU/BU. |

png pdf |

Figure 119-b:

Event rate per RU/BU node for a range of uniform fragment sizes using a mode with the data discarded after the EVB and with the traffic flow through the HLT and STS. Emulated input data are generated at the FEROLs with 20 fragments concentrated per RU/BU. |

png pdf |

Figure 120:

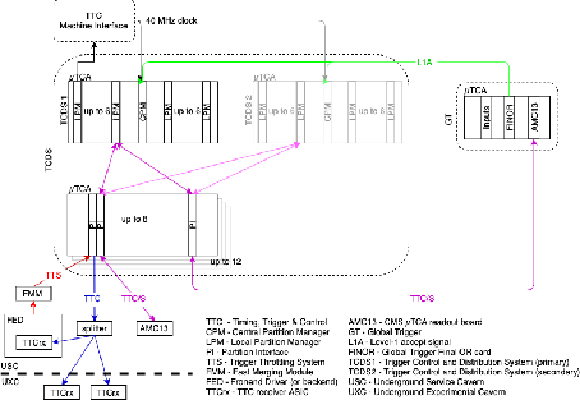

Overview of the CMS trigger control and distribution system (TCDS). |

png pdf |

Figure 121:

Comparison of the L1 $ p_{\mathrm{T}}^\text{miss} $ trigger efficiency using pileup mitigation in 2018 (circles) and in Run 3 (squares) for thresholds that provide a rate of 4.3 kHz, for $ \mathrm{Z}\to\mu\mu $ events. |

png pdf |

Figure 122:

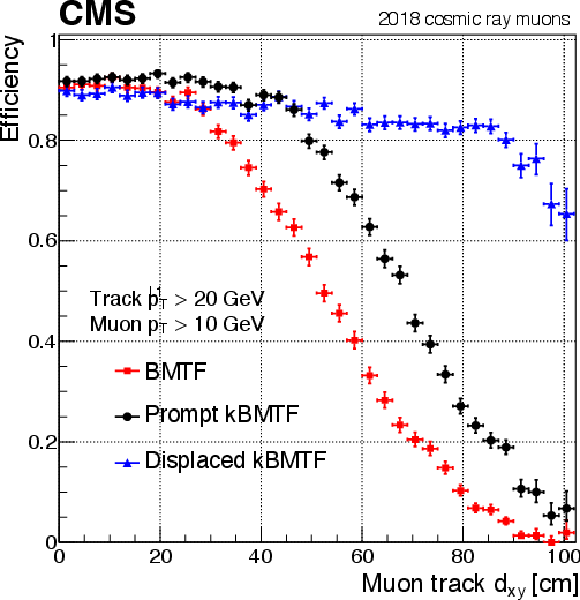

Displaced (blue) and prompt (black) kBMTF trigger efficiencies compared to the prompt BMTF (red) trigger efficiency with respect to the muon track $ d_{xy} $, obtained using a sample of cosmic ray muons from 2018 data. The efficiencies are measured using muon candidates with $ p_{\mathrm{T}} > $ 10 GeV. The prompt kBMTF improves BMTF efficiencies up to about 90% for up to 50 cm displacements, while displaced kBMTF retains efficiencies above 80% for up to 90 cm displacements. |

png pdf |

Figure 123:

The OMTF trigger efficiencies for displaced and prompt algorithms with respect to muon track $ d_{xy} $ obtained using a displaced-muon gun sample. The efficiency curves are plotted for different values of the $ p_{\mathrm{T}} $ estimate from the prompt algorithm (red, yellow, and blue), for the displaced algorithm (green), and for the combination (black). The prompt algorithm underestimates the $ p_{\mathrm{T}} $ of displaced tracks, causing most of the tracks to have $ p_{\mathrm{T}} < $ 10 GeV. The displaced algorithm can recover these tracks and improve the efficiencies to be around 80% for up to 200 cm displacements. |

png pdf |

Figure 124:

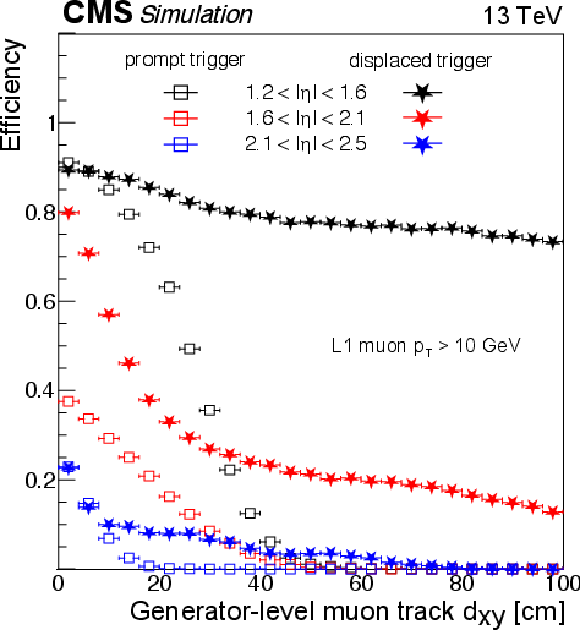

The EMTF trigger efficiencies for prompt and displaced-muon algorithms for L1 $ p_{\mathrm{T}} > $ 10 GeV with respect to muon track $ d_{xy} $ obtained using a displaced-muon gun sample. The solid stars show displaced NN performance while hollow squares show the prompt BDT performance. The different colors show different $ \eta $ regions: 1.2 $ < |\eta| < $ 1.6 (black), 1.6 $ < |\eta| < $ 2.1 (red), and 2.1 $ < |\eta| < $ 2.5 (blue). |

png pdf jpg |

Figure 125:

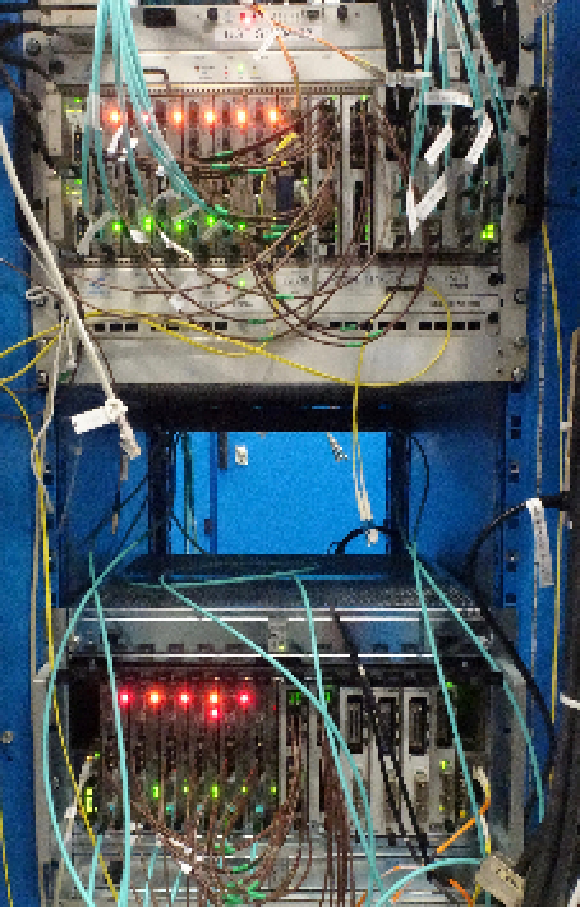

The production crate (upper) and the new test crate (lower) of the $ \mu $GT. |

png pdf |

Figure 126:

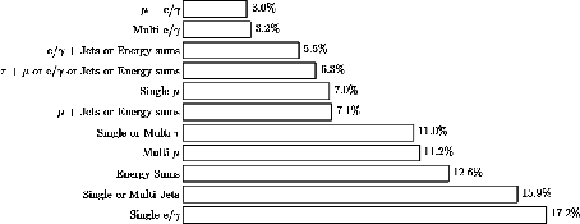

Fractions of the 100 kHz rate allocation for single- and multi-object triggers and cross triggers in the baseline Run 3 menu, calculated using Run 3 Monte Carlo simulation samples of inclusive pp events with appropriate pileup. |

png pdf |

Figure 127:

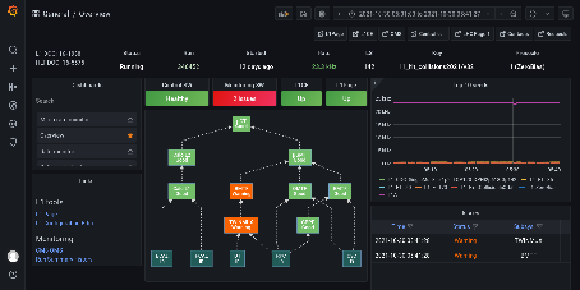

Screenshot of the Grafana L1 trigger monitoring dashboard. |

png pdf |

Figure 128:

Architecture of the Run 3 L1 scouting prototype system. The input system, located in the experiment service cavern, consists of a combination of different types of FPGA receiver boards, hosted in the PCIe bus of I/O server nodes or extender. The boards receive and pre-process data from the different trigger systems. Two of the boards (KCU1500 and SB852) use DMA to move their data to host memory, from where they are transmitted to the surface data center over 100 Gb/s Ethernet links. The VCU128 implements a TCP/IP core in the FPGA and directly transmits data to the surface system. In the surface data center, links from the L1 scouting input system are connected to a switched network. Data streams are received through this network by L1 scouting buffer servers (DSBU) and buffered in files on large RAMdisks. The L1 scouting processing units (DSPU) access buffered data from the DSBUs to perform data reduction and analysis. The processed data are finally moved to a Lustre cluster file system for long-term storage. |

png pdf |

Figure 129:

Illustration of the correlation between the impact parameter $ d_{xy} $, highlighted in red, and the difference between the angles measured for the incoming and outgoing legs of a cosmic muon. |

png pdf |

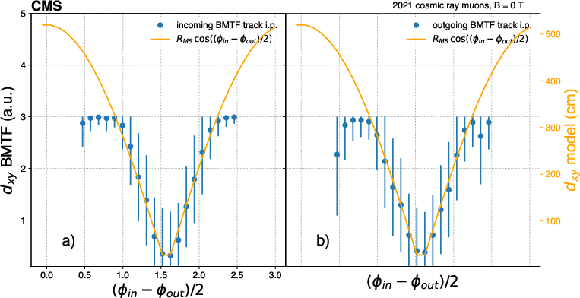

Figure 130:

The impact parameter $ d_{xy} $ of the incoming (left) and outgoing (right) cosmic ray muon tracks as measured by the BMTF as a function of the difference of azimuthal coordinates of the incoming and outgoing legs. The BMTF firmware encodes the impact parameter in two bits, hence the range of values on the left $ y $ axis. The orange curves model this dependence of the actual impact parameter as $ R_{MS}\cos[(\phi_{in}-\phi_{out})/2] $, where $ R_{MS} $ is the radius at which the BMTF measures the $ \phi $ coordinate of the track. The right-hand $ y $ axis shows the $ d_{xy} $ values (in cm) as predicted by this model, which is consistent with the measurement (for the values within the range) if one unit of the left axis is assumed to correspond to an impact parameter of about 100 cm. |
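The chord model quoted in this caption can be checked with a short geometric sketch. The snippet below is only an illustration: the function names and the value of `R_MS_CM` are hypothetical placeholders (the caption does not state the radius), and the track is idealized as a straight line with no bending in the magnetic field.

```python
import math

def dxy_model(phi_in, phi_out, r_ms):
    """Chord model from the caption: |R_MS * cos((phi_in - phi_out) / 2)|."""
    return abs(r_ms * math.cos((phi_in - phi_out) / 2.0))

def chord_crossings(d, r_ms):
    """Azimuths at which the straight line y = d crosses a circle of radius r_ms."""
    x = math.sqrt(r_ms**2 - d**2)
    return math.atan2(d, -x), math.atan2(d, x)

# Hypothetical measurement radius in cm; an assumption, not a value from the paper.
R_MS_CM = 500.0

for d_true in (0.0, 100.0, 250.0):
    phi_in, phi_out = chord_crossings(d_true, R_MS_CM)
    d_model = dxy_model(phi_in, phi_out, R_MS_CM)
    print(f"true d_xy = {d_true:6.1f} cm, model d_xy = {d_model:6.1f} cm")
```

For a straight track, the two crossing points subtend an angle of $ \pi - 2\arcsin(d_{xy}/R_{MS}) $ at the origin, so half of that angle has cosine $ d_{xy}/R_{MS} $ and the model returns the input impact parameter.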

png pdf |

Figure 131:

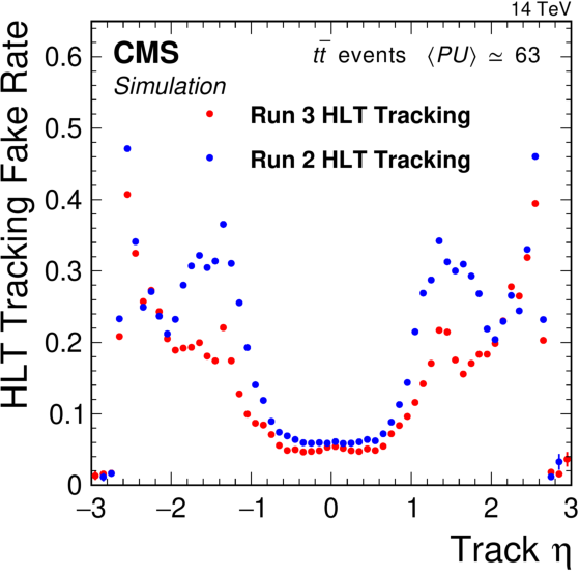

Tracking efficiency for the Run 2 HLT tracking (blue) and the Run 3 HLT single-iteration tracking (red) as a function of the simulated track $ p_{\mathrm{T}} $ (upper left) and track $ \eta $ (upper right). Only simulated tracks with $ |\eta| < $ 3.0 are considered in the efficiency measurement, with $ p_{\mathrm{T}} > $ 0.4 (0.9) GeV required for the upper left (right) plots. The tracking fake rate (lower) is shown as a function of the reconstructed track $ \eta $ for the Run 2 HLT tracking (blue) and the Run 3 HLT single-iteration tracking (red). |

png pdf |

Figure 131-a:

Tracking efficiency for the Run 2 HLT tracking (blue) and the Run 3 HLT single-iteration tracking (red) as a function of the simulated track $ p_{\mathrm{T}} $. Only simulated tracks with $ |\eta| < $ 3.0 are considered in the efficiency measurement, with $ p_{\mathrm{T}} > $ 0.4 GeV required. |

png pdf |

Figure 131-b:

Tracking efficiency for the Run 2 HLT tracking (blue) and the Run 3 HLT single-iteration tracking (red) as a function of the simulated track $ \eta $. Only simulated tracks with $ |\eta| < $ 3.0 are considered in the efficiency measurement, with $ p_{\mathrm{T}} > $ 0.9 GeV required. |

png pdf |

Figure 131-c:

The tracking fake rate as a function of the reconstructed track $ \eta $ for the Run 2 HLT tracking (blue) and the Run 3 HLT single-iteration tracking (red). |

png pdf |

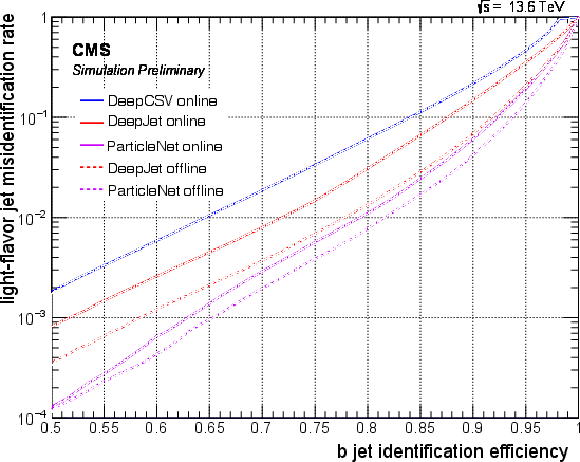

Figure 132:

Light-flavor jet misidentification rate versus the b jet efficiency for the various b tagging algorithms. The solid curves show the performance of the DeepCSV (blue), DeepJet (red), and ParticleNet (magenta) algorithms in the HLT. The dashed curves show the corresponding offline performance for DeepJet (red) and ParticleNet (magenta) taggers using offline reconstruction and training. |

png pdf |

Figure 133:

The HLT rate allocation by physics group for the Run 3 menu deployed in November 2022, scaled to a luminosity of 2.0 $ \times$ 10$^{34}$ cm$^{-2}$s$^{-1}$. The total rate (blue bar) is the inclusive rate of all triggers used by a physics group and the pure rate (green bar) is the exclusive rate of all triggers unique to that group. The shared rate (orange bar) is the rate calculated by dividing the rate of each trigger equally among all physics groups that use it, before summing the total group rate. |
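The three rate definitions in this caption can be illustrated with a toy calculation. The sketch below is one possible reading, not the procedure used by CMS: the trigger names, group names, and event list are invented, the results are event counts rather than rates (dividing by the live time would give Hz), and the pure rate is assumed to count events in which every fired trigger is used by that group alone.

```python
# Hypothetical toy menu: which physics groups use each trigger path.
TRIGGER_GROUPS = {
    "HLT_A": {"Higgs"},
    "HLT_B": {"Higgs", "Top"},
    "HLT_C": {"Top"},
}

# Hypothetical toy events: the set of trigger paths that fired in each event.
EVENTS = [
    {"HLT_A"},
    {"HLT_A", "HLT_B"},
    {"HLT_B"},
    {"HLT_C"},
    {"HLT_B", "HLT_C"},
]

def group_rates(events, trigger_groups):
    """Return total, pure, and shared event counts per physics group."""
    groups = set().union(*trigger_groups.values())
    rates = {g: {"total": 0.0, "pure": 0.0, "shared": 0.0} for g in groups}
    for fired in events:
        users = [trigger_groups[t] for t in fired]
        for g in groups:
            # Total: the event fired at least one trigger used by the group.
            if any(g in u for u in users):
                rates[g]["total"] += 1
            # Pure (assumed reading): every fired trigger is unique to the group.
            if users and all(u == {g} for u in users):
                rates[g]["pure"] += 1
        # Shared: each trigger firing is split equally among the groups using it.
        for u in users:
            for g in u:
                rates[g]["shared"] += 1.0 / len(u)
    return rates

for group, r in sorted(group_rates(EVENTS, TRIGGER_GROUPS).items()):
    print(group, r)
```

With this definition the shared counts of all groups add up to the total number of trigger firings, which is why the shared rate is a convenient way to apportion a menu without double counting a trigger used by several groups.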

png pdf |

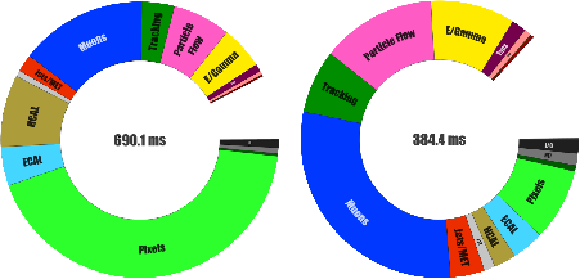

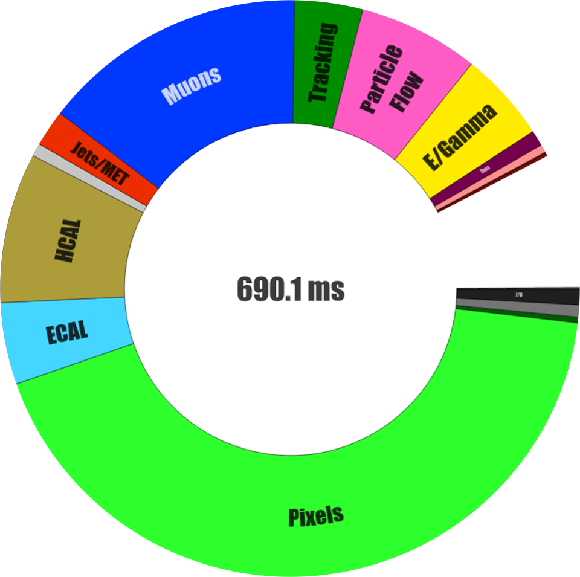

Figure 134:

Pie chart distributions of the processing time for the HLT reconstruction running only on CPUs (left) and offloading part of the reconstruction to GPUs (right). The slices represent the time spent in different physics object or detector reconstruction modules. The empty slice indicates the time spent outside of the individual algorithms. |

png |

Figure 134-a:

Pie chart distribution of the processing time for the HLT reconstruction running only on CPUs. The slices represent the time spent in different physics object or detector reconstruction modules. The empty slice indicates the time spent outside of the individual algorithms. |

png |

Figure 134-b:

Pie chart distribution of the processing time for the HLT reconstruction when offloading part of the reconstruction to GPUs. The slices represent the time spent in different physics object or detector reconstruction modules. The empty slice indicates the time spent outside of the individual algorithms. |

png pdf |

Figure 135:

The HLT rates for promptly reconstructed data streams (blue) and parked data (black) as a function of time during an LHC fill in 2023. |

png pdf |

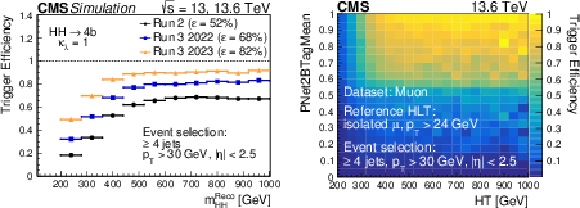

Figure 136:

Left: comparison of the trigger efficiency of the $ \mathrm{H}\mathrm{H}\to\mathrm{b}\overline{\mathrm{b}} $ trigger among the three different strategies used in Run 2 (black), 2022 (blue), and 2023 (orange) using the signal MC sample. Right: trigger efficiency of the $ \mathrm{H}\mathrm{H}\to\mathrm{b}\overline{\mathrm{b}} $ trigger using events collected by the single muon trigger in 2023. |

png pdf |

Figure 136-a:

Comparison of the trigger efficiency of the $ \mathrm{H}\mathrm{H}\to\mathrm{b}\overline{\mathrm{b}} $ trigger among the three different strategies used in Run 2 (black), 2022 (blue), and 2023 (orange) using the signal MC sample. |

png pdf |

Figure 136-b:

Trigger efficiency of the $ \mathrm{H}\mathrm{H}\to\mathrm{b}\overline{\mathrm{b}} $ trigger using events collected by the single muon trigger in 2023. |

png pdf |

Figure 137:

Timeline of the major data processing and computing software improvements put in production since 2010. |

png pdf |

Figure 138:

The evolution of the CMS computing model from a hierarchical (left) to fully connected structure (right). |

png pdf |

Figure 139:

Schematic diagram of the submission infrastructure, including multiple distributed central processing and production (WMAgent) and analysis (CRAB) job submission agents (schedds). Computing resources allocated from diverse origins (green boxes) are grouped into HTCondor pools (gray boxes), federated via workload flocking. The collector and negotiator agents (yellow boxes) keep the state of each pool and perform the workload-to-resource matchmaking. |

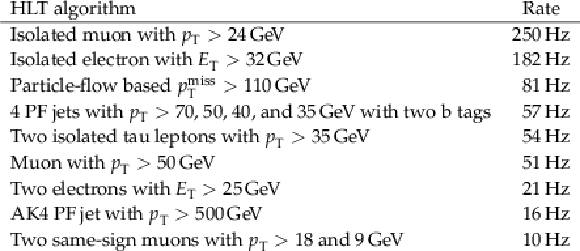

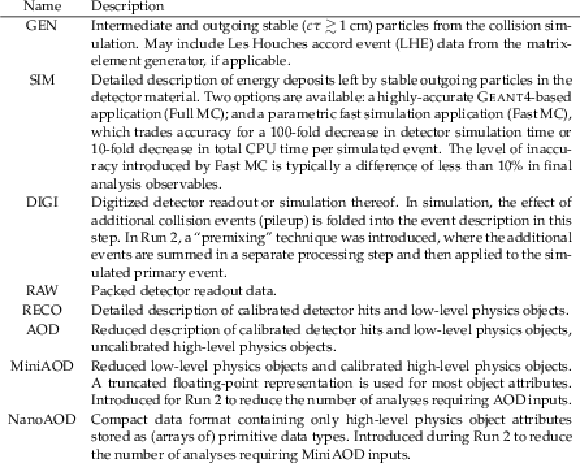

| Tables | |

png pdf |

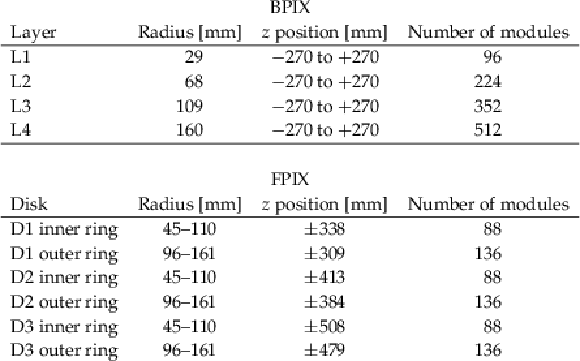

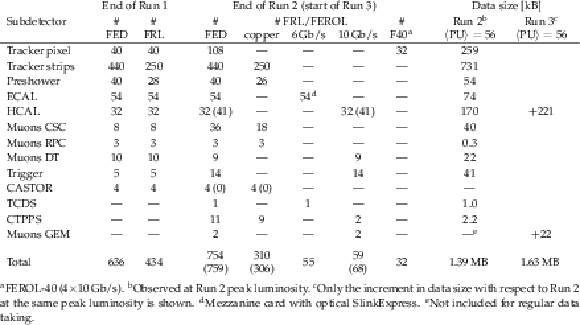

Table 1:

Summary of the average radius and $ z $ position, as well as the number of modules for the four BPIX layers and six FPIX rings for the Phase 1 pixel detector. |

png pdf |

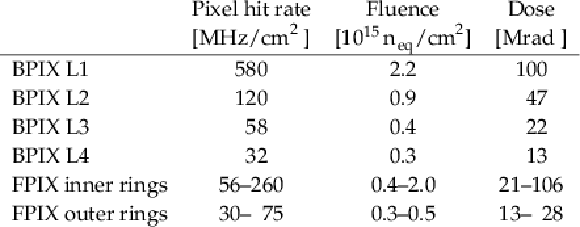

Table 2:

Expected hit rate, fluence, and radiation dose for the BPIX layers and FPIX rings. The hit rate corresponds to an instantaneous luminosity of 2.0 $ \times$ 10$^{34}$ cm$^{-2}$s$^{-1}$ [15]. The fluence and radiation dose are shown for integrated luminosities of 300 fb$ ^{-1} $ for the BPIX L1 and 500 fb$ ^{-1} $ for the other BPIX layers and FPIX disks, well beyond the expected integrated luminosities for the detectors at the end of Run 3, of 250 and 370 fb$ ^{-1} $, respectively. |

png pdf |

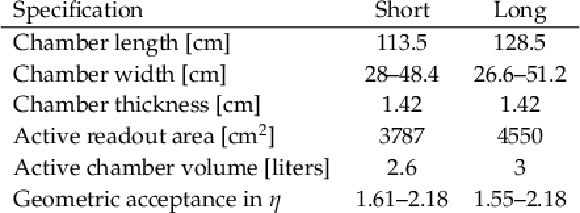

Table 3:

Parameters and design requirements for the PSI46dig and PROC600. |

png pdf |

Table 4:

Overview of module types used in the Phase 1 pixel detector. |

png pdf |

Table 5:

Radiation requirements for the Phase 1 upgrade. The HE numbers are for Run 3, while the HB and HF values correspond to the full HL-LHC duration. |

png pdf |

Table 6:

HCAL SiPM requirements for the Phase 1 upgrade. The design (measured) parameter values are shown. For the design (measured) value of the BV stability, the RMS (peak-to-peak) is quoted. |

png pdf |

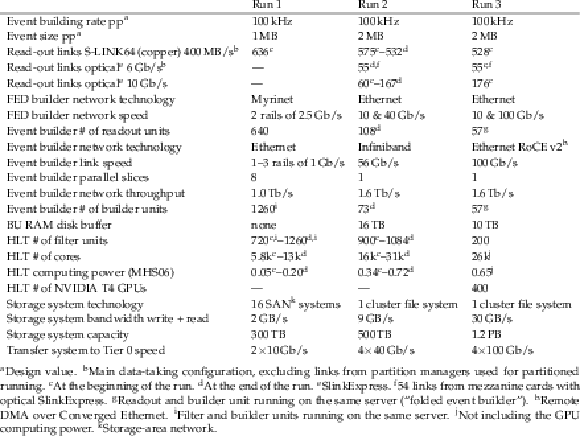

Table 7: