Compact Muon Solenoid

LHC, CERN

| CMS-MLG-23-001 ; CERN-EP-2023-303 | ||

| Portable acceleration of CMS computing workflows with coprocessors as a service | ||

| CMS Collaboration | ||

| 23 February 2024 | ||

| Comput. Softw. Big Sci. 8 (2024) 17 | ||

| Abstract: Computing demands for large scientific experiments, such as the CMS experiment at the CERN LHC, will increase dramatically in the next decades. To complement the future performance increases of software running on central processing units (CPUs), explorations of coprocessor usage in data processing hold great potential and interest. Coprocessors are a class of computer processors that supplement CPUs, often improving the execution of certain functions due to architectural design choices. We explore the approach of Services for Optimized Network Inference on Coprocessors (SONIC) and study the deployment of this as-a-service approach in large-scale data processing. In the studies, we take a data processing workflow of the CMS experiment and run the main workflow on CPUs, while offloading several machine learning (ML) inference tasks onto either remote or local coprocessors, specifically graphics processing units (GPUs). With experiments performed at Google Cloud, the Purdue Tier-2 computing center, and combinations of the two, we demonstrate the acceleration of these ML algorithms individually on coprocessors and the corresponding throughput improvement for the entire workflow. This approach can be easily generalized to different types of coprocessors and deployed on local CPUs without decreasing the throughput performance. We emphasize that the SONIC approach enables high coprocessor usage and enables the portability to run workflows on different types of coprocessors. | ||

| Links: e-print arXiv:2402.15366 [hep-ex] (PDF) ; CDS record ; inSPIRE record ; CADI line (restricted) ; | ||

| Figures | |

png pdf |

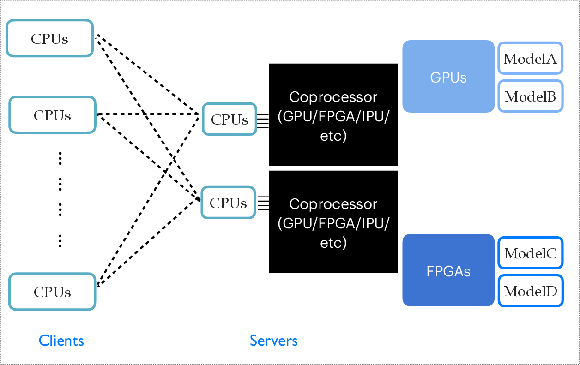

Figure 1:

An example inference as a service setup with multiple coprocessor servers. Clients usually run on CPUs, shown on the left side; servers hosting different models run on coprocessors, shown on the right side. |

png pdf |

Figure 2:

Illustration of the SONIC implementation of IaaS in CMSSW. The figure also shows the possibility of an additional load-balancing layer in the SONIC scheme. For example, if multiple coprocessor-enabled machines are used to host servers, a Kubernetes engine can be set up to distribute inference calls across the machines [84]. Image adapted from Ref. [63]. |

png pdf |

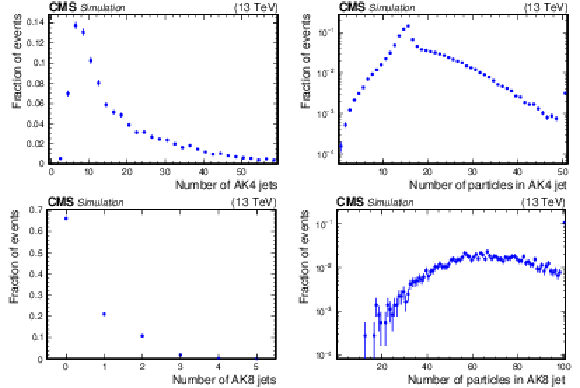

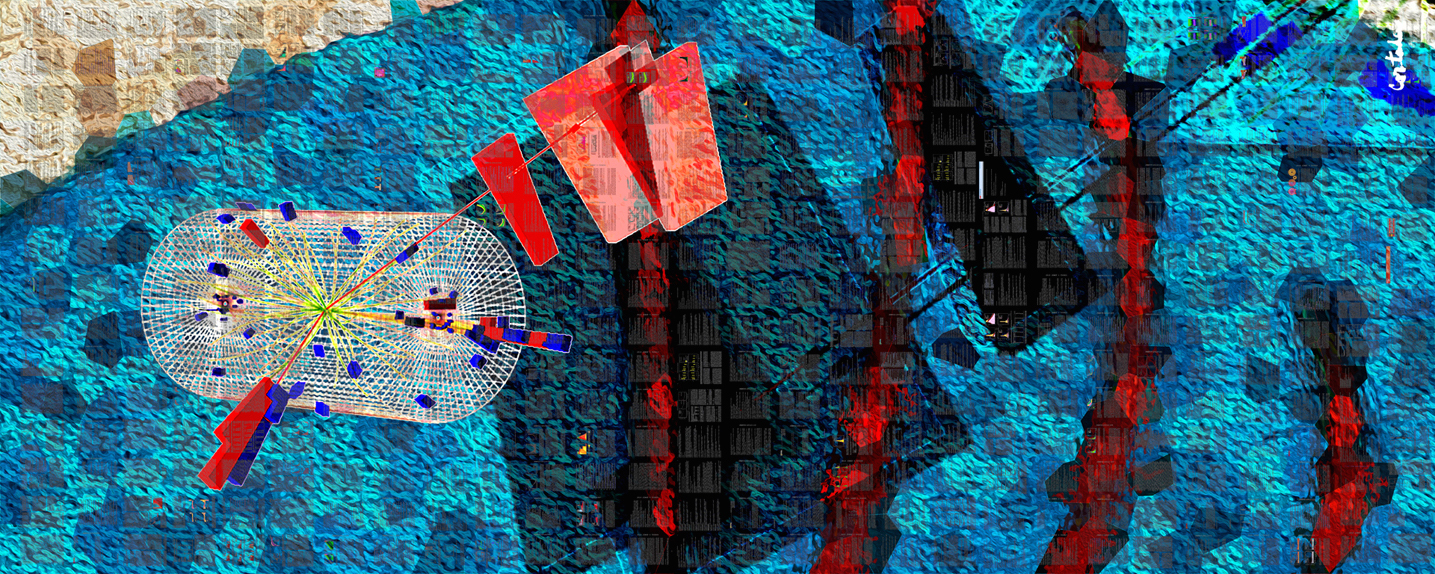

Figure 3:

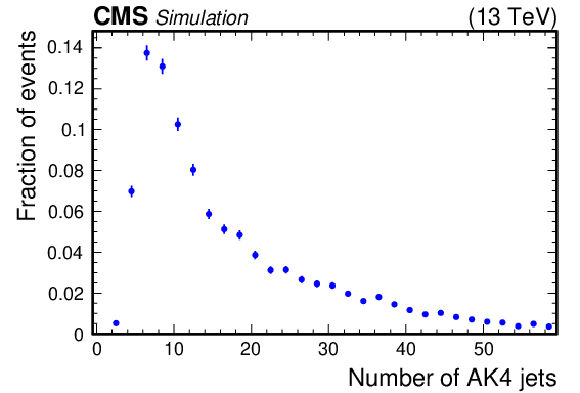

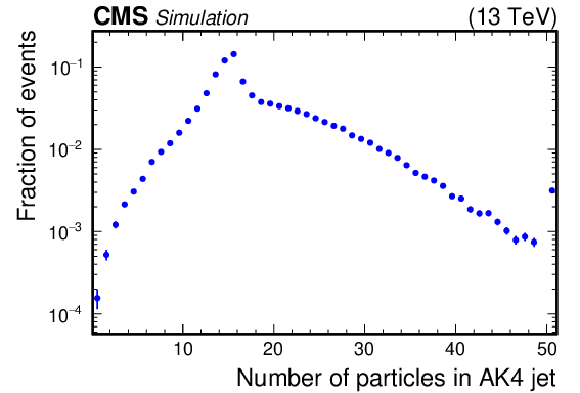

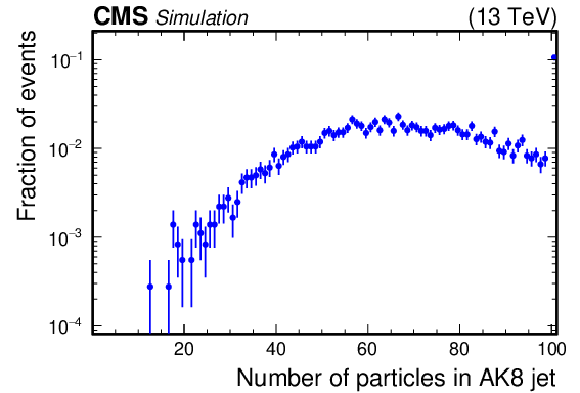

Illustration of the jet information in the Run 2 simulated $ \mathrm{t} \overline{\mathrm{t}} $ data set used in subsequent studies. Distributions of the number of jets per event (left) and the number of particles per jet (right) are shown for AK4 jets (upper) and AK8 jets (lower). For the distributions of the number of particles, the rightmost bin is an overflow bin. |

png pdf |

Figure 3-a:

Illustration of the jet information in the Run 2 simulated $ \mathrm{t} \overline{\mathrm{t}} $ data set used in subsequent studies. Distributions of the number of jets per event (left) and the number of particles per jet (right) are shown for AK4 jets (upper) and AK8 jets (lower). For the distributions of the number of particles, the rightmost bin is an overflow bin. |

png pdf |

Figure 3-b:

Illustration of the jet information in the Run 2 simulated $ \mathrm{t} \overline{\mathrm{t}} $ data set used in subsequent studies. Distributions of the number of jets per event (left) and the number of particles per jet (right) are shown for AK4 jets (upper) and AK8 jets (lower). For the distributions of the number of particles, the rightmost bin is an overflow bin. |

png pdf |

Figure 3-c:

Illustration of the jet information in the Run 2 simulated $ \mathrm{t} \overline{\mathrm{t}} $ data set used in subsequent studies. Distributions of the number of jets per event (left) and the number of particles per jet (right) are shown for AK4 jets (upper) and AK8 jets (lower). For the distributions of the number of particles, the rightmost bin is an overflow bin. |

png pdf |

Figure 3-d:

Illustration of the jet information in the Run 2 simulated $ \mathrm{t} \overline{\mathrm{t}} $ data set used in subsequent studies. Distributions of the number of jets per event (left) and the number of particles per jet (right) are shown for AK4 jets (upper) and AK8 jets (lower). For the distributions of the number of particles, the rightmost bin is an overflow bin. |

png pdf |

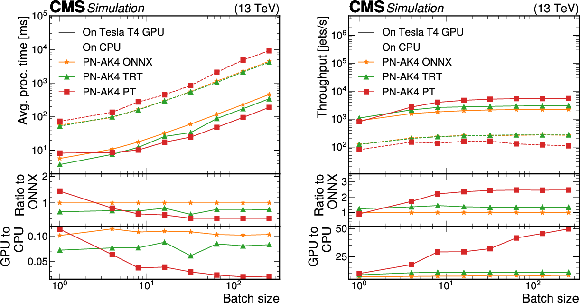

Figure 4:

Average processing time (left) and throughput (right) of the PN-AK4 algorithm served by a TRITON server running on one NVIDIA Tesla T4 GPU, presented as a function of the batch size. Values are shown for different inference backends: ONNX (orange), ONNX with TRT (green), and PYTORCH (red). Performance values for these backends when running on a CPU-based TRITON server are given in dashed lines, with the same color-to-backend correspondence. |

png pdf |

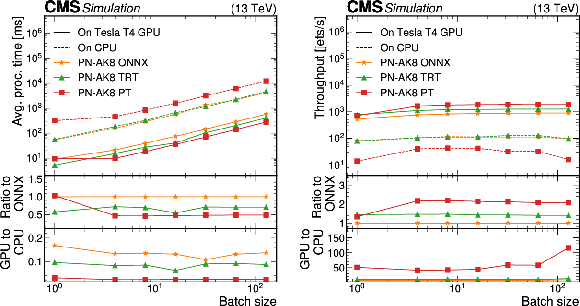

Figure 5:

Average processing time (left) and throughput (right) of one of the AK8 ParticleNet algorithms served by a TRITON server running on one NVIDIA Tesla T4 GPU, presented as a function of the batch size. Values are shown for different inference backends: ONNX (orange), ONNX with TRT (green), and PYTORCH (red). Performance values for these backends when running on a CPU-based TRITON server are given in dashed lines, with the same color-to-backend correspondence. |

png pdf |

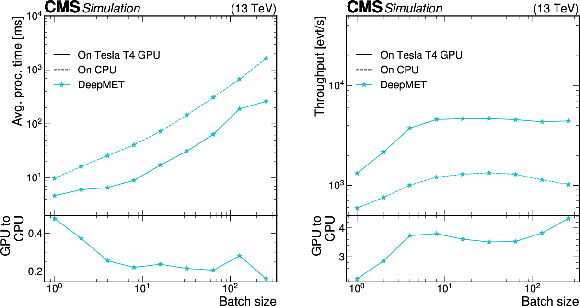

Figure 6:

Average processing time (left) and throughput (right) of the DeepMET algorithm served by a TRITON server running on one NVIDIA Tesla T4 GPU, presented as a function of the batch size. Similar performance when running on a CPU-based TRITON server is given in dashed lines. |

png pdf |

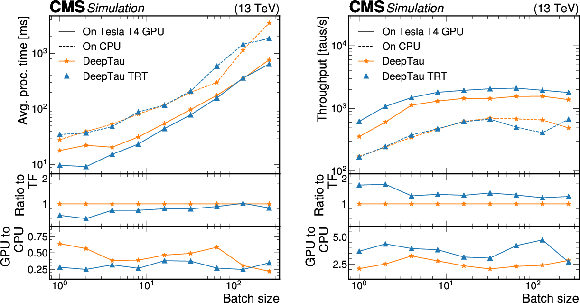

Figure 7:

Average processing time (left) and throughput (right) of the DeepTau algorithm served by a TRITON server running on one NVIDIA Tesla T4 GPU, presented as a function of the batch size. Values are shown for different inference backends: TENSORFLOW (TF) (orange), and TENSORFLOW with TRT (blue). Performance values for these backends when running on a CPU-based TRITON server are given in dashed lines, with the same color-to-backend correspondence. |

png pdf |

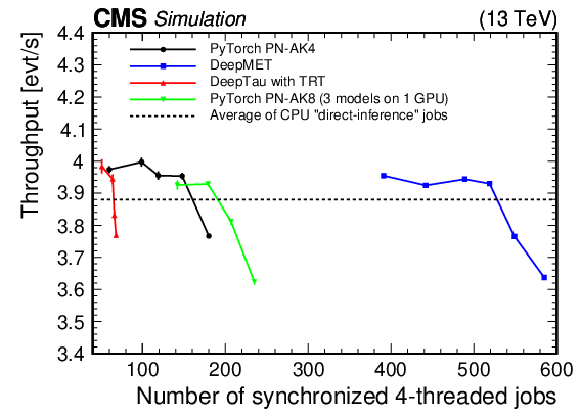

Figure 8:

The GPU saturation scan performed in GCP, where the per-event throughput is shown as a function of the number of parallel CPU clients for the PYTORCH version of PN-AK4 (black), DeepMET (blue), DeepTau optimized with TRT (red), and all PYTORCH versions of PN-AK8 on a single GPU (green). Each of the parallel jobs was run in a four-threaded configuration. The CPU tasks ran in four-threaded GCP VMs, and the TRITON servers were hosted on separate single GPU VMs also in GCP. The line for direct-inference jobs represents the baseline configuration measured by running all algorithms without the use of the SONIC approach or any GPUs. Each solid line represents running one of the specified models on GPU via the SONIC approach. |

png pdf |

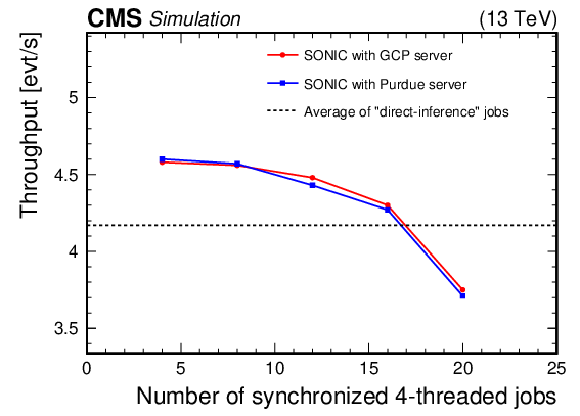

Figure 9:

Production tests across different sites. The CPU tasks always run at Purdue, while the servers with GPU inference tasks for all the models run at Purdue (blue) and at GCP in Iowa (red). The throughput values are higher than those shown in Fig. 8 because the CPUs at Purdue are more powerful than those comprising the GCP VMs. |

png pdf |

Figure 10:

Scale-out test results on Google Cloud. The average throughput of the workflow with the SONIC approach is 4.0 events/s (solid blue), while the average throughput of the direct-inference workflow is 3.5 events/s (dashed red). |

png pdf |

Figure 11:

Throughput (upper) and throughput ratio (lower) between the SONIC approach and direct inference in the local CPU tests at the Purdue Tier-2 cluster. To ensure the CPUs are always saturated, the number of threads per job multiplied by the number of jobs is set to 20. |

| Tables | |

png pdf |

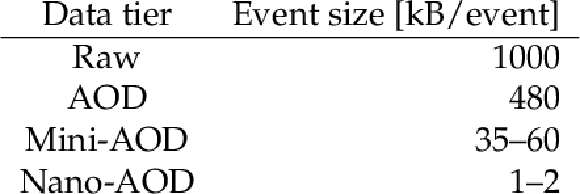

Table 1:

Average event size of different CMS data tiers with Run 2 data-taking conditions [81,69,78]. |

png pdf |

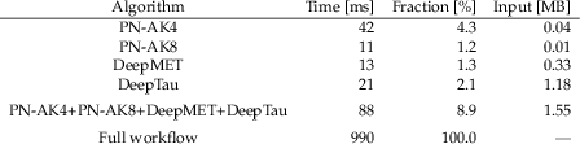

Table 2:

The average time of the Mini-AOD processing (without the SONIC approach) with one thread on one single CPU core. The average processing times of the algorithms supported by the SONIC approach are listed in the column labeled ``Time.'' The column labeled ``Fraction'' refers to the fraction of the full workflow's processing time that the algorithm in question consumes. Together, the algorithms currently supported by the SONIC approach consume about 9% of the total processing time. This table also contains the expected server input for each model type created per event in Run 2 $ \mathrm{t} \overline{\mathrm{t}} $ events in the column labeled ``Input.'' |

png pdf |

Table 3:

Memory usage with direct inference and the SONIC approach in the local CPU tests at the Purdue Tier-2 cluster. The last column is calculated as the sum of client and server memory usage divided by the direct inference memory usage. To ensure the CPUs are always saturated, the number of threads $ n_\text{T} $ per job multiplied by the number of jobs is set to 20. |

| Summary |

| Within the next decade, the data-taking rate at the LHC will increase dramatically, straining the expected computing resources of the LHC experiments. At the same time, more algorithms that run on these resources will be converted into either machine learning or domain algorithms that are easily accelerated with the use of coprocessors, such as graphics processing units (GPUs). By pursuing heterogeneous architectures, it is possible to alleviate potential shortcomings of available central processing unit (CPU) resources. Inference as a service (IaaS) is a promising scheme to integrate coprocessors into CMS computing workflows. In IaaS, client code simply assembles the input data for an algorithm, sends that input to an inference server running either locally or remotely, and retrieves output from the server. The implementation of IaaS discussed throughout this paper is called the Services for Optimized Network Inference on Coprocessors (SONIC) approach, which employs NVIDIA TRITON Inference Servers to host models on coprocessors, as demonstrated here in studies on GPUs, CPUs, and Graphcore Intelligence Processing Units (IPUs). In this paper, the SONIC approach in the CMS software framework ( CMSSW ) is demonstrated in a sample Mini-AOD workflow, where algorithms for jet tagging, tau lepton identification, and missing transverse momentum regression are ported to run on inference servers. These algorithms account for nearly 10% of the total processing time per event in a simulated data set of top quark-antiquark events. After model profiling, which is used to optimize server performance and determine the needed number of GPUs for a given number of client jobs, the expected 10% decrease in per-event processing time was achieved in a large-scale test of Mini-AOD production with the SONIC approach that used about 10\,000 CPU cores and 100 GPUs. The network bandwidth is large enough to support high input-output model inference for the workflow tested, and it will be monitored as the fraction of algorithms using remote GPUs increases. In addition to meeting performance expectations, we demonstrated that the throughput results are not highly sensitive to the physical client-to-server distance, at least up to distances of hundreds of kilometers. Running inference through TRITON servers on local CPU resources does not affect the throughput compared with the standard approach of running inference directly on CPUs in the job thread. We also performed a test using GraphCore IPUs to demonstrate the flexibility of the SONIC approach. The SONIC approach for IaaS represents a flexible method to accelerate algorithms, which is increasingly valuable for LHC experiments. Using a realistic workflow, we highlighted many of the benefits of the SONIC approach, including the use of remote resources, workflow acceleration, and portability to different processor technologies. To make it a viable and robust paradigm for CMS computing in the future, additional studies are ongoing or planned for monitoring and mitigating potential issues such as excessive network and memory usage or server failures. |

| References | ||||

| 1 | L. Evans and P. Bryant | LHC machine | JINST 3 (2008) S08001 | |

| 2 | ATLAS Collaboration | The ATLAS experiment at the CERN Large Hadron Collider | JINST 3 (2008) S08003 | |

| 3 | CMS Collaboration | The CMS experiment at the CERN LHC | JINST 3 (2008) S08004 | |

| 4 | ATLAS Collaboration | Observation of a new particle in the search for the Standard Model Higgs boson with the ATLAS detector at the LHC | PLB 716 (2012) 1 | 1207.7214 |

| 5 | CMS Collaboration | Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC | PLB 716 (2012) 30 | CMS-HIG-12-028 1207.7235 |

| 6 | CMS Collaboration | Observation of a new boson with mass near 125 GeV in pp collisions at $ \sqrt{s} = $ 7 and 8 TeV | JHEP 06 (2013) 081 | CMS-HIG-12-036 1303.4571 |

| 7 | CMS Collaboration | Search for supersymmetry in proton-proton collisions at 13 TeV in final states with jets and missing transverse momentum | JHEP 10 (2019) 244 | CMS-SUS-19-006 1908.04722 |

| 8 | CMS Collaboration | Combined searches for the production of supersymmetric top quark partners in proton-proton collisions at $ \sqrt{s} = $ 13 TeV | EPJC 81 (2021) 970 | CMS-SUS-20-002 2107.10892 |

| 9 | CMS Collaboration | Search for higgsinos decaying to two Higgs bosons and missing transverse momentum in proton-proton collisions at $ \sqrt{s} = $ 13 TeV | JHEP 05 (2022) 014 | CMS-SUS-20-004 2201.04206 |

| 10 | CMS Collaboration | Search for supersymmetry in final states with two oppositely charged same-flavor leptons and missing transverse momentum in proton-proton collisions at $ \sqrt{s} = $ 13 TeV | JHEP 04 (2021) 123 | CMS-SUS-20-001 2012.08600 |

| 11 | ATLAS Collaboration | Search for squarks and gluinos in final states with hadronically decaying $ \tau $-leptons, jets, and missing transverse momentum using pp collisions at $ \sqrt{s} = $ 13 TeV with the ATLAS detector | PRD 99 (2019) 012009 | 1808.06358 |

| 12 | ATLAS Collaboration | Search for top squarks in events with a Higgs or Z boson using 139 fb$ ^{-1} $ of pp collision data at $ \sqrt{s}= $ 13 TeV with the ATLAS detector | EPJC 80 (2020) 1080 | 2006.05880 |

| 13 | ATLAS Collaboration | Search for charginos and neutralinos in final states with two boosted hadronically decaying bosons and missing transverse momentum in pp collisions at $ \sqrt{s} = $ 13 TeV with the ATLAS detector | PRD 104 (2021) 112010 | 2108.07586 |

| 14 | ATLAS Collaboration | Search for direct pair production of sleptons and charginos decaying to two leptons and neutralinos with mass splittings near the W-boson mass in $ \sqrt{s} = $ 13 TeV pp collisions with the ATLAS detector | JHEP 06 (2023) 031 | 2209.13935 |

| 15 | ATLAS Collaboration | Search for new phenomena in events with an energetic jet and missing transverse momentum in pp collisions at $ \sqrt{s}= $ 13 TeV with the ATLAS detector | PRD 103 (2021) 112006 | 2102.10874 |

| 16 | CMS Collaboration | Search for new particles in events with energetic jets and large missing transverse momentum in proton-proton collisions at $ \sqrt{s}= $ 13 TeV | JHEP 11 (2021) 153 | CMS-EXO-20-004 2107.13021 |

| 17 | ATLAS Collaboration | Search for new resonances in mass distributions of jet pairs using 139 fb$ ^{-1} $ of pp collisions at $ \sqrt{s}= $ 13 TeV with the ATLAS detector | JHEP 03 (2020) 145 | 1910.08447 |

| 18 | ATLAS Collaboration | Search for high-mass dilepton resonances using 139 fb$ ^{-1} $ of pp collision data collected at $ \sqrt{s}= $ 13 TeV with the ATLAS detector | PLB 796 (2019) 68 | 1903.06248 |

| 19 | CMS Collaboration | Search for narrow and broad dijet resonances in proton-proton collisions at $ \sqrt{s}= $ 13 TeV and constraints on dark matter mediators and other new particles | JHEP 08 (2018) 130 | CMS-EXO-16-056 1806.00843 |

| 20 | CMS Collaboration | Search for high mass dijet resonances with a new background prediction method in proton-proton collisions at $ \sqrt{s}= $ 13 TeV | JHEP 05 (2020) 033 | CMS-EXO-19-012 1911.03947 |

| 21 | CMS Collaboration | Search for resonant and nonresonant new phenomena in high-mass dilepton final states at $ \sqrt{s}= $ 13 TeV | JHEP 07 (2021) 208 | CMS-EXO-19-019 2103.02708 |

| 22 | O. Aberle et al. | High-Luminosity Large Hadron Collider (HL-LHC): Technical design report | CERN Yellow Rep. Monogr. 10 (2020) | |

| 23 | O. Brüning and L. Rossi, eds | The High Luminosity Large Hadron Collider: the new machine for illuminating the mysteries of universe | World Scientific, ISBN~978-981-4675-46-8, 978-981-4678-14-8 link |

|

| 24 | CMS Collaboration | The Phase-2 upgrade of the CMS level-1 trigger | CMS Technical Design Report CERN-LHCC-2020-004, CMS-TDR-021, 2020 CDS |

|

| 25 | ATLAS Collaboration | Technical design report for the Phase-II upgrade of the ATLAS TDAQ system | ATLAS Technical Design Report CERN-LHCC-2017-020, ATLAS-TDR-029, 2017 link |

|

| 26 | A. Ryd and L. Skinnari | Tracking triggers for the HL-LHC | ARNPS 70 (2020) 171 | 2010.13557 |

| 27 | ATLAS Collaboration | Operation of the ATLAS trigger system in Run 2 | JINST 15 (2020) P10004 | 2007.12539 |

| 28 | CMS Collaboration | The CMS trigger system | JINST 12 (2017) P01020 | CMS-TRG-12-001 1609.02366 |

| 29 | CMS Collaboration | The Phase-2 upgrade of the CMS data acquisition and high level trigger | CMS Technical Design Report CERN-LHCC-2021-007, CMS-TDR-022, 2021 CDS |

|

| 30 | CMS Offline Software and Computing Group | CMS Phase-2 computing model: Update document | CMS Note CMS-NOTE-2022-008, 2022 | |

| 31 | ATLAS Collaboration | ATLAS software and computing HL-LHC roadmap | LHCC Public Document CERN-LHCC-2022-005, LHCC-G-182, 2022 | |

| 32 | R. H. Dennard et al. | Design of ion-implanted MOSFET's with very small physical dimensions | IEEE J. Solid-, 1974 State Circuits 9 (1974) 256 |

|

| 33 | Graphcore | Intelligence processing unit | link | |

| 34 | Z. Jia, B. Tillman, M. Maggioni, and D. P. Scarpazza | Dissecting the Graphcore IPU architecture via microbenchmarking | 1912.03413 | |

| 35 | D. Guest, K. Cranmer, and D. Whiteson | Deep learning and its application to LHC physics | ARNPS 68 (2018) 161 | 1806.11484 |

| 36 | K. Albertsson et al. | Machine learning in high energy physics community white paper | JPCS 1085 (2018) 022008 | 1807.02876 |

| 37 | D. Bourilkov | Machine and deep learning applications in particle physics | Int. J. Mod. Phys. A 34 (2020) 1930019 | 1912.08245 |

| 38 | A. J. Larkoski, I. Moult, and B. Nachman | Jet substructure at the Large Hadron Collider: A review of recent advances in theory and machine learning | Phys. Rept. 841 (2020) 1 | 1709.04464 |

| 39 | Feickert, Matthew and Nachman, Benjamin | A living review of machine learning for particle physics | 2102.02770 | |

| 40 | P. Harris et al. | Physics community needs, tools, and resources for machine learning | in Proc. 2021 US Community Study on the Future of Particle Physics, 2022 link |

2203.16255 |

| 41 | C. Savard et al. | Optimizing high throughput inference on graph neural networks at shared computing facilities with the NVIDIA Triton Inference Server | Accepted by Comput. Softw. Big Sci, 2023 | 2312.06838 |

| 42 | S. Farrell et al. | Novel deep learning methods for track reconstruction | in 4th International Workshop Connecting The Dots (2018) | 1810.06111 |

| 43 | S. Amrouche et al. | The Tracking Machine Learning challenge: Accuracy phase | Springer Cham, 4, 2019 link |

1904.06778 |

| 44 | X. Ju et al. | Performance of a geometric deep learning pipeline for HL-LHC particle tracking | EPJC 81 (2021) 876 | 2103.06995 |

| 45 | G. DeZoort et al. | Charged particle tracking via edge-classifying interaction networks | CSBS 5 (2021) 26 | 2103.16701 |

| 46 | S. R. Qasim, J. Kieseler, Y. Iiyama, and M. Pierini | Learning representations of irregular particle-detector geometry with distance-weighted graph networks | EPJC 79 (2019) 608 | 1902.07987 |

| 47 | J. Kieseler | Object condensation: one-stage grid-free multi-object reconstruction in physics detectors, graph and image data | EPJC 80 (2020) 886 | 2002.03605 |

| 48 | CMS Collaboration | GNN-based end-to-end reconstruction in the CMS Phase 2 high-granularity calorimeter | JPCS 2438 (2023) 012090 | 2203.01189 |

| 49 | J. Pata et al. | MLPF: Efficient machine-learned particle-flow reconstruction using graph neural networks | EPJC 81 (2021) 381 | 2101.08578 |

| 50 | CMS Collaboration | Machine learning for particle flow reconstruction at CMS | JPCS 2438 (2023) 012100 | 2203.00330 |

| 51 | F. Mokhtar et al. | Progress towards an improved particle flow algorithm at CMS with machine learning | in Proc. 21st Intern. Workshop on Advanced Computing and Analysis Techniques in Physics Research: AI meets Reality, 2023 | 2303.17657 |

| 52 | F. A. Di Bello et al. | Reconstructing particles in jets using set transformer and hypergraph prediction networks | EPJC 83 (2023) 596 | 2212.01328 |

| 53 | J. Pata et al. | Improved particle-flow event reconstruction with scalable neural networks for current and future particle detectors | 2309.06782 | |

| 54 | E. A. Moreno et al. | JEDI-net: a jet identification algorithm based on interaction networks | EPJC 80 (2020) 58 | 1908.05318 |

| 55 | H. Qu and L. Gouskos | ParticleNet: Jet tagging via particle clouds | PRD 101 (2020) 056019 | 1902.08570 |

| 56 | E. A. Moreno et al. | Interaction networks for the identification of boosted $ \mathrm{H}\to\mathrm{b}\overline{\mathrm{b}} $ decays | PRD 102 (2020) 012010 | 1909.12285 |

| 57 | E. Bols et al. | Jet flavour classification using DeepJet | JINST 15 (2020) P12012 | 2008.10519 |

| 58 | CMS Collaboration | Identification of heavy, energetic, hadronically decaying particles using machine-learning techniques | JINST 15 (2020) P06005 | CMS-JME-18-002 2004.08262 |

| 59 | H. Qu, C. Li, and S. Qian | Particle transformer for jet tagging | in Proc. 39th Intern. Conf. on Machine Learning, K. Chaudhuri et al., eds., volume 162, 2022 | 2202.03772 |

| 60 | CMS Collaboration | Muon identification using multivariate techniques in the CMS experiment in proton-proton collisions at $ \sqrt{s} = $ 13 TeV | Submitted to JINST, 2023 | CMS-MUO-22-001 2310.03844 |

| 61 | J. Duarte et al. | FPGA-accelerated machine learning inference as a service for particle physics computing | CSBS 3 (2019) 13 | 1904.08986 |

| 62 | D. Rankin et al. | FPGAs-as-a-service toolkit (FaaST) | in Proc. 2020 IEEE/ACM Intern. Workshop on Heterogeneous High-performance Reconfigurable Computing (H2RC) link |

|

| 63 | J. Krupa et al. | GPU coprocessors as a service for deep learning inference in high energy physics | MLST 2 (2021) 035005 | 2007.10359 |

| 64 | M. Wang et al. | GPU-accelerated machine learning inference as a service for computing in neutrino experiments | Front. Big Data 3 (2021) 604083 | 2009.04509 |

| 65 | T. Cai et al. | Accelerating machine learning inference with GPUs in ProtoDUNE data processing | Comput. Softw. Big Sci. 7 (2023) 11 | 2301.04633 |

| 66 | A. Gunny et al. | Hardware-accelerated inference for real-time gravitational-wave astronomy | Nature Astron. 6 (2022) 529 | 2108.12430 |

| 67 | ALICE Collaboration | Real-time data processing in the ALICE high level trigger at the LHC | CPC 242 (2019) 25 | 1812.08036 |

| 68 | R. Aaij et al. | Allen: A high level trigger on GPUs for LHCb | CSBS 4 (2020) 7 | 1912.09161 |

| 69 | LHCb Collaboration | The LHCb upgrade I | 2305.10515 | |

| 70 | A. Bocci et al. | Heterogeneous reconstruction of tracks and primary vertices with the CMS pixel tracker | Front. Big Data 3 (2020) 601728 | 2008.13461 |

| 71 | CMS Collaboration | CMS high level trigger performance comparison on CPUs and GPUs | J. Phys. Conf. Ser. 2438 (2023) 012016 | |

| 72 | D. Vom Bruch | Real-time data processing with GPUs in high energy physics | JINST 15 (2020) C06010 | 2003.11491 |

| 73 | CMS Collaboration | Mini-AOD: A new analysis data format for CMS | JPCS 664 (2015) 7 | 1702.04685 |

| 74 | CMS Collaboration | CMS physics: Technical design report volume 1: Detector performance and software | CMS Technical Design Report CERN-LHCC-2006-001, CMS-TDR-8-1, 2006 CDS |

|

| 75 | CMS Collaboration | CMSSW on Github | link | |

| 76 | CMS Collaboration | Performance of the CMS Level-1 trigger in proton-proton collisions at $ \sqrt{s} = $ 13 TeV | JINST 15 (2020) P10017 | CMS-TRG-17-001 2006.10165 |

| 77 | CMS Collaboration | Electron and photon reconstruction and identification with the CMS experiment at the CERN LHC | JINST 16 (2021) P05014 | CMS-EGM-17-001 2012.06888 |

| 78 | CMS Collaboration | Description and performance of track and primary-vertex reconstruction with the CMS tracker | JINST 9 (2014) P10009 | CMS-TRK-11-001 1405.6569 |

| 79 | CMS Collaboration | Particle-flow reconstruction and global event description with the CMS detector | JINST 12 (2017) P10003 | CMS-PRF-14-001 1706.04965 |

| 80 | oneAPI | Threading Building Blocks | link | |

| 81 | A. Bocci et al. | Bringing heterogeneity to the CMS software framework | EPJWC 245 (2020) 05009 | 2004.04334 |

| 82 | CMS Collaboration | A further reduction in CMS event data for analysis: the NANOAOD format | EPJWC 214 (2019) 06021 | |

| 83 | CMS Collaboration | Extraction and validation of a new set of CMS PYTHIA8 tunes from underlying-event measurements | EPJC 80 (2020) 4 | CMS-GEN-17-001 1903.12179 |

| 84 | CMS Collaboration | Pileup mitigation at CMS in 13 TeV data | JINST 15 (2020) P09018 | CMS-JME-18-001 2003.00503 |

| 85 | CMS Collaboration | NANOAOD: A new compact event data format in CMS | EPJWC 245 (2020) 06002 | |

| 86 | NVIDIA | Triton Inference Server | link | |

| 87 | gRPC | A high performance, open source universal RPC framework | link | |

| 88 | Kubernetes | Kubernetes documentation | link | |

| 89 | K. Pedro et al. | SonicCore | link | |

| 90 | K. Pedro et al. | SonicTriton | link | |

| 91 | K. Pedro | SonicCMS | link | |

| 92 | A. M. Caulfield et al. | A cloud-scale acceleration architecture | in Proc. 49th Annual IEEE/ACM Intern. Symp. on Microarchitecture (MICRO), 2016 link |

|

| 93 | V. Kuznetsov | vkuznet/TFaaS: First public version | link | |

| 94 | V. Kuznetsov, L. Giommi, and D. Bonacorsi | MLaaS4HEP: Machine learning as a service for HEP | CSBS 5 (2021) 17 | 2007.14781 |

| 95 | KServe | Documentation website | link | |

| 96 | NVIDIA | Triton Inference Server README (release 22.08) | link | |

| 97 | A. Paszke et al. | PyTorch: An imperative style, high-performance deep learning library | Advances in Neural Information Processing Systems 3 (2019) 8024 link |

1912.01703 |

| 98 | NVIDIA | TensorRT | link | |

| 99 | ONNX | Open Neural Network Exchange (ONNX) | link | |

| 100 | M. Abadi et al. | TensorFlow: A system for large-scale machine learning | 1605.08695 | |

| 101 | T. Chen and C. Guestrin | XGBoost: A scalable tree boosting system | in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Association for Computing Machinery, 2016 link |

1603.02754 |

| 102 | NVIDIA | Triton Inference Server Model Analyzer | link | |

| 103 | P. Buncic et al. | CernVM -- a virtual software appliance for LHC applications | JPCS 219 (2010) 042003 | |

| 104 | S. D. Guida et al. | The CMS condition database system | JPCS 664 (2015) 042024 | |

| 105 | L. Bauerdick et al. | Using Xrootd to federate regional storage | JPCS 396 (2012) 042009 | |

| 106 | CMS Collaboration | Performance of missing transverse momentum reconstruction in proton-proton collisions at $ \sqrt{s}= $ 13 TeV using the CMS detector | JINST 14 (2019) P07004 | CMS-JME-17-001 1903.06078 |

| 107 | CMS Collaboration | ParticleNet producer in CMSSW | link | |

| 108 | CMS Collaboration | ParticleNet SONIC producer in CMSSW | link | |

| 109 | M. Cacciari, G. P. Salam, and G. Soyez | The anti-$ k_{\mathrm{T}} $ jet clustering algorithm | JHEP 04 (2008) 063 | 0802.1189 |

| 110 | M. Cacciari, G. P. Salam, and G. Soyez | FastJet user manual | EPJC 72 (2012) 1896 | 1111.6097 |

| 111 | CMS Collaboration | Performance of the ParticleNet tagger on small and large-radius jets at high level trigger in Run 3 | CMS Detector Performance Note CMS-DP-2023-021, 2023 CDS |

|

| 112 | CMS Collaboration | Identification of highly Lorentz-boosted heavy particles using graph neural networks and new mass decorrelation techniques | CMS Detector Performance Note CMS-DP-2020-002, 2020 CDS |

|

| 113 | CMS Collaboration | Mass regression of highly-boosted jets using graph neural networks | CMS Detector Performance Note CMS-DP-2021-017, 2021 CDS |

|

| 114 | Y. Feng | A new deep-neural-network-based missing transverse momentum estimator, and its application to W recoil | PhD thesis, University of Maryland, College Park, 2020 link |

|

| 115 | CMS Collaboration | Identification of hadronic tau lepton decays using a deep neural network | JINST 17 (2022) P07023 | CMS-TAU-20-001 2201.08458 |

| 116 | NVIDIA Corporation | NVIDIA T4 70W low profile PCIe GPU accelerator | ||

| 117 | B. Holzman et al. | HEPCloud, a New Paradigm for HEP Facilities: CMS Amazon Web Services Investigation | CSBS 1 (2017) 1 | 1710.00100 |

| 118 | I. Corporation | Intel 64 and IA-32 architectures software developer's manual | Intel Corporation, Santa Clara, 2023 | |

| 119 | SchedMD | Slurm workload manager | link | |

| 120 | Advanced Micro Devices, Inc. | AMD EPYC 7002 series processors power electronic health record solutions | Advanced Micro Devices, Inc., Santa Clara, 2020 | |

|

Compact Muon Solenoid LHC, CERN |

|

|

|

|

|

|