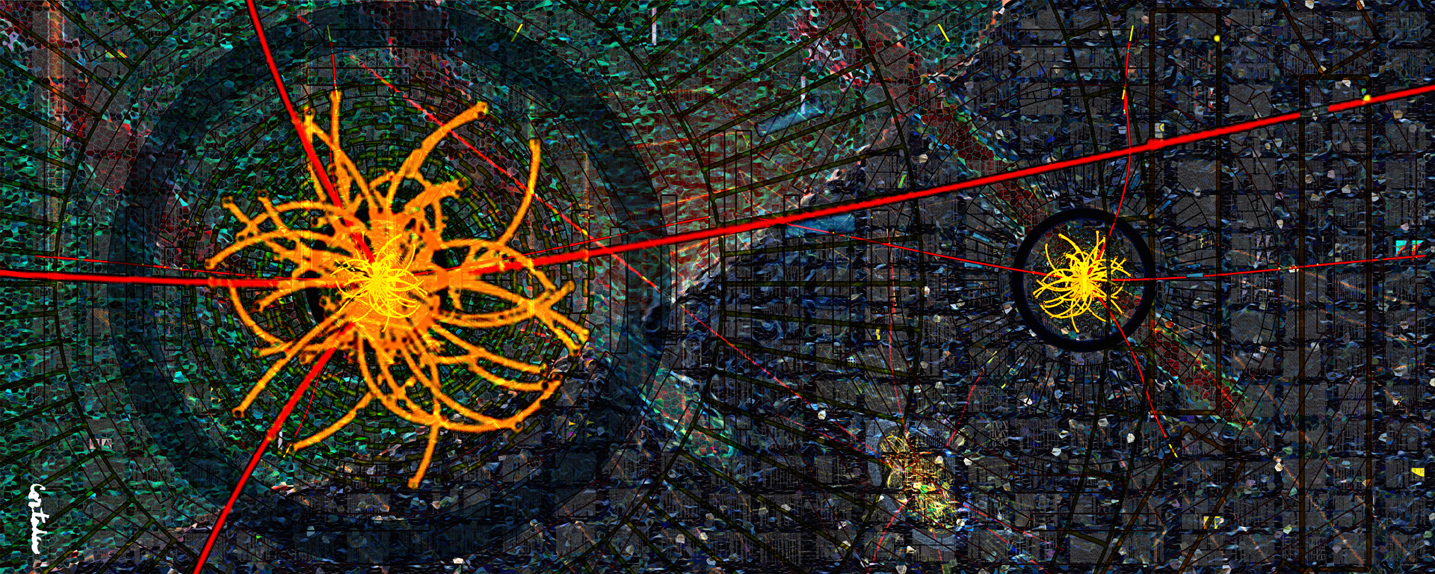

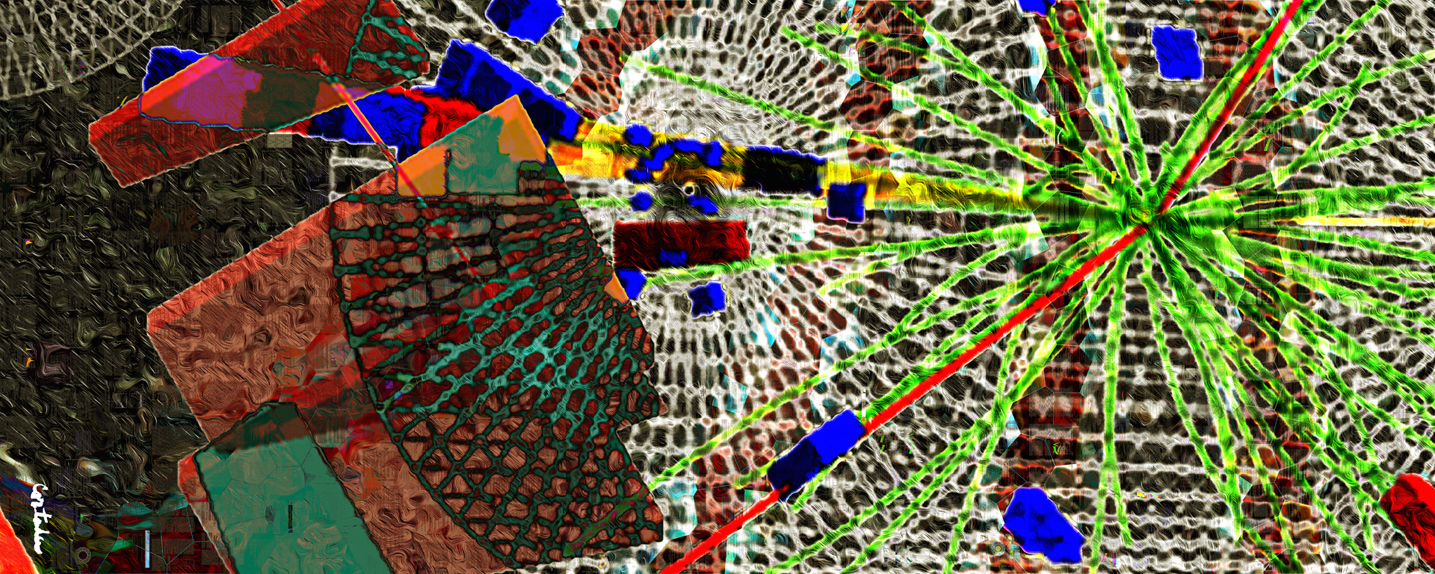

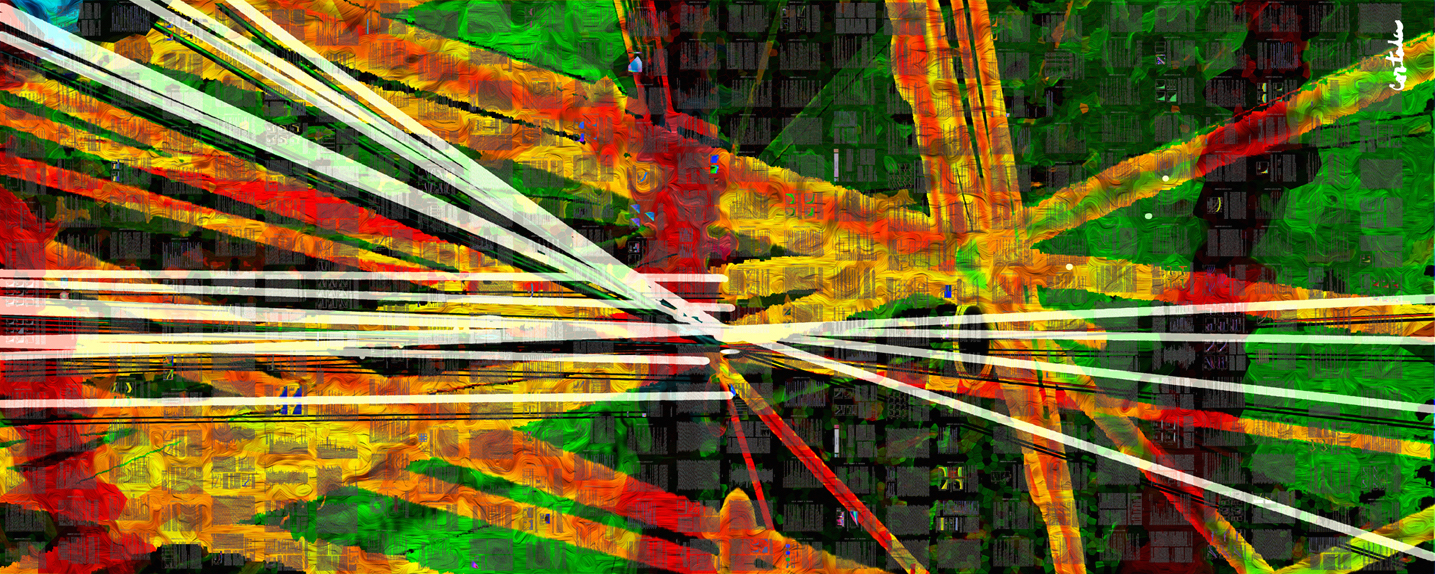

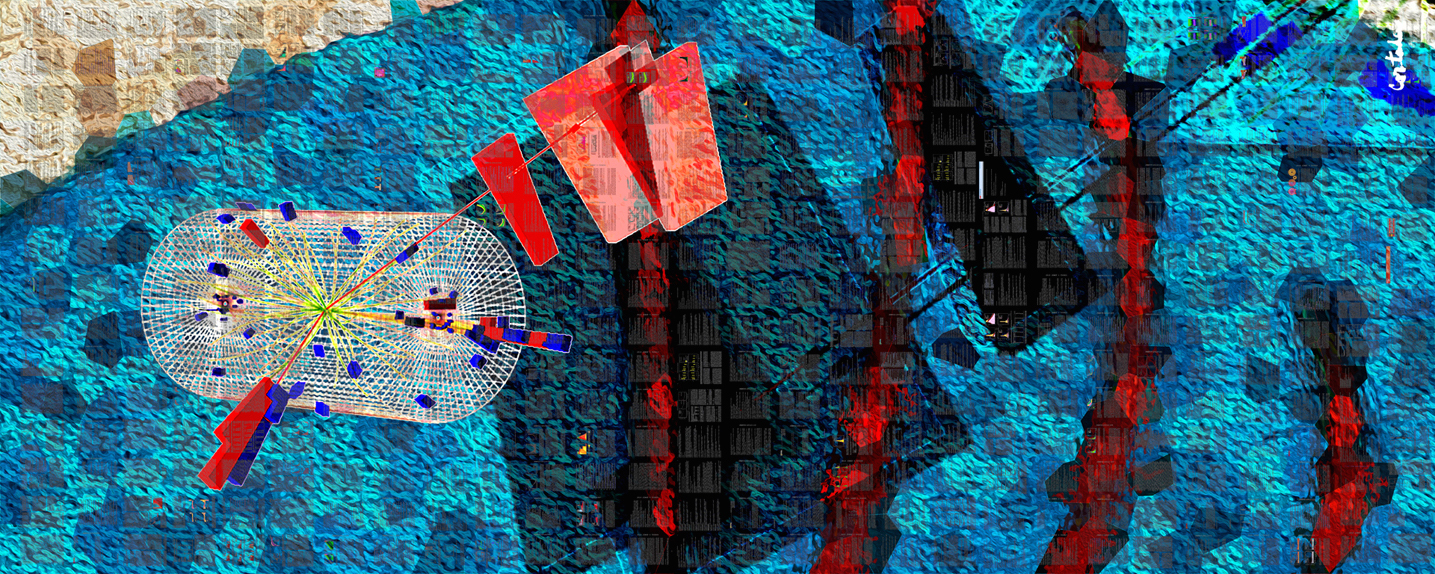

Compact Muon Solenoid

LHC, CERN

CMS-PAS-MLG-24-002

Wasserstein normalized autoencoder

CMS Collaboration

26 September 2024

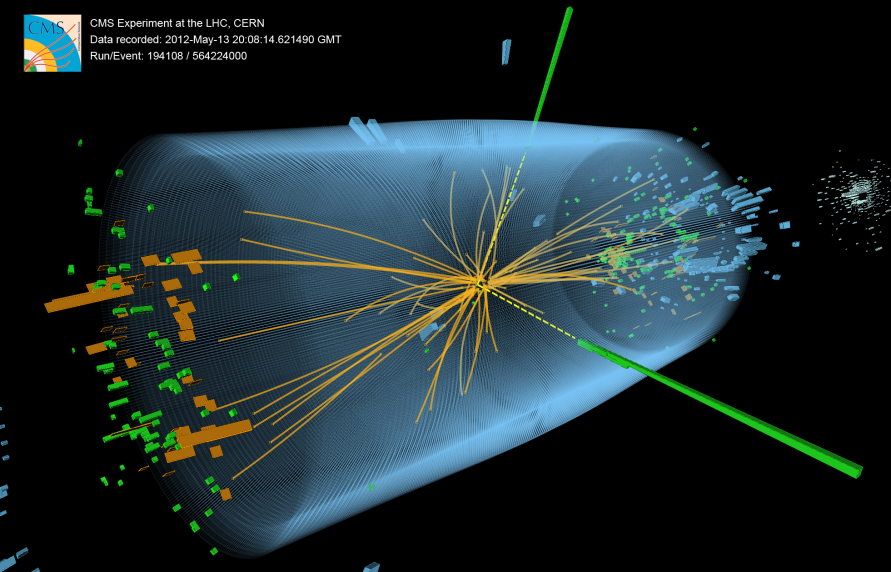

Abstract: A novel approach to unsupervised jet tagging is presented for the CMS experiment at the CERN LHC. The Wasserstein normalized autoencoder (WNAE) is a normalized probabilistic model that minimizes the Wasserstein distance between the probability distribution of the training data and the Boltzmann distribution of the reconstruction error of the autoencoder. Trained on jets of particles from simulated standard model processes, the WNAE is shown to learn the probability distribution of the input data, in a fully unsupervised fashion, in order to effectively identify new physics jets as anomalies. This algorithm has been developed and applied in the context of a recent search for semivisible jets. The model consistently demonstrates stable, convergent training and achieves strong classification performance across a wide range of signals, improving upon standard normalized autoencoders, while remaining agnostic to the signal. The WNAE directly tackles the problem of outlier reconstruction, a common failure mode of autoencoders in anomaly detection tasks.
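The abstract states the WNAE objective only in words. As a rough illustration of the idea, here is a toy one-dimensional sketch built entirely on our own assumptions; it is not the CMS implementation. The "autoencoder" is a hypothetical affine map r(x) = a·x + b, its reconstruction error E(x) = (x − r(x))² defines a Boltzmann density p(x) ∝ exp(−E(x)/T) on a bounded box, approximate samples from that density are drawn with Langevin dynamics, and the exact 1D Wasserstein-1 distance to the training data is computed from sorted values. The helper names, the temperature T, the box size, and the sampler settings are all hypothetical choices made for this example.

```python
import math
import random


def energy(x, a, b):
    """Reconstruction error E(x) = (x - r(x))^2 of a toy 1D 'autoencoder' r(x) = a*x + b."""
    return (x - (a * x + b)) ** 2


def energy_grad(x, a, b):
    """Analytic dE/dx for the toy energy above."""
    return 2.0 * ((1.0 - a) * x - b) * (1.0 - a)


def langevin_samples(a, b, temperature, n, steps=500, eps=0.01, box=5.0, seed=0):
    """Approximate samples from p(x) ∝ exp(-E(x)/T) via unadjusted Langevin dynamics.

    Samples are confined to [-box, box] by reflecting at the walls, so the Boltzmann
    density stays normalizable even when E(x) is flat (an assumption of this sketch).
    """
    rng = random.Random(seed)
    xs = [rng.uniform(-box, box) for _ in range(n)]  # uniform initialization (assumed)
    noise_scale = math.sqrt(2.0 * eps)
    for _ in range(steps):
        for i, x in enumerate(xs):
            # Langevin step: drift down the energy gradient plus Gaussian noise
            x = x - eps * energy_grad(x, a, b) / temperature + noise_scale * rng.gauss(0.0, 1.0)
            # Reflect back into the box if the step left it
            if x > box:
                x = 2.0 * box - x
            elif x < -box:
                x = -2.0 * box - x
            xs[i] = x
    return xs


def wasserstein_1d(u, v):
    """W1 distance between two equal-size 1D empirical samples: mean gap of sorted values."""
    assert len(u) == len(v)
    return sum(abs(p - q) for p, q in zip(sorted(u), sorted(v))) / len(u)


rng = random.Random(42)
data = [rng.gauss(0.0, 1.0) for _ in range(500)]  # stand-in for background training data

# Identity-like autoencoder: reconstructs every x perfectly, outliers included,
# so E = 0 everywhere and the Boltzmann samples spread over the whole box.
w_identity = wasserstein_1d(data, langevin_samples(a=1.0, b=0.0, temperature=0.5, n=500))

# Autoencoder whose error is low only near the data: E/T = x^2 / 2, i.e. exactly N(0, 1).
w_matched = wasserstein_1d(data, langevin_samples(a=0.5, b=0.0, temperature=0.5, n=500))

print(f"W1 identity AE: {w_identity:.3f}, W1 matched AE: {w_matched:.3f}")
```

In this toy, the identity-like model reconstructs everything, so its Boltzmann samples populate regions with no training data and the Wasserstein distance is large. Minimizing that distance with respect to the autoencoder parameters would push the reconstruction error up away from the data, which is the sense in which a normalized model counters outlier reconstruction. A realistic setup on multidimensional jet inputs would need an approximate Wasserstein estimator, since the sorting shortcut is exact only in one dimension.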


Links: CDS record (PDF); CADI line (restricted)

These preliminary results are superseded by this paper, submitted to Machine Learning: Science and Technology. The superseded preliminary plots can be found here.
