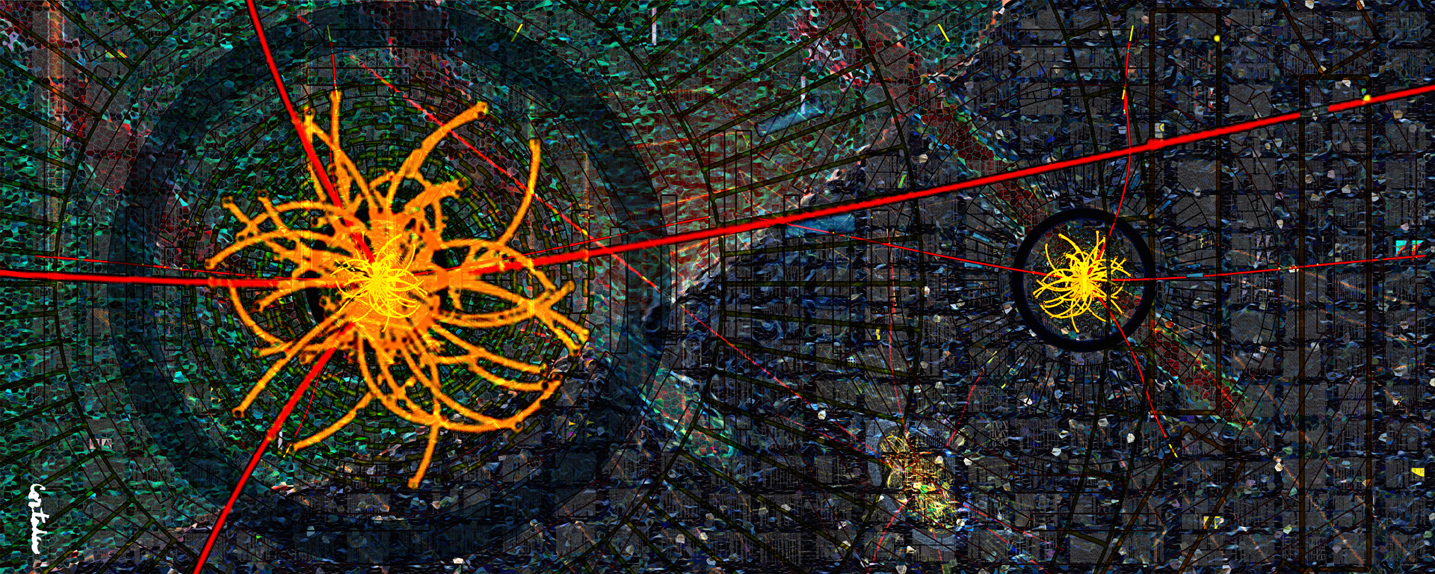

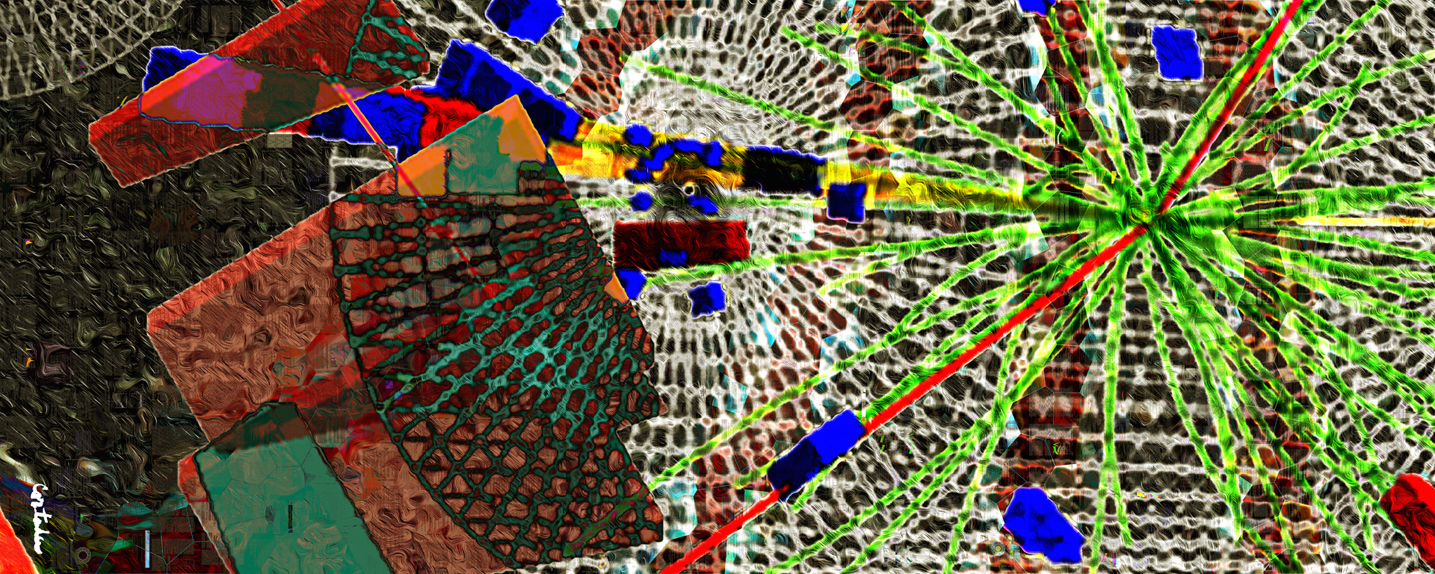

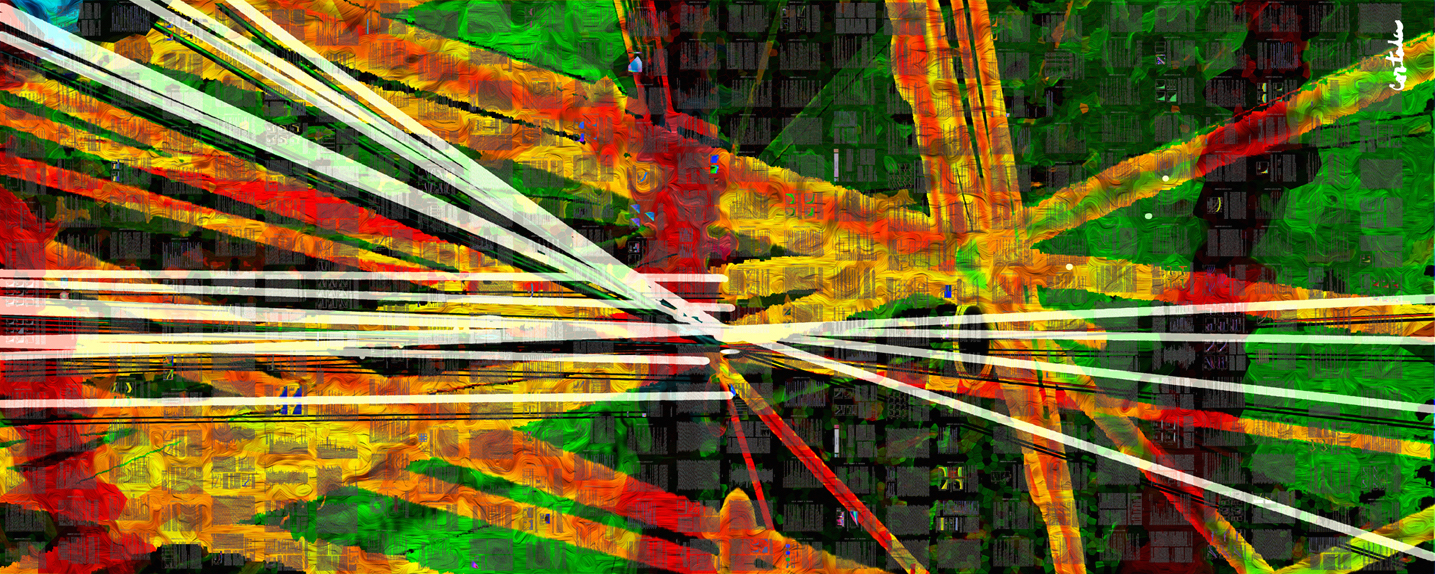

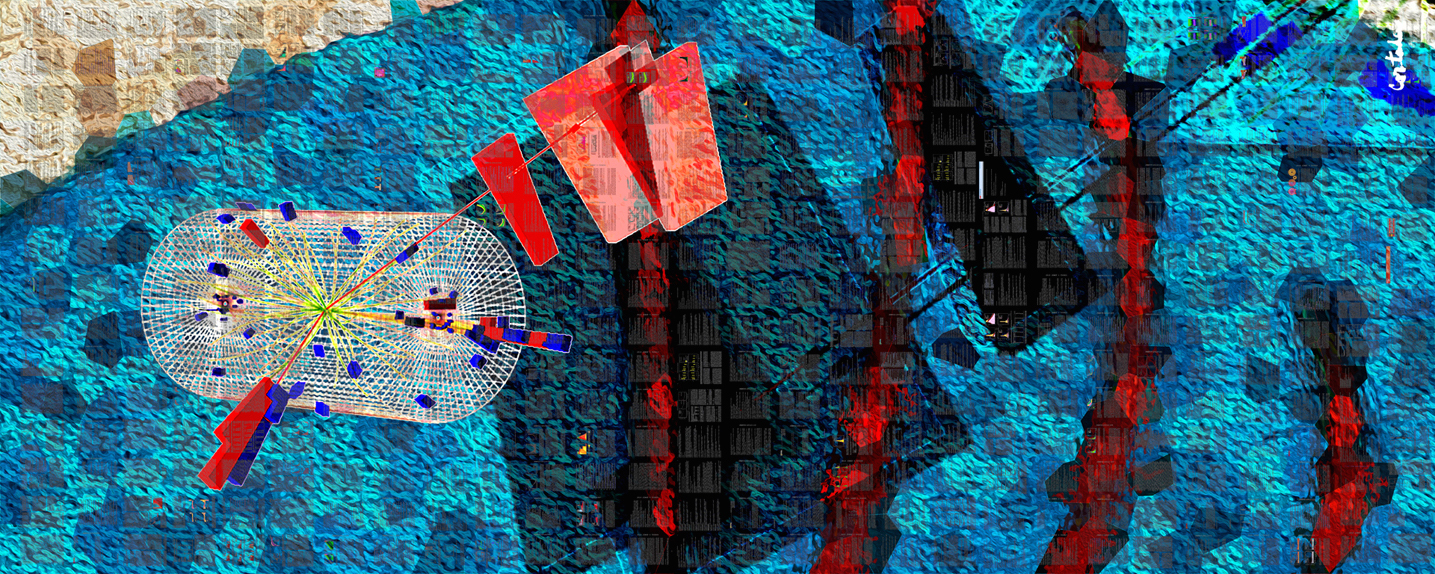

Compact Muon Solenoid

LHC, CERN

CMS-PAS-MLG-23-005

Development of systematic-aware neural network trainings for binned-likelihood-analyses at the LHC

CMS Collaboration

25 July 2024

Abstract: We demonstrate a neural network training that accounts for the effects of systematic variations of the underlying data model during the training process, and describe its extension to neural network multiclass classification. Trainings for binary and multiclass classification with seven output classes are performed, based on a comprehensive data model with 86 nontrivial shape-altering systematic variations, as used for a previous measurement. The neural network output functions are used to infer the signal strengths for inclusive Higgs boson production, as well as for Higgs boson production via gluon fusion ($ r_{\mathrm{ggH}} $) and vector boson fusion ($ r_{\mathrm{qqH}} $). Compared with a conventional training based on cross-entropy, we observe improvements of 12% and 16% in the sensitivity to $ r_{\mathrm{ggH}} $ and $ r_{\mathrm{qqH}} $, respectively.
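To make the notion of sensitivity to a signal strength concrete, the following is a minimal sketch of how an expected uncertainty on a signal strength $ r $ can be estimated from a binned histogram of a neural network output. It is not the training or the statistical model of this analysis: it assumes independent Poisson bins with expectation $ r\,s_i + b_i $, evaluates the Fisher information at $ r = 1 $, and ignores all systematic variations, which are exactly what the systematic-aware training is designed to account for. The function name `expected_sigma_r` and all yields are hypothetical.

```python
import numpy as np

def expected_sigma_r(signal, background):
    """Statistical-only estimate of the uncertainty on a signal strength r.

    Assumes independent Poisson bins with expectation r * s_i + b_i and
    evaluates the Fisher information at r = 1: I = sum_i s_i^2 / (s_i + b_i).
    Systematic uncertainties are NOT included (simplifying assumption).
    """
    s = np.asarray(signal, dtype=float)
    b = np.asarray(background, dtype=float)
    fisher_information = np.sum(s**2 / (s + b))
    return 1.0 / np.sqrt(fisher_information)

# Hypothetical yields in three bins of a classifier output histogram.
background = np.array([100.0, 50.0, 10.0])

# Two hypothetical classifiers with the same total signal yield (30 events):
# one spreads the signal evenly, the other concentrates it in the
# low-background bin.
poorly_separated = np.array([10.0, 10.0, 10.0])
well_separated = np.array([2.0, 8.0, 20.0])

sigma_poor = expected_sigma_r(poorly_separated, background)
sigma_good = expected_sigma_r(well_separated, background)

print(f"expected sigma_r, poorly separated: {sigma_poor:.3f}")
print(f"expected sigma_r, well separated:   {sigma_good:.3f}")
```

In this toy setting, the histogram that concentrates signal in low-background bins gives the smaller expected uncertainty. Once shape-altering systematic variations enter the likelihood, separation alone no longer determines the uncertainty, which is the motivation for making the training aware of those variations.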


Links: CDS record (PDF); CADI line (restricted)

These preliminary results are superseded by this paper, submitted to CSBS. The superseded preliminary plots can be found here.
