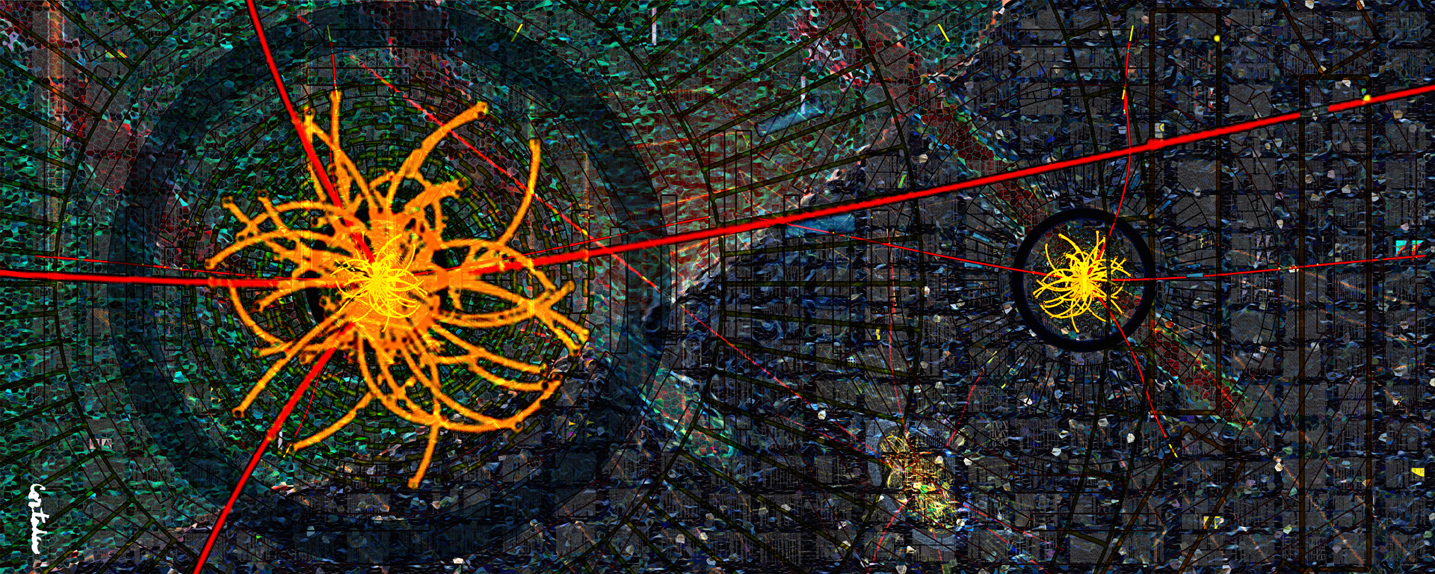

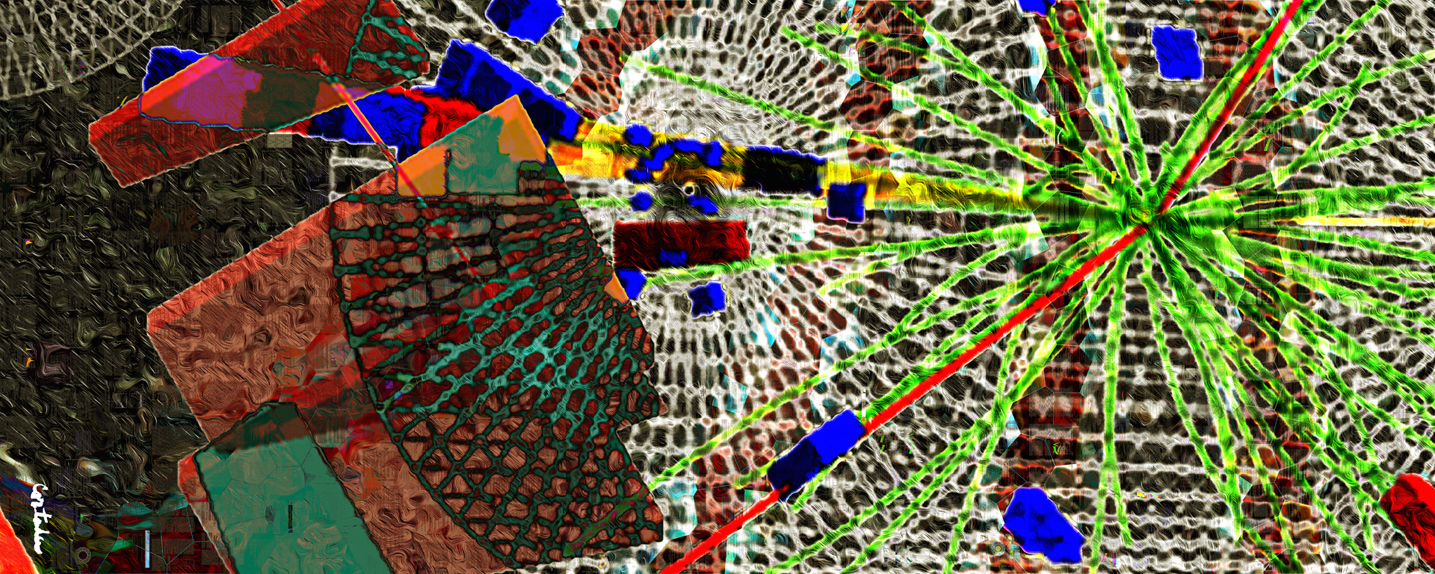

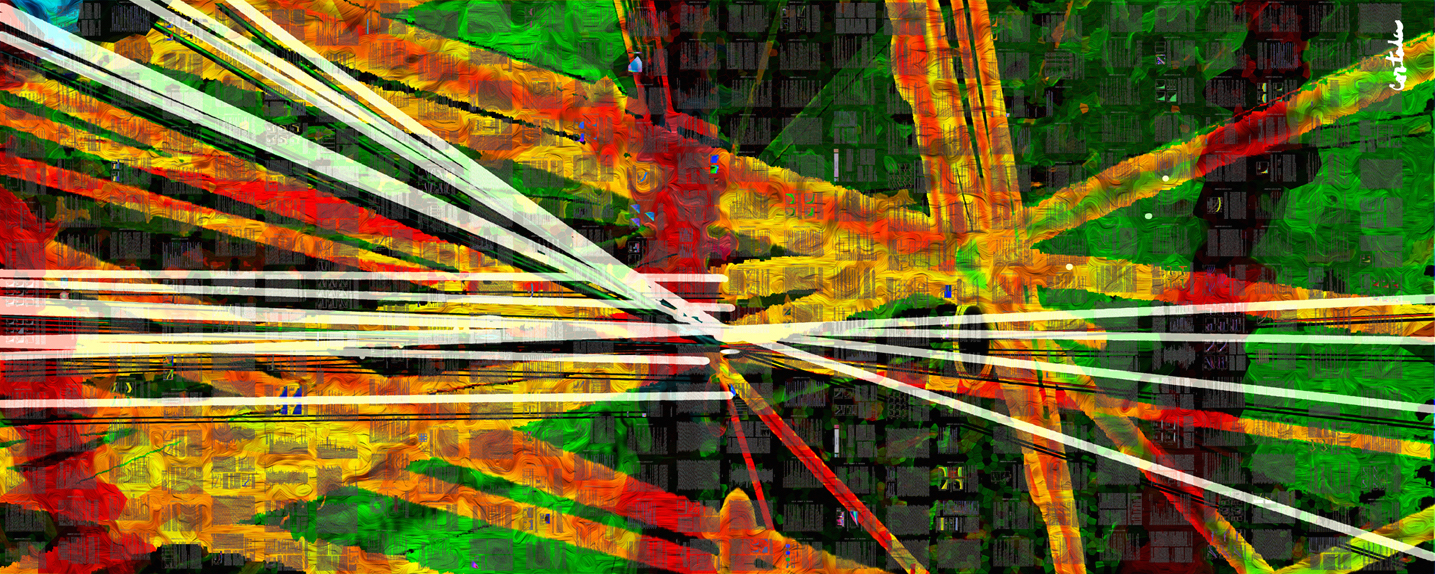

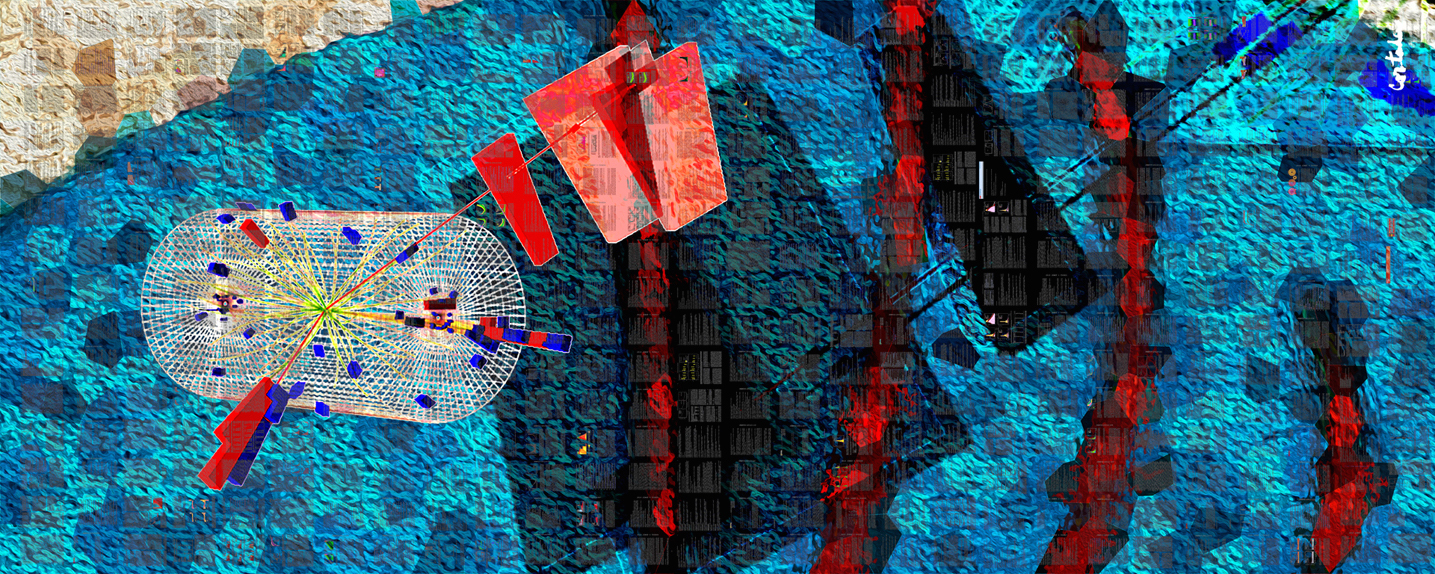

Compact Muon Solenoid

LHC, CERN

CMS-PAS-MLG-23-003

Extension and first application of the ABCDisCo method with LHC data: ABCDisCoTEC

CMS Collaboration

27 March 2025

Abstract: The "ABCD method" provides a reliable framework to estimate backgrounds from observed data by partitioning events into one signal-enhanced region (A) and three background-enhanced control regions (B, C, and D) using two statistically independent variables. In practice, even slight correlations between the two variables can significantly undermine the method's performance, so choosing suitable variables by hand can be a formidable challenge, especially when the background and signal differ only subtly. To address this issue, the ABCDisCo method (ABCD with distance correlation) was developed to construct two artificial variables from the output scores of a neural network trained to maximize signal-background discrimination while minimizing the correlation between them, as measured by the distance correlation. However, relying solely on minimizing the distance correlation can introduce undesirable features in the resulting distributions, which may compromise the validity of the background prediction obtained with this method. The ABCDisCo training enhanced with closure (ABCDisCoTEC) method is introduced as a novel solution to this issue: it directly minimizes the nonclosure, expressed as a dedicated differentiable loss term. This extended method is applied to a data set of proton-proton collisions at a center-of-mass energy of 13 TeV recorded by the CMS detector at the CERN LHC. Additionally, given the complexity of a minimization problem with constraints on multiple loss terms, the modified differential method of multipliers is applied and is shown to greatly improve the stability and robustness of the ABCDisCoTEC method compared with grid-search hyperparameter optimization.
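
To make the ABCD logic concrete, the following NumPy sketch counts events in the four regions defined by cuts on two scores and computes the standard ABCD prediction N_A ≈ N_B N_C / N_D, which holds when the two variables are independent for the background. The region labeling, the helper names (`soft_step`, `abcd_counts`, `abcd_prediction`, `relative_nonclosure`), and the relative nonclosure definition |N_B N_C / N_D − N_A| / N_A are illustrative assumptions, not taken from the CMS analysis. Passing a `temperature` replaces the hard cuts with sigmoids, one common way to obtain smooth region counts; written with an autodiff framework's tensors the same expression would be differentiable, but the exact form of the ABCDisCoTEC closure loss may differ.

```python
import numpy as np

def soft_step(x):
    # Numerically stable logistic sigmoid: 1 / (1 + exp(-x)) == 0.5 * (1 + tanh(x / 2)).
    return 0.5 * (1.0 + np.tanh(0.5 * x))

def abcd_counts(s1, s2, c1, c2, weights=None, temperature=None):
    """Return (N_A, N_B, N_C, N_D) for events with two scores s1, s2.

    Assumed convention: A = pass both cuts (signal-enhanced), B = pass only the
    s1 cut, C = pass only the s2 cut, D = fail both. With a temperature, hard
    cuts are replaced by sigmoids, giving smooth ("soft") region counts.
    """
    s1 = np.asarray(s1, dtype=float)
    s2 = np.asarray(s2, dtype=float)
    w = np.ones_like(s1) if weights is None else np.asarray(weights, dtype=float)
    if temperature is None:
        p1 = (s1 > c1).astype(float)
        p2 = (s2 > c2).astype(float)
    else:
        p1 = soft_step((s1 - c1) / temperature)
        p2 = soft_step((s2 - c2) / temperature)
    n_a = np.sum(w * p1 * p2)
    n_b = np.sum(w * p1 * (1.0 - p2))
    n_c = np.sum(w * (1.0 - p1) * p2)
    n_d = np.sum(w * (1.0 - p1) * (1.0 - p2))
    return n_a, n_b, n_c, n_d

def abcd_prediction(n_b, n_c, n_d):
    # Valid if s1 and s2 are independent for the background.
    return n_b * n_c / n_d

def relative_nonclosure(n_a, n_b, n_c, n_d):
    # Illustrative definition: |predicted - observed| / observed in region A.
    return abs(abcd_prediction(n_b, n_c, n_d) - n_a) / n_a


rng = np.random.default_rng(1)
u1 = rng.uniform(size=100_000)
u2 = rng.uniform(size=100_000)
print(relative_nonclosure(*abcd_counts(u1, u2, 0.5, 0.5)))  # independent: small
print(relative_nonclosure(*abcd_counts(u1, u1, 0.5, 0.5)))  # fully correlated: 1.0
```

For independent background scores the relative nonclosure is compatible with zero up to statistical fluctuations, while fully correlated scores (s2 = s1) leave regions B and C empty, so the prediction collapses to zero and the nonclosure is 1.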

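The decorrelation term in ABCDisCo is based on the distance correlation. A minimal NumPy sketch of the empirical squared distance correlation (the standard biased V-statistic estimator) between two one-dimensional samples is shown below. It builds the double-centered pairwise distance matrices explicitly, so memory grows as O(n²), and it omits the per-event weights that a training implementation would typically include; the function name is hypothetical.

```python
import numpy as np

def distance_correlation_sq(x, y):
    """Empirical squared distance correlation dCor^2(x, y) for 1D samples.

    Uses the biased (V-statistic) estimator: double-centered pairwise
    distance matrices A and B, then dCov^2 = mean(A * B).
    Memory scales as O(n^2); per-event weights are not supported here.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    a = np.abs(x[:, None] - x[None, :])  # pairwise |x_i - x_j|
    b = np.abs(y[:, None] - y[None, :])
    # Double centering: subtract column and row means, add back the grand mean.
    A = a - a.mean(axis=0, keepdims=True) - a.mean(axis=1, keepdims=True) + a.mean()
    B = b - b.mean(axis=0, keepdims=True) - b.mean(axis=1, keepdims=True) + b.mean()
    dcov2_xy = np.mean(A * B)
    dvar2_x = np.mean(A * A)
    dvar2_y = np.mean(B * B)
    denom = np.sqrt(dvar2_x * dvar2_y)
    return 0.0 if denom == 0.0 else float(dcov2_xy / denom)


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=1000)
y_indep = rng.uniform(-1.0, 1.0, size=1000)

print(distance_correlation_sq(x, 3.0 * x - 2.0))  # affine dependence -> 1
print(distance_correlation_sq(x, x**2))           # nonlinear dependence, clearly > 0
print(distance_correlation_sq(x, y_indep))        # independent -> close to 0
print(np.corrcoef(x, x**2)[0, 1])                 # Pearson, near 0 for this case
```

Unlike the Pearson coefficient, which is close to zero for y = x² with x symmetric about zero, dCor² is clearly nonzero for this nonlinear dependence (roughly 0.24 in the large-sample limit for x uniform on [−1, 1]); in that limit it vanishes only for independent variables, which is why it is a natural penalty for decorrelating the two ABCD variables.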

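The modified differential method of multipliers (MDMM), introduced by Platt and Barr, treats selected loss terms as constraints whose Lagrange multipliers are learned alongside the model parameters, instead of fixing their weights by a grid search. The sketch below applies it to a toy equality-constrained problem (minimize x1² + x2² subject to x1 + x2 = 1, whose solution is (0.5, 0.5) with multiplier −1 in this sign convention), using the damped Lagrangian L = f + λg + (c/2)g² with gradient descent on x and gradient ascent on λ. The function name, step size, damping c, and toy objective are assumptions for illustration. In an ABCDisCoTEC-style training, f would play the role of the classification loss and g of a decorrelation or closure term (a hypothetical mapping); inequality constraints, such as keeping a term below a target, would need MDMM's slack-variable extension, which is not shown.

```python
import numpy as np

def mdmm_minimize(grad_f, g, grad_g, x0, lr=0.05, damping=1.0, steps=2000):
    """Minimize f(x) subject to g(x) = 0 with a basic MDMM iteration.

    Damped Lagrangian: L(x, lam) = f(x) + lam * g(x) + 0.5 * damping * g(x)**2.
    x follows gradient descent on L, lam follows gradient ascent on L.
    """
    x = np.asarray(x0, dtype=float).copy()
    lam = 0.0
    for _ in range(steps):
        gx = g(x)                                   # constraint violation
        grad_x = grad_f(x) + (lam + damping * gx) * grad_g(x)
        x = x - lr * grad_x                         # descent on the parameters
        lam = lam + lr * gx                         # ascent on the multiplier: dL/dlam = g(x)
    return x, lam


# Toy problem: minimize x1^2 + x2^2 subject to x1 + x2 - 1 = 0.
x_opt, lam_opt = mdmm_minimize(
    grad_f=lambda v: 2.0 * v,
    g=lambda v: v.sum() - 1.0,
    grad_g=lambda v: np.ones_like(v),
    x0=[0.0, 0.0],
)
print(x_opt, lam_opt)  # approaches [0.5 0.5] and -1.0
```

The damping term (c/2)g² is what distinguishes MDMM from the plain differential method of multipliers: it keeps the descent-ascent dynamics from oscillating around the constrained optimum, which matches the stability argument made in the abstract.
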
Links:

CDS record (PDF) ;

CADI line (restricted) ;

These preliminary results are superseded by this paper, submitted to MLST. The superseded preliminary plots can be found here.
